By Silke von Gemmingen

The inspection of critical infrastructure such as energy plants, bridges, and industrial complexes is essential to ensure their safety, reliability, and long-term functionality. Traditional inspection methods require people to enter areas that are unsafe or difficult to access. Autonomous mobile robots offer great potential for making inspections more efficient, safe, and accurate. Uncrewed aerial vehicles (UAVs), drones in particular, have been recognized as promising platforms because they can be deployed flexibly and can reach, from the air, areas that are otherwise difficult to access.

A drone approaches a high-voltage electrical transmission tower. Courtesy of aau/Müller.

One of the biggest challenges in this use case is navigating the drone precisely, relative to the objects to be inspected, so that it can reliably capture high-resolution image data or other sensor data. A research group at the University of Klagenfurt in Austria has designed a real-time-capable drone based on object-relative navigation using artificial intelligence. Also on board: a USB3 Vision industrial camera from the uEye LE family from IDS Imaging Development Systems GmbH.

As part of the research project, funded by the Austrian Federal Ministry for Climate Action, Environment, Energy, Mobility, Innovation and Technology, the drone had to be able to distinguish independently between different objects. It is programmed to fly around an insulator at a distance of 3 m and take pictures. “Precise localization is important so that the camera recordings can be compared across multiple inspection flights,” said Thomas Georg Jantos, a doctoral student and member of the Control of Networked Systems research group at the University of Klagenfurt.
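The orbit described above can be sketched as a list of evenly spaced viewpoints on a circle around the object. This is a minimal illustration only, assuming an object-centred frame with the insulator at the origin and a forward-facing camera; the `Waypoint` type, the `orbit_waypoints` function, its `height_m` and `n_views` parameters, and the choice of views are hypothetical and not taken from the researchers' mission definitions.

```python
import math
from dataclasses import dataclass


@dataclass
class Waypoint:
    x: float    # metres, in a hypothetical object-centred frame (object at origin)
    y: float
    z: float
    yaw: float  # radians; heading that points a forward-facing camera at the object


def orbit_waypoints(radius_m: float = 3.0, height_m: float = 0.0, n_views: int = 8) -> list[Waypoint]:
    """Evenly spaced viewpoints on a circle of radius_m around the origin.

    Each yaw points from the waypoint back toward the object centre, so the
    camera keeps the object in view at the same distance on every pass.
    """
    if n_views < 1:
        raise ValueError("n_views must be positive")
    waypoints = []
    for k in range(n_views):
        theta = 2.0 * math.pi * k / n_views   # angular position on the circle
        x = radius_m * math.cos(theta)
        y = radius_m * math.sin(theta)
        yaw = math.atan2(-y, -x)              # direction from waypoint to origin
        waypoints.append(Waypoint(x, y, height_m, yaw))
    return waypoints


for wp in orbit_waypoints(n_views=4):
    print(f"x={wp.x:+.2f} m  y={wp.y:+.2f} m  yaw={math.degrees(wp.yaw):+.0f} deg")
```

Generating the viewpoints from fixed parameters rather than flying by hand is what makes repeated flights comparable: the same radius and view count reproduce the same set of camera positions relative to the insulator.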

The prerequisite for this flight pattern is that object-relative navigation must be able to extract so-called semantic information about the objects in question from the raw sensory data captured by the camera. Semantic information makes raw data, such as camera images, understandable and actionable, enabling not only capture of the environment but also the correct identification and localization of relevant objects.
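To make the idea of semantic information concrete, the sketch below assumes a hypothetical per-pixel label mask, of the kind a segmentation network might output, and recovers where an insulator appears in the image. The class IDs, the tiny mask, and `bounding_box` are illustrative assumptions; the article does not state that the Klagenfurt system uses semantic segmentation.

```python
from typing import Optional

# Hypothetical class IDs a model might assign to each pixel.
BACKGROUND, POLE, INSULATOR = 0, 1, 2


def bounding_box(mask: list[list[int]], class_id: int) -> Optional[tuple[int, int, int, int]]:
    """Return (row_min, col_min, row_max, col_max) of pixels labelled class_id, or None."""
    coords = [(r, c) for r, row in enumerate(mask)
              for c, label in enumerate(row) if label == class_id]
    if not coords:
        return None
    rows = [r for r, _ in coords]
    cols = [c for _, c in coords]
    return min(rows), min(cols), max(rows), max(cols)


# A tiny 4x5 label mask: a vertical pole (1) with an insulator (2) to its right.
mask = [
    [0, 0, 1, 0, 0],
    [0, 0, 1, 2, 2],
    [0, 0, 1, 2, 2],
    [0, 0, 1, 0, 0],
]
print(bounding_box(mask, INSULATOR))  # -> (1, 3, 2, 4)
```

The raw RGB values alone say nothing about which pixels belong to the insulator; the label mask adds exactly that, turning the image into something that can be used to localize the object.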

Thomas Jantos with an inspection drone. Courtesy of aau/Müller.

In this case, an image pixel is understood not only as an independent color value (i.e., an RGB value) but also as part of an object, such as an insulator. In contrast to the classic approach based on global navigation satellite systems (GNSS), this method provides the UAV not only with a position in space but also with a precise position and orientation relative to the object being inspected (for example, “the drone is located 1.5 m to the left of the upper insulator”). The key requirement for this capability is that image processing and data interpretation run with minimal latency, so that the drone can adapt its navigation and interaction to the specific conditions and requirements of the inspection task in real time.
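If a vision model estimates the pose of an object relative to the camera, the drone's pose relative to the object follows by inverting that rigid transform. The sketch below assumes a planar (2D) case and a frame convention with x pointing forward and y to the left; a real system works with full 3D poses, and `invert_pose_2d` is a hypothetical helper, not part of the researchers' software.

```python
import math


def invert_pose_2d(x: float, y: float, theta: float) -> tuple[float, float, float]:
    """Invert a planar rigid transform.

    Input:  pose of frame B expressed in frame A (translation x, y; rotation theta).
    Output: pose of frame A expressed in frame B.
    Uses p_A_in_B = -R(theta)^T * t and a rotation of -theta.
    """
    c, s = math.cos(theta), math.sin(theta)
    xi = -(c * x + s * y)
    yi = -(-s * x + c * y)
    return xi, yi, -theta


# Hypothetical estimate: the insulator sits 1.5 m to the camera's right (y = -1.5),
# with its frame aligned with the camera's.
drone_x, drone_y, drone_yaw = invert_pose_2d(0.0, -1.5, 0.0)
```

With these inputs the result places the drone 1.5 m along the insulator's +y axis, i.e. 1.5 m to its left, which is the kind of object-relative statement quoted above and one that no GNSS fix can provide directly.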

Intelligent image processing

Object recognition, object classification, and object pose estimation are performed using artificial intelligence in image processing. “In contrast to GNSS-based inspection approaches using drones, our AI with its semantic information enables the infrastructure to be inspected from certain reproducible viewpoints,” Jantos said. “In addition, the chosen approach does not suffer from the usual GNSS problems such as multipathing and shadowing caused by large infrastructures or valleys, which can lead to signal degradation and therefore safety risks.”

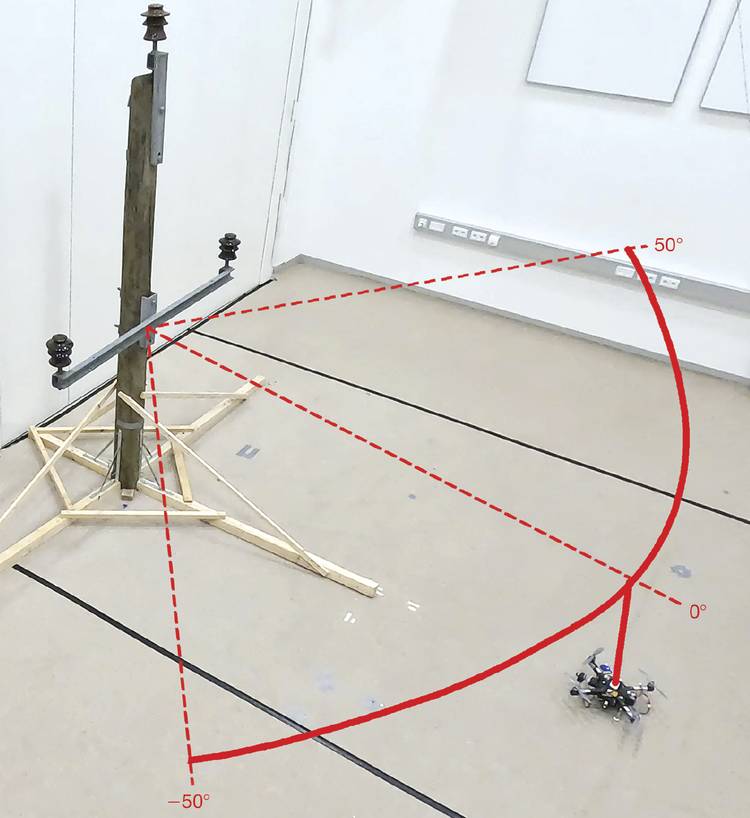

Visualization of the flight path of an inspection flight around an electricity pole model with three insulators, in the research laboratory at the University of Klagenfurt. Courtesy of aau/Müller.

The hardware setup consists of a TWINs Science Copter platform equipped with a Pixhawk PX4 autopilot, an NVIDIA Jetson AGX Orin 64GB Developer Kit as the onboard computer, and a USB3 Vision industrial camera from IDS. “The challenge is to get the artificial intelligence onto the small helicopters,” Jantos said. “The computers on the drone are still too slow compared to the computers used to train the AI. Despite the first successful tests, this is still the subject of current research.”

The camera, on the other hand, delivered excellent base data almost immediately, as tests in the university’s own drone hall demonstrated. Choosing a suitable camera model involved more than just meeting requirements for speed, size, and price.

“The camera’s capabilities are essential for the inspection system’s innovative AI-based navigation algorithm,” Jantos said. He opted for the U3-3276LE C-HQ model, a project camera from the uEye LE family. The integrated Sony Pregius IMX265 sensor (1/1.8-in. optical format) provides a resolution of 3.19 MP (2064 × 1544 pixels) at frame rates of up to 58 fps. Because its global shutter exposes all pixels simultaneously, it avoids the motion distortion that a rolling shutter produces when the drone or the scene moves during image capture. As a navigation camera, the uEye LE provides the embedded AI with the comprehensive image data that the onboard computer needs to calculate the drone’s position and orientation relative to the object being inspected. This allows the drone to correct its pose in real time.
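Why the global shutter matters can be estimated with simple arithmetic. A rolling shutter reads rows one after another, so a feature moving sideways through the image is shifted between the first and last row by roughly its image speed times the frame readout time; a global shutter exposes all rows at once, so this skew is zero. The row count comes from the article, but the line time and image speed below are hypothetical values chosen for illustration, not IMX265 specifications, and `shutter_skew_px` is an illustrative helper.

```python
def shutter_skew_px(image_speed_px_per_s: float, rows: int, line_time_s: float,
                    global_shutter: bool) -> float:
    """Approximate horizontal offset (pixels) between the first and last image row
    for a feature moving sideways at constant image speed."""
    if global_shutter:
        return 0.0                          # all rows exposed at the same instant
    readout_time_s = rows * line_time_s     # rows are read out one after another
    return image_speed_px_per_s * readout_time_s


ROWS = 1544                  # sensor height in pixels (from the article)
LINE_TIME_S = 10e-6          # hypothetical row readout time
IMAGE_SPEED_PX_PER_S = 500   # hypothetical apparent motion of the insulator in the image

for is_global in (False, True):
    skew = shutter_skew_px(IMAGE_SPEED_PX_PER_S, ROWS, LINE_TIME_S, is_global)
    print(f"{'global' if is_global else 'rolling'} shutter: {skew:.2f} px skew")
```

Under these assumed numbers the rolling shutter would skew a vertical edge by about 7.7 pixels across the frame, enough to bias a pose estimate that relies on straight object edges.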

The IDS camera is connected to the onboard computer via USB3. The IDS peak software development kit enables efficient raw image processing and simple adjustment of parameters such as auto exposure, auto white balance, auto gain, and image downsampling.
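The sensor figures quoted above can be checked against the USB3 link with a few lines of arithmetic: 2064 × 1544 pixels is 3,186,816 pixels (about 3.19 MP), and at 58 fps and 8 bits per pixel that is roughly 185 MB/s of raw image data. USB 3.0 SuperSpeed signals at 5 Gbit/s with 8b/10b encoding, which leaves at most 500 MB/s before protocol overhead. The 8-bit and 12-bit pixel depths are assumptions for illustration; the article does not say which pixel format the researchers use, and `raw_data_rate_bytes_per_s` is a hypothetical helper.

```python
def raw_data_rate_bytes_per_s(width: int, height: int, bits_per_pixel: int, fps: float) -> float:
    """Uncompressed sensor output in bytes per second."""
    return width * height * bits_per_pixel / 8 * fps


# 5 Gbit/s line rate, 8b/10b encoding, converted to bytes/s (before protocol overhead).
USB3_GEN1_PAYLOAD_LIMIT = 5e9 * 8 / 10 / 8

pixels = 2064 * 1544
print(f"{pixels:,} pixels = {pixels / 1e6:.2f} MP")
for bpp in (8, 12):  # assumed pixel depths
    rate = raw_data_rate_bytes_per_s(2064, 1544, bpp, 58)
    print(f"{bpp}-bit @ 58 fps: {rate / 1e6:.1f} MB/s "
          f"({rate / USB3_GEN1_PAYLOAD_LIMIT:.0%} of the USB 3 payload limit)")
```

Even the assumed 12-bit case stays well below the link limit, which leaves headroom on the interface; the real bottleneck on the drone is the onboard computer, as Jantos notes.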


To ensure a high level of autonomy, including control, mission management, safety monitoring, and data recording, the researchers run the source-available CNS Flight Stack, developed by the university’s Control of Networked Systems group, on the onboard computer. The CNS Flight Stack includes software modules for navigation, sensor fusion, and control algorithms, and allows reproducible and adjustable missions to be executed autonomously.

Alignment with sensor fusion

The high-frequency signals used to control the drone come from the inertial measurement unit (IMU). Fusing them with camera data, lidar, and GNSS enables real-time navigation and stabilization of the drone, for example, for position corrections or precise alignment with inspection objects. For the Klagenfurt drone, the IMU of the PX4 autopilot is used as the dynamic model in an extended Kalman filter. Based on the last known position, velocity, and attitude, the filter predicts where the drone should be at a given time. Data from the IMU and camera, captured at up to 200 Hz, is then incorporated into the state estimation process.
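The predict-then-correct cycle described above can be illustrated with a deliberately simplified filter. The sketch below is a linear Kalman filter for a single axis, not the extended Kalman filter used on the Klagenfurt drone: IMU acceleration drives the prediction at 200 Hz, and a camera-derived position measurement corrects it at a hypothetical 20 Hz. The noise variances, the camera rate, and the names `AxisKalmanFilter` and `simulate` are assumptions for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class AxisKalmanFilter:
    """Linear Kalman filter for one axis with state [position, velocity]."""
    pos: float = 0.0                 # position estimate (m)
    vel: float = 0.0                 # velocity estimate (m/s)
    P: list = field(default_factory=lambda: [[1.0, 0.0], [0.0, 1.0]])  # state covariance
    accel_var: float = 0.5           # hypothetical accelerometer noise variance
    meas_var: float = 0.01           # hypothetical camera position noise variance (m^2)

    def predict(self, accel: float, dt: float) -> None:
        """Propagate with an IMU acceleration sample: x <- F x + G a, P <- F P F^T + Q."""
        self.pos += self.vel * dt + 0.5 * accel * dt * dt
        self.vel += accel * dt
        P, q = self.P, self.accel_var
        g0, g1 = 0.5 * dt * dt, dt   # G = [dt^2/2, dt], Q = G G^T * accel_var
        self.P = [
            [P[0][0] + dt * (P[0][1] + P[1][0]) + dt * dt * P[1][1] + g0 * g0 * q,
             P[0][1] + dt * P[1][1] + g0 * g1 * q],
            [P[1][0] + dt * P[1][1] + g1 * g0 * q,
             P[1][1] + g1 * g1 * q],
        ]

    def update(self, measured_pos: float) -> None:
        """Correct with a position measurement (H = [1, 0])."""
        P = self.P
        s = P[0][0] + self.meas_var              # innovation variance
        k0, k1 = P[0][0] / s, P[1][0] / s        # Kalman gain
        innovation = measured_pos - self.pos
        self.pos += k0 * innovation
        self.vel += k1 * innovation
        self.P = [                               # P <- (I - K H) P
            [P[0][0] - k0 * P[0][0], P[0][1] - k0 * P[0][1]],
            [P[1][0] - k1 * P[0][0], P[1][1] - k1 * P[0][1]],
        ]


def simulate(duration_s: float = 4.0, imu_hz: int = 200, camera_every: int = 10,
             accel: float = 0.2):
    """Noise-free demo: the filter starts 1 m off and converges to the true trajectory."""
    dt = 1.0 / imu_hz
    kf = AxisKalmanFilter()                      # believes it starts at pos = 0
    true_pos, true_vel = 1.0, 0.0                # actual start is 1 m away
    for step in range(1, int(duration_s * imu_hz) + 1):
        true_pos += true_vel * dt + 0.5 * accel * dt * dt
        true_vel += accel * dt
        kf.predict(accel, dt)                    # IMU sample every step
        if step % camera_every == 0:
            kf.update(true_pos)                  # camera fix at imu_hz / camera_every
    return kf, true_pos, true_vel


kf, true_pos, true_vel = simulate()
print(f"estimate {kf.pos:.3f} m vs truth {true_pos:.3f} m")
```

The split mirrors the text: the fast IMU keeps the state current between images, while the slower camera measurements pull accumulated error back toward the object-relative truth.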

The USB3 Vision industrial camera U3-3276LE Rev.1.2. Courtesy of IDS.

“With regard to research in the field of mobile robots, industrial cameras are necessary for a variety of applications and algorithms. It is important that these cameras are robust, compact, lightweight, fast, and have a high resolution,” Jantos said. “On-device preprocessing — for example, binning — is also very important, as it saves valuable computing time and resources on the mobile robot.”
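Binning combines neighbouring pixels into one, trading resolution for less data to transfer and process. On the camera this happens on the device; the sketch below merely emulates 2×2 binning in software, by averaging each 2×2 block, to show the effect on image size. Whether a particular camera mode sums or averages pixels is not covered by the article, so `bin2x2` is an illustrative assumption.

```python
def bin2x2(image: list[list[int]]) -> list[list[int]]:
    """Average each non-overlapping 2x2 block (integer division) into one output pixel."""
    height = len(image)
    width = len(image[0]) if height else 0
    if height % 2 or width % 2:
        raise ValueError("image dimensions must be even for 2x2 binning")
    return [
        [(image[r][c] + image[r][c + 1] + image[r + 1][c] + image[r + 1][c + 1]) // 4
         for c in range(0, width, 2)]
        for r in range(0, height, 2)
    ]


small = [[1, 3, 5, 7],
         [1, 3, 5, 7]]
print(bin2x2(small))  # -> [[2, 6]]

full_w, full_h = 2064, 1544
binned_w, binned_h = full_w // 2, full_h // 2
print(f"{full_w}x{full_h} -> {binned_w}x{binned_h}: "
      f"{full_w * full_h // (binned_w * binned_h)}x fewer pixels to process")
```

Doing this step in the camera rather than on the Jetson means the onboard computer receives a quarter of the pixels, which is the saving in computing time and resources that Jantos describes.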

Meet the author

Silke von Gemmingen is a corporate and product communications specialist at IDS Imaging Development Systems GmbH; email: s.gemmingen@ids-imaging.com.