In the factories of the future, vision systems will supply data that adjusts processes in real time to minimize the occurrence of downtime and out-of-spec parts.

HANK HOGAN, CONTRIBUTING EDITOR

Smart factories require collecting information about products, processes, and people to achieve highly automated manufacturing. In this undertaking, machine vision will supply key data.

Traditionally, vision technology has performed parts inspection, product tracking, or robot guidance. Because of smart factories’ increasing emphasis on process control and wide sharing of vision outputs, vision systems now face new demands.

Highly automated and running with minimal human intervention, a smart factory will depend on machine vision to inspect parts and control processes. Courtesy of Cognex

“Successful smart factory initiatives will need smooth, multilevel data flow between devices, machines, and enterprise systems,” said Gaurav Sharma, product marketing manager at vision solution supplier Cognex.

Sharma said customers expect data and device management not just on a single production line but across all lines in a facility, and often across multiple facilities. Customers also want information to flow among a variety of industrial systems, and they want that information to be controlled and accessible from anywhere.

Such vision capabilities, he said, provide a means for monitoring the health of manufacturing processes and systems. A drop in read rates for a barcode scanner, for instance, may indicate a failing label printer. Factory managers, or perhaps automated systems themselves, can then schedule preventive maintenance, thereby avoiding costly unplanned downtime.
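The read-rate example can be sketched in a few lines of Python. This is only an illustration, not any vendor's implementation: the `ReadRateMonitor` class, the 100-read window, and the 95% alert threshold are all assumptions chosen for the example.

```python
from collections import deque


class ReadRateMonitor:
    """Rolling read rate for one barcode scanner (hypothetical helper)."""

    def __init__(self, window=100, alert_below=0.95):
        # Keep only the most recent `window` read attempts.
        self.results = deque(maxlen=window)
        # Assumed threshold; a real line would tune this from its own history.
        self.alert_below = alert_below

    def record(self, read_ok):
        self.results.append(bool(read_ok))

    def read_rate(self):
        if not self.results:
            return None
        return sum(self.results) / len(self.results)

    def needs_maintenance(self):
        # Wait for a full window so a few early misreads do not raise an alert.
        if len(self.results) < self.results.maxlen:
            return False
        return self.read_rate() < self.alert_below


monitor = ReadRateMonitor(window=100, alert_below=0.95)

# Simulated healthy period: every label reads.
for _ in range(100):
    monitor.record(True)
healthy = monitor.needs_maintenance()

# Simulated degradation: every tenth label fails to read.
for i in range(100):
    monitor.record(i % 10 != 0)
degraded = monitor.needs_maintenance()
```

In practice, the alert would feed a maintenance scheduling system rather than a local variable, and the drop would still need to be traced to its cause, such as the label printer in the example above.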

Lights-out operation

The ultimate goal of a smart factory is to be able to run lights-out production, with as little human intervention as possible, said Steven Wardell, director of imaging at systems integrator ATS Automation in Cambridge, Ontario, Canada. Vision will play a key role in achieving this capability, so the vision fundamentals of resolution and contrast still hold, and lighting, optics, and cameras remain critical to success.

“It’s not to just get a good image so that you’ve got the things that you’re looking for properly contrasted, but that it’s robust,” Wardell said. “It’s got to work 99.9% of the time.”

The requirements of vision system users change over time, he said. A company may switch a part color from blue to yellow. It may decide to inspect for finer defects because doing so reveals hidden problems and reduces customer returns. In such situations, the system also has to change, perhaps by using different lighting or by moving an inspection point. Mounting cameras and lights on robot arms can accomplish these tasks.

Thus, it’s important to design for flexibility, Wardell said. While the goal may be to reduce the need for people on the floor, he added, a few people will likely still need to be present. Vision can help track their location, as well as that of machines such as forklifts. This information can be used to improve workplace safety and workflow.

Vision provides data across multiple production lines and devices in smart factories. Courtesy of Stemmer Imaging

According to Sam Lopez, senior vice president of sales and marketing at Matrox Imaging in Dorval, Quebec, Canada, a key feature of a vision system is its ability to communicate with other devices within the factory, as well as with other factories and the cloud. In the past, systems integrators implemented such connections. Now, machine vision suppliers such as Matrox Imaging incorporate modules into their products to enable communications among vision systems and robots, industrial computers, and other smart devices.

“We’ve taken care of all of that for our customers, including how to communicate with PLCs (programmable logic controllers),” Lopez said.

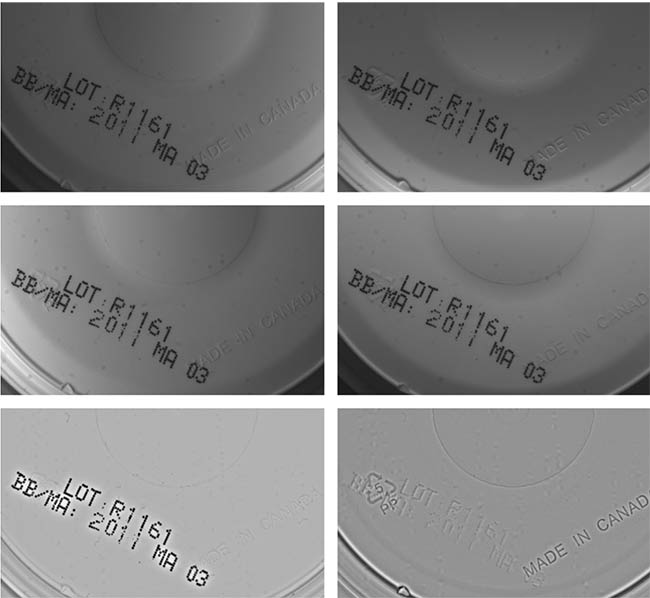

Connected devices and intelligent light solutions in a smart factory may make it easier to handle difficult vision tasks, such as reading stamped or embossed markings on a tire or a can of food. Photometric stereo lighting is one such solution that can be used to create shadows to help make markings more visible. A smart factory’s interconnectedness can make implementing such a solution easier, while meeting throughput demands.

Deciding which data, features, and parameters in a smart factory should be tracked (a decision that drives the levels of imaging performance, data storage, and analysis that are needed) is important, said James Reed, vision product manager at LEONI, based in Lake Orion, Mich.

A badge mounted on the doorframe of a car may contain a string of characters that describes many product parameters and identifies the product, he said. Analyzing the image data may reveal, for example, that the third character fails inspection more often than other characters, and that the defect is most likely due to a particular aspect of the badge-making process being out of spec. This information can then reveal when a die that produces the character is wearing out. The die can then be replaced to avoid downtime and improve productivity.
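A per-character tally of this kind is simple to compute once the vision system reports a pass/fail result for each character position. The sketch below assumes that format; the function names and the sample data are invented for illustration.

```python
from collections import Counter


def failures_by_position(inspections):
    """Count failed inspections per character position (1-based).

    `inspections` is an iterable of lists of booleans, one list per badge,
    where True means the character at that position passed.
    """
    counts = Counter()
    for result in inspections:
        for position, passed in enumerate(result, start=1):
            if not passed:
                counts[position] += 1
    return counts


def worst_position(counts):
    """Return the position with the most failures, or None if there are none."""
    return counts.most_common(1)[0][0] if counts else None


# Invented sample: four badges, four characters each.
sample = [
    [True, True, False, True],
    [True, True, True, True],
    [True, True, False, True],
    [True, False, True, True],
]
counts = failures_by_position(sample)
worst = worst_position(counts)  # position 3 fails most often in this sample
```

Tracking how the count for one position grows over time, rather than a single snapshot, is what would point to gradual die wear.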

Often, LEONI’s vision solution will include storing uncompressed image data in a local industrial PC for a few days, followed by compressing the data to save space. This approach creates a visual record for a human to review.

However, high-speed production typically requires collecting only the data derived from image analysis, which may be only a few bytes per parameter, to perform statistical process control. The size of the uncompressed image itself, in contrast, may be tens of megabytes.
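To show how little data statistical process control needs, the following sketch flags measurements that fall outside three-sigma control limits. It is a simplified illustration: the measurement values are invented, and estimating sigma from the sample standard deviation of a baseline run is an assumption made for brevity (production control charts often estimate it from moving ranges or subgroups instead).

```python
from statistics import mean, stdev


def control_limits(baseline, k=3.0):
    """Return (lower, center, upper) limits from an in-control baseline run."""
    center = mean(baseline)
    sigma = stdev(baseline)  # simplification; see the note above
    return center - k * sigma, center, center + k * sigma


def out_of_control(values, limits):
    """Return the indices of measurements outside the control limits."""
    lower, _, upper = limits
    return [i for i, v in enumerate(values) if v < lower or v > upper]


# Invented baseline: one measured dimension, in millimeters.
baseline = [10.02, 9.98, 10.01, 9.99, 10.00, 10.03, 9.97, 10.00]
limits = control_limits(baseline)

# New measurements from the line; the third one has drifted.
flagged = out_of_control([10.01, 9.99, 10.25, 10.00], limits)
```

Each measurement here is a single floating-point number, which is why this kind of monitoring can keep pace with high-speed production when archiving full images cannot.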

A vision system uses photometric stereo lighting to capture embossed or stamped markings on cans. This lighting highlights features that are otherwise difficult or impossible to spot with a vision system and could be more widely deployed in smart factories. Courtesy of Matrox Imaging.

The analysis performed by a vision system, and the determination of which parameters in the sensor data correlate to specific manufacturing defects, may best be handled by neural net-based deep learning, along with other AI technologies. These analytics may be run at or near the network edge. This raises the question of how best to achieve the lowest cost of owning smart factory vision systems.

Error detection rates to 99%

Chipmaker Intel has worked with companies around the world to automate processes and solve various manufacturing problems, and thereby reduce costs. Often, the solutions involve vision. Humans successfully spot only one in five defects on an automotive part, according to Brian McCarson, vice president in the IoT group of the Santa Clara, Calif.-based company. He said a vision system that is engineered with the appropriate lighting, optics, and sensors, however, can push this detection rate to over 99%.

An interconnected smart factory could operate with few people, while the data generated and the mechanisms of control could be accessed from anywhere. Courtesy of Intel/Adobe stock.

According to McCarson, connecting as many as 32 cameras to a local industrial PC allows the cameras to be relatively “dumb” and therefore inexpensive, which helps reduce the total cost of system ownership. The PC performs image analysis by using a machine learning-derived inference engine to classify parts.

“It can be running all the inference needs on that one box, that one industrial PC, processing through that CPU and GPU that’s built in it,” he said.

An industrial PC can also handle the complex task of understanding the many computer languages running on the factory floor, as well as communicating with manufacturing execution systems.

Mark Williamson, managing director of machine vision technology provider Stemmer Imaging of Tongham, England, said that in the future, such communications may include collecting data beyond image files or the information extracted from them. For instance, the OPC UA (Open Platform Communications Unified Architecture) machine vision working group is in discussions about other data that could be useful to share in the context of the wider smart factory.

“As a minimum, the inspection data being fed back to the factory could report the vision system configuration settings,” Williamson said.

Vision systems supply data that can be used to adjust the manufacturing process to reduce out-of-spec parts. Courtesy of Intel/Adobe stock.

He noted that vision systems usually sit on the edge of the manufacturing network and generally feed only pass/fail results or specific measurements back to the wider factory. Returning actual image data, which may run to tens of megabytes for a multimegapixel image, would put a large burden on the network and infrastructure.
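A back-of-the-envelope calculation makes the difference concrete. The sensor size, bit depth, part rate, and result format below are assumptions picked for illustration, not figures from Williamson.

```python
def raw_image_bytes(width_px, height_px, bits_per_pixel=8, channels=1):
    """Size of one uncompressed image in bytes."""
    return width_px * height_px * channels * bits_per_pixel // 8


def link_load_mbps(bytes_per_part, parts_per_second):
    """Sustained network load in megabits per second."""
    return bytes_per_part * 8 * parts_per_second / 1e6


# Assumed camera: roughly 12 megapixels, 8-bit, three color channels.
image_bytes = raw_image_bytes(4096, 3000, bits_per_pixel=8, channels=3)

# Assumed result record: one pass/fail byte plus four 32-bit measurements.
result_bytes = 1 + 4 * 4

# Assumed line speed: 10 parts per second.
image_load = link_load_mbps(image_bytes, 10)    # about 2,949 Mbps
result_load = link_load_mbps(result_bytes, 10)  # about 0.001 Mbps
```

Under these assumptions, a single raw image is about 37 MB, and streaming every image from one camera would exceed a gigabit link, while the results alone are negligible.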

According to Williamson, it is more likely that vision systems will send all measurement data to an AI-based smart factory solution that could monitor for possible problems and therefore prevent system failures. Another future advancement may involve the use of computational imaging to address shortcomings with lighting. In computational imaging, multiple images are taken and combined to create a hybrid image, which can identify a defect that otherwise could not be detected. Because of the computational burden of this approach, a PC-based system is usually used, Williamson said.

Finally, since the smart factory concept includes the idea of self-correcting manufacturing, there is a growing desire to use vision as part of an automated process-control loop. In this approach, the vision system supplies data that could then be used to adjust the manufacturing process, thereby helping to reduce or eliminate the occurrence of out-of-spec parts. To avoid costly errors, said Alex Shikany, vice president of membership and business intelligence at the Association for Advancing Automation trade group, the vision data would have to be of high quality, and the software that uses the data to fine-tune the process would have to be virtually error-free.
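In its simplest form, such a loop could look like the sketch below, in which a vision measurement nudges a machine setpoint toward a target. Everything here is hypothetical: the proportional gain, the deadband, the step limit, and the stand-in `simulated_process` function are assumptions, and a real controller would add validation of the vision data before acting on it.

```python
def correction(measured, target, gain=0.5, deadband=0.01, max_step=0.1):
    """Return a setpoint adjustment based on one vision measurement."""
    error = target - measured
    # Ignore errors within the deadband so measurement noise is not chased.
    if abs(error) <= deadband:
        return 0.0
    # Limit each adjustment so one bad measurement cannot cause a large swing.
    step = gain * error
    return max(-max_step, min(max_step, step))


def simulated_process(setpoint, offset=0.08):
    """Stand-in for the real process: output drifts by a constant offset."""
    return setpoint + offset


target = 5.00
setpoint = 5.00
history = []
for _ in range(10):
    measured = simulated_process(setpoint)  # what the vision system reports
    history.append(measured)
    setpoint += correction(measured, target)
```

The step limit and deadband reflect Shikany's point: the loop should fail gently when the vision data is noisy or wrong.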

“The value proposition of getting to where decisions can be made autonomously reliable, with or without human intervention, is tremendous,” he said about efforts within the industry to close the process-control loop.

Discussing the desire for a correct-decision success rate that exceeds 99%, Shikany said, “Perfection is an ideal that drives many automation innovators in our space.”