The advent of less expensive and higher-performing 3D vision, coupled with innovations in light mounting and software, is clearing hurdles for robots to better perform unstructured bin picking.

HANK HOGAN, CONTRIBUTING EDITOR

For robots, unstructured bin picking has traditionally been challenging, if not effectively impossible. Part of the reason is mechanical, since getting a robotic arm into a bin and securing its grip on a part to pick can be difficult. Another problem, however, is the time needed for standard 2D vision to locate a part and position the robot to make a successful pick.

A robot arm picks random objects from a bin. A 3D camera sits above and shines structured light (blue) on the bin to help identify and locate objects to be picked. Courtesy of FANUC.

Now the advent of less expensive and higher-performing 3D vision technology makes random bin picking much more practical, even more so when 3D imaging is combined with 2D technology and with innovations in camera and light mounting or in software.

In unstructured bin picking, the biggest hurdle is often the geometry of the parts, said James Reed, vision product manager at automation systems integrator LEONI. Grabbing one part and pulling it out often leads to more parts coming out of the bin, much like what happens in the children’s game Barrel of Monkeys.

The geometry of the part also plays a role in choosing between 2D and 3D vision, with distinct advantages for each technology, Reed said. “2D is typically faster. The algorithms and processing are faster. The image acquisition is faster.”

But, he said, “The more complex the geometries on the part are, the stronger 3D seems to work.”

Picking complex parts

When complex parts need to be picked out of a bin and placed on a conveyor in a particular orientation for later processing, 2D vision alone can perform the task. But the procedure requires repeatedly picking the part up, setting it down, imaging it, and moving it again until it reaches the required orientation.

Multiple touches and movements of a part take time. Even though acquiring any one image is quick, the total time required is too long, and the part must be sturdy enough to withstand repeated handling without damage. Together, these factors may make a 3D vision system less complicated and less costly than the 2D alternative, while also offering higher performance, Reed said.

When selecting the best vision approach to unstructured bin picking, it is important to consider the total picture, said Edward Roney, national account manager for intelligent robotics at FANUC America of Rochester Hills, Mich. The company makes robots, vision systems, and other automation products.

“We don’t see a lot of slow bin-picking applications,” Roney said, describing the requirements that any vision solution must meet.

This means that predictable cycle time is critical, placing a premium on reliable part picking in a fixed period of time. Given these requirements, he said, “That favors 3D. 3D gives you more information.”

While 3D vision is increasingly preferred for bin picking, 2D vision still has its place. Sometimes a combined 2D/3D approach works best. When gripping a single part and pulling it out of a bin leads to other parts coming out as well, for instance, one solution is to place the entire group in a drop area and use 2D vision to guide a robot that separates the parts from one another.

3D color imaging is used in random bin picking because color helps to differentiate one part from another. Courtesy of Zivid.

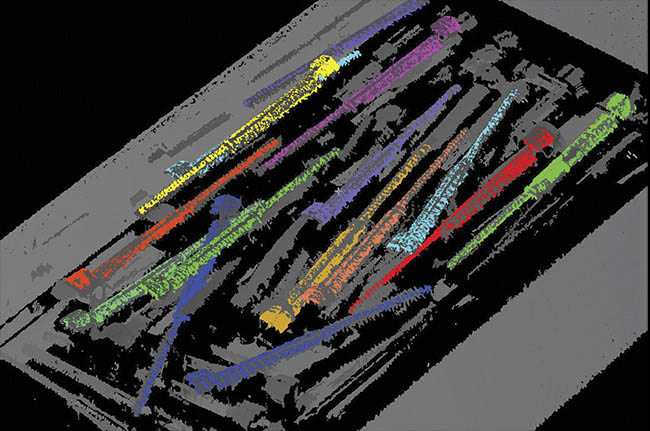

A 3D point cloud helps software to locate objects, even if the point cloud is incomplete. Such object localization aids random bin picking. Courtesy of MVTec Software.

Such a multistep method may be necessary due to nonvision-related constraints. A robot has to avoid running into bin walls or other objects, a requirement that itself limits arm movement and may make working a single part free from a bin too slow and costly.
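The wall constraint can be illustrated with a simplified check that treats the gripper as a circle in the bin’s floor plane, rejects candidate picks that come too close to any wall, and then chooses the highest remaining candidate. This is a hypothetical sketch, not a vendor algorithm: it ignores the arm’s full geometry, the approach angle, and collisions with other parts, and the bin dimensions, gripper radius, and margin are made-up values.

```python
def clear_of_walls(x_mm, y_mm, bin_len_mm, bin_wid_mm, gripper_radius_mm, margin_mm=5.0):
    """Return True if a round gripper centered at (x, y) keeps a safety margin
    from every bin wall. Coordinates are in a hypothetical bin frame with the
    inner corner at (0, 0)."""
    reach = gripper_radius_mm + margin_mm
    return (reach <= x_mm <= bin_len_mm - reach) and (reach <= y_mm <= bin_wid_mm - reach)


def choose_pick(candidates, bin_len_mm, bin_wid_mm, gripper_radius_mm):
    """Return the highest (x, y, z) candidate that clears the walls, or None."""
    ok = [c for c in candidates
          if clear_of_walls(c[0], c[1], bin_len_mm, bin_wid_mm, gripper_radius_mm)]
    return max(ok, key=lambda c: c[2]) if ok else None


# A part pressed against the wall at x = 10 mm is skipped even though it sits highest.
print(choose_pick([(10, 100, 50), (200, 150, 40), (300, 100, 30)], 400, 300, 20))
```

Skipping the highest part when it is wedged against a wall is exactly the kind of trade-off that can make working a single part free too slow, which is why a drop area may be preferable.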

Lighting is king

FANUC’s latest 3D vision system captures images using a single shot of structured light. The system measures distortions in the projected light pattern to yield an object’s point cloud, the set of 3D coordinates of points measured on its surface. As with 2D vision, lighting is king, Roney said, so proper illumination is critical to obtaining sufficiently precise vision data.
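The article does not describe how FANUC’s system turns pattern distortion into coordinates. A common textbook approach treats the camera and projector as a rectified stereo pair: the shift (disparity) d of a pattern feature gives depth Z = fB/d, and each pixel is then back-projected through a pinhole camera model. The sketch below assumes that model; the focal length, baseline, and intrinsics (fx, fy, cx, cy) are hypothetical calibration values, not FANUC parameters.

```python
import numpy as np


def depth_from_disparity(disparity_px, focal_px, baseline_mm):
    """Triangulate depth (mm) from the observed shift of the projected pattern.

    Assumes a rectified camera-projector pair, so Z = f * B / d.
    Pixels with zero or negative disparity (pattern not found) become NaN.
    """
    d = np.asarray(disparity_px, dtype=float)
    z = np.full(d.shape, np.nan)
    valid = d > 0
    z[valid] = focal_px * baseline_mm / d[valid]
    return z


def depth_to_point_cloud(depth_mm, fx, fy, cx, cy):
    """Back-project a depth map into an (N, 3) array of XYZ points (mm)."""
    h, w = depth_mm.shape
    v, u = np.mgrid[0:h, 0:w]  # row (v) and column (u) index of every pixel
    z = depth_mm
    x = (u - cx) * z / fx
    y = (v - cy) * z / fy
    pts = np.stack([x, y, z], axis=-1).reshape(-1, 3)
    return pts[~np.isnan(pts).any(axis=1)]  # drop pixels with no depth
```

For example, with a 1000-pixel focal length and a 100 mm baseline, a 20-pixel shift corresponds to a depth of 5000 mm. Pixels where the pattern is lost, such as glare on shiny parts, simply drop out of the cloud, which is one reason illumination quality matters so much.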

This imaging is typically performed when the robot arm is out of the way, leaving the parts unobscured. Such an approach reduces overall cycle time because image acquisition takes place while the robot arm is doing something else. Imaging and selection of the next part to be picked, for example, can occur while the arm is moving the part last picked to another location.
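The cycle-time benefit of imaging while the arm is busy elsewhere can be seen with a simple two-stage model. This is an illustrative sketch, not a FANUC timing model; the stage durations are hypothetical, and the model ignores robot acceleration, gripper actuation, and failed picks.

```python
def cycle_time_s(image_s, process_s, move_s, overlapped):
    """Per-pick cycle time for a simple two-stage model.

    Sequential: the arm waits while the bin is imaged and the next pick is computed.
    Overlapped: imaging and processing run while the arm places the previous part,
    so the slower of the two stages sets the pace.
    """
    vision_s = image_s + process_s
    if overlapped:
        return max(vision_s, move_s)
    return vision_s + move_s
```

With 0.25 s to acquire an image, 0.75 s to find the next pick, and 2.0 s to move the previous part, a sequential cycle takes 3.0 s (1200 picks/h), while the overlapped cycle takes 2.0 s (1800 picks/h).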

There may be performance-related reasons to mount the camera, lighting, or both on the robot arm that is doing the bin picking, said Raman Sharma, vice president of sales and marketing for the Americas at Zivid, a 3D industrial machine vision system maker. Sharma said people move their heads slightly from side to side to gain somewhat different views when trying to capture details about and identify a visually challenging collection of objects, such as a bin full of shiny metal cylinders.

One way to use the same method in machine vision is to mount a 3D camera on the robot arm itself. Sharma said this arrangement will become more popular because of the capabilities it allows, such as being able to alter the camera angle.

“You tilt the camera in one direction, capture the scene. You tilt it in another, capture another angle. Those 3D images can then be merged together, and you get a complete image of an object that was challenging at first glance,” he said.
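Merging views taken from different camera angles requires expressing every capture in a common frame. A minimal sketch, assuming each capture comes with a known 4x4 camera pose in the robot base frame (in practice derived from the arm’s kinematics plus a hand-eye calibration), is to transform the points, concatenate them, and thin the overlap with a voxel grid. Real systems typically also refine the alignment, for example with ICP; that step is omitted, and the function names and voxel size are hypothetical.

```python
import numpy as np


def to_base_frame(points_cam, T_base_cam):
    """Map (N, 3) points from the camera frame into the robot base frame.

    T_base_cam is the 4x4 homogeneous pose of the camera at capture time
    (assumed known from the arm's kinematics and hand-eye calibration).
    """
    homog = np.hstack([points_cam, np.ones((len(points_cam), 1))])
    return (homog @ T_base_cam.T)[:, :3]


def merge_views(views, voxel_mm=1.0):
    """Merge a list of (points_cam, T_base_cam) captures into one cloud.

    Points from different views that fall into the same voxel are collapsed
    to a single representative so the overlapping region is not double-counted.
    """
    merged = np.vstack([to_base_frame(p, T) for p, T in views])
    keys = np.floor(merged / voxel_mm).astype(np.int64)
    _, first = np.unique(keys, axis=0, return_index=True)
    return merged[np.sort(first)]
```

Each tilted capture fills in surfaces that were shadowed or washed out in the others, so the merged cloud covers more of the part than any single view.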

To make mounting a camera at the end of a robot arm feasible, Zivid substantially reduced the size of its latest system and cut its weight to less than 850 g, significantly lowering the load it places on the arm. The company’s vision systems come with integrated structured-light illumination that is strong enough to overcome the ambient lighting found on factory floors, in warehouses, and in other industrial settings. Sharma said embedding the lighting improves the quality and consistency of the 3D point cloud output.

In addition to on-arm mounting of vision systems, he predicted that 3D color imaging will become more important to bin picking because color is often a significant feature for differentiating among parts. He also said software, such as neural nets and other AI tools, will become increasingly important.
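As a minimal illustration of why color helps, the sketch below assigns each colored 3D point to the nearest of a few reference part colors using Euclidean distance in RGB. The part names and reference colors are hypothetical, and a production system would more likely use a calibrated color space or a trained classifier.

```python
import numpy as np


def label_by_color(rgb, reference_colors):
    """Assign each colored 3D point to the nearest reference part color.

    rgb: (N, 3) array of point colors in 0-255.
    reference_colors: dict mapping a (hypothetical) part name to an (r, g, b) tuple.
    Returns a list with one part name per point.
    """
    names = list(reference_colors)
    refs = np.array([reference_colors[n] for n in names], dtype=float)
    # Distance from every point color to every reference color, shape (N, K).
    dist = np.linalg.norm(rgb[:, None, :].astype(float) - refs[None, :, :], axis=2)
    return [names[i] for i in dist.argmin(axis=1)]
```

Two parts with identical shape but different caps, for instance, are indistinguishable in a geometry-only point cloud but separate cleanly once each point carries a color.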

Software helps make up for some hardware limitations, for example by effectively extending dynamic range. A shiny metal cylinder illuminated from above will have a single bright line running down it, flanked by two relatively dark bands. Sharma said that 3D cameras can capture an image of the cylinder, and software can then process the image while taking this known characteristic into account. The combination helps enable robust bin picking.
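One way software could exploit the known appearance of a shiny cylinder is to locate the bright specular stripe in each image row and fit a line through the peaks to estimate the cylinder’s axis in the image. This is a hypothetical illustration of using prior knowledge, not Zivid’s method; it assumes the cylinder runs roughly top to bottom in the image, and the `min_peak` threshold is an arbitrary placeholder.

```python
import numpy as np


def specular_stripe_axis(intensity, min_peak=200):
    """Estimate the image-space axis of a shiny cylinder from its specular stripe.

    Assumes each image row crosses the cylinder once, showing one bright peak
    flanked by darker bands. Rows whose peak is below min_peak are ignored.
    Returns (slope, intercept) of the fitted line column = slope * row + intercept.
    """
    rows = np.arange(intensity.shape[0])
    peak_cols = intensity.argmax(axis=1)   # brightest column in each row
    peak_vals = intensity.max(axis=1)
    keep = peak_vals >= min_peak
    if keep.sum() < 2:
        raise ValueError("stripe not found in enough rows")
    slope, intercept = np.polyfit(rows[keep], peak_cols[keep], 1)
    return slope, intercept
```

Because the stripe is expected rather than treated as noise, the saturated line becomes a feature that pins down the cylinder’s orientation instead of a defect in the data.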

Overcoming reflectivity

Bin picking of reflective objects can be a challenge, said Luzia Beisiegel, product owner of the HALCON Library at machine vision solution supplier MVTec Software. She said, however, that there are approaches that ease the software burden of handling reflectivity and other issues.

In general, Beisiegel advocated employing techniques that take advantage of the geometry of the situation or other information that is known ahead of time. When parts are randomly arranged on a conveyor belt, or they have little depth to them, such geometry-based information may make it possible to locate parts using only 2D images.
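For flat parts on a conveyor, the known geometry means every part lies approximately on the belt plane, so a single planar homography can map image pixels to belt coordinates. The sketch assumes the 3x3 homography `H` was obtained earlier by calibration and that a binary mask of the part is already available from segmentation; both function names are hypothetical and are not HALCON operators.

```python
import numpy as np


def pixel_to_belt(u, v, H):
    """Map image pixel (u, v) to (x, y) in mm on the conveyor plane.

    H is a 3x3 image-to-plane homography from a prior calibration (assumed).
    Valid only for points on the belt plane, i.e. parts with negligible height.
    """
    p = H @ np.array([u, v, 1.0])
    return p[0] / p[2], p[1] / p[2]


def part_position_on_belt(mask, H):
    """Centroid of a segmented part's pixels, mapped onto the belt plane."""
    v, u = np.nonzero(mask)  # rows are v, columns are u
    return pixel_to_belt(u.mean(), v.mean(), H)
```

No depth measurement is needed here because the plane itself supplies the missing coordinate, which is the kind of prior knowledge that lets 2D images suffice.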

Alternatively, a 2D tool, such as blob analysis that identifies regions of interest based on intensity or depth, may be used to reduce the size of the 3D point cloud. This approach minimizes the amount of 3D data and processing needed.
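The blob-based reduction can be sketched with an organized point cloud, in which each pixel of the 2D image has a matching XYZ entry. The sketch thresholds the image, keeps the largest 4-connected region, and returns only the 3D points under it, so later 3D matching works on a fraction of the data. It is an illustration under those assumptions, not HALCON’s blob analysis; the threshold and function names are hypothetical, and a plain breadth-first flood fill stands in for an optimized connected-component labeler.

```python
from collections import deque

import numpy as np


def largest_blob(mask):
    """Return a boolean mask of the largest 4-connected True region in `mask`."""
    h, w = mask.shape
    seen = np.zeros((h, w), dtype=bool)
    best = np.zeros((h, w), dtype=bool)
    best_size = 0
    for r0 in range(h):
        for c0 in range(w):
            if not mask[r0, c0] or seen[r0, c0]:
                continue
            # Breadth-first flood fill of the region containing (r0, c0).
            region = []
            queue = deque([(r0, c0)])
            seen[r0, c0] = True
            while queue:
                r, c = queue.popleft()
                region.append((r, c))
                for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                    rr, cc = r + dr, c + dc
                    if 0 <= rr < h and 0 <= cc < w and mask[rr, cc] and not seen[rr, cc]:
                        seen[rr, cc] = True
                        queue.append((rr, cc))
            if len(region) > best_size:
                best_size = len(region)
                best[:] = False
                rs, cs = zip(*region)
                best[list(rs), list(cs)] = True
    return best


def crop_cloud(organized_xyz, image, threshold):
    """Keep only the 3D points under the largest blob where image >= threshold.

    organized_xyz has shape (H, W, 3) and is pixel-aligned with the (H, W) image,
    which may hold intensity or depth values.
    """
    blob = largest_blob(image >= threshold)
    return organized_xyz[blob]
```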

Beisiegel said lighting is critical, as are the 3D imaging hardware capabilities and the resulting point cloud. “The most important thing is that you have enough information in the point cloud available. The more information in the point cloud, the better the results.”

She said, however, that every vision solution must make trade-offs among speed, accuracy, and robustness. In some bin-picking tasks, such as fulfilling customer orders, no pick can be missed. Because achieving 100% performance is difficult, the more cost-effective solution may be a less-than-perfect bin pick followed by an inspection to verify the accuracy of the process.
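The trade-off between an imperfect pick and a downstream inspection can be quantified with a simple probability model. The sketch assumes independent attempts with a fixed success rate and a perfect inspection step that triggers a retry after any failure; both are idealizations, and the numbers below are hypothetical rather than figures from MVTec.

```python
def pick_with_inspection(p_success, max_attempts):
    """Model an imperfect pick followed by inspection and retry.

    Assumes each attempt succeeds independently with probability p_success and
    that inspection always catches a wrong or missed pick.
    Returns (probability the order is filled within max_attempts,
             expected number of attempts actually made).
    """
    q = 1.0 - p_success
    p_filled = 1.0 - q ** max_attempts
    # Attempt k is made only if the first k - 1 attempts all failed.
    expected_attempts = sum(q ** (k - 1) for k in range(1, max_attempts + 1))
    return p_filled, expected_attempts
```

With a 90% per-attempt success rate and up to three attempts, about 99.9% of orders are filled correctly at an average of 1.11 attempts per order, which can be cheaper than engineering the vision system itself toward perfection.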

In the end, the right balance between speed, accuracy, and the robustness of the vision solution, and the choice between 2D and 3D methods, will be determined by the details of the bin-picking task itself. Beisiegel summed up the situation when she said, “It depends on the application and the requirements of the application.”