Whether powered by time-of-flight, structured light, or stereoscopic cameras, 3D-imaging technology is making space for itself in industrial and other applications.

MICHAEL EISENSTEIN, SCIENCE WRITER

From time to time, we all find ourselves forced to rummage through the cluttered jumble of our home “junk drawer” to dig out a screwdriver, battery, paper clip, or other much-needed object. For humans, this is merely cause for fleeting irritation, but for robots working on a factory floor, this same problem poses a major technological challenge.

Courtesy of iStock.com/Maksim Tkachenko.

David Dechow, principal vision systems architect at Integro Technologies, said many manufacturers working with automation have struggled with this bin-picking problem on their assembly lines. “It’s a matter of knowing how to segment and identify an individual object and then find it, even though it may be randomly oriented with a bunch of other objects.” But thanks to decades of advancements in 3D-imaging technologies, this hurdle is no longer insurmountable.

Indeed, 3D imaging is now enabling unprecedented levels of automation on a number of fronts, including self-driving vehicles and the oversight and quality control management of complex industrial workflows. “All of these robots need ‘eyes,’” said Anatoly Sherman, head of product management and applications engineering at SICK AG in Waldkirch, Germany. “And in many cases it’s not enough to have a one-layer 2D scanner; they need to perceive the world as a human sees, in 3D, because then they can do their job much more efficiently.”

Many technologies are available for achieving this goal, and it can be disorienting to figure out which is the best fit for a given application. A key part of Dechow’s job is helping industry clients to navigate this labyrinth and designing an integrated machine vision system that best suits their needs. “The biggest challenge is in constraining the application,” he said. Once the application is constrained, selecting the right 3D-imaging solution becomes more straightforward, because each technology has evolved with its own distinctive combination of strengths and weaknesses for various scenarios.

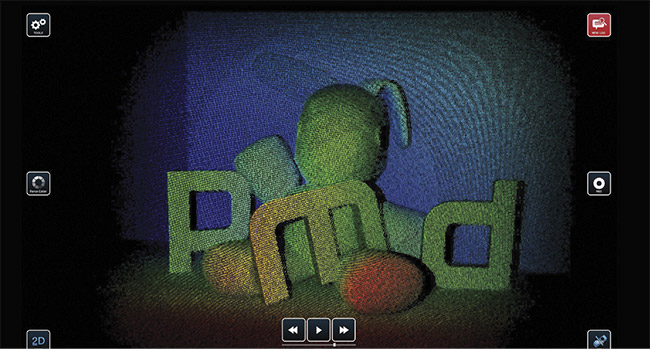

Time-of-flight cameras, such as the systems developed by pmdtechnologies AG, rely on sensor measurements of reflected infrared light to reconstruct entire 3D scenes even in low-light settings. Courtesy of pmdtechnologies AG.

Time-of-flight (TOF) imaging, one of the more straightforward 3D-imaging approaches, essentially uses light in the same way that bats use sound for echolocation. These cameras typically couple an infrared light source used to illuminate the area in front of them with a sensor that can specifically detect and interpret the reflected light as it bounces back from surfaces situated at various distances from the camera.
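The echolocation analogy comes down to simple arithmetic: light travels to the surface and back, so the distance is half the round-trip path. The sketch below illustrates that relationship only. The function names are hypothetical, and it assumes an idealized direct measurement of the round-trip time; many commercial TOF sensors infer the delay indirectly, for example from the phase shift of modulated light.

```python
# Idealized time-of-flight ranging (illustrative sketch, not a vendor API).

# Speed of light in vacuum, in meters per second; air is close enough here.
SPEED_OF_LIGHT = 299_792_458.0


def tof_distance(round_trip_seconds: float) -> float:
    """Distance to a surface, given the round-trip travel time of the light."""
    # The light covers the camera-to-surface distance twice, hence the halving.
    return SPEED_OF_LIGHT * round_trip_seconds / 2.0


def round_trip_time(distance_m: float) -> float:
    """Round-trip travel time for a surface at the given distance."""
    return 2.0 * distance_m / SPEED_OF_LIGHT


if __name__ == "__main__":
    # Example distances (assumed values chosen for illustration).
    for d in (1.0, 10.0, 50.0):
        print(f"{d:5.1f} m -> round trip of {round_trip_time(d) * 1e9:.2f} ns")
```

A surface 10 m away returns light after roughly 67 ns, which gives a sense of the timing precision such sensors have to work with.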

The underlying principle has been around for decades. Germany-based pmdtechnologies AG, for one, got its start in the TOF space in 2005 with a one-dimensional range-finding instrument based on this principle. But Bernd Buxbaum, pmd’s co-founder and CEO, said the same principle is easily scalable to imaging in three dimensions. “We use the same technology for 3D cameras on autonomous guided vehicles [AGVs] driving around in full sunlight, detecting objects at up to 50 or 60 m away.”

SICK’s Sherman said 3D TOF has blossomed as an industrial imaging solution over the past decade. SICK offers several such devices, and other companies — such as Basler, LUCID Vision Labs, and Odos Imaging — have made TOF the main focus of their 3D-imaging portfolio.

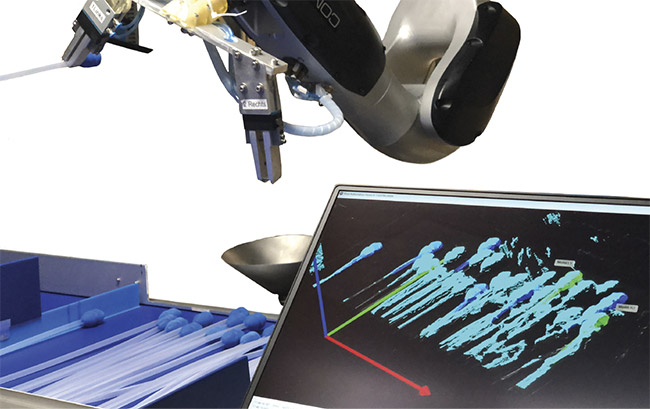

Bin-picking tasks, in which robotic arms use machine vision systems to identify, select, and extract individual objects from mixed contents, can still pose a serious challenge for 3D-imaging technologies. Courtesy of Imaging Development Systems (IDS) GmbH.

One of the technology’s key advantages is speed. “It’s nearly real-time and can get full streaming at the resolution for which it’s specified,” Dechow said.

Also, because the technology relies on active illumination, it can routinely be used indoors in low-light conditions to deliver VGA (video graphics array) resolution (640 × 480 pixels) at ranges of up to 10 m, though Microsoft’s latest-generation Azure Kinect DK (developer kit) leads the TOF pack with megapixel resolution.

Sherman said SICK’s Visionary-T cameras are often used to assist AGV guidance or to measure dimensions of freight in factories or warehouses. But TOF technology is also effective for imaging at longer ranges in outdoor settings — if one is willing to accept reduced spatial resolution, and the sunlight is not too bright. This trade-off is acceptable for the obstacle-avoidance systems that AGVs use to navigate, for example.

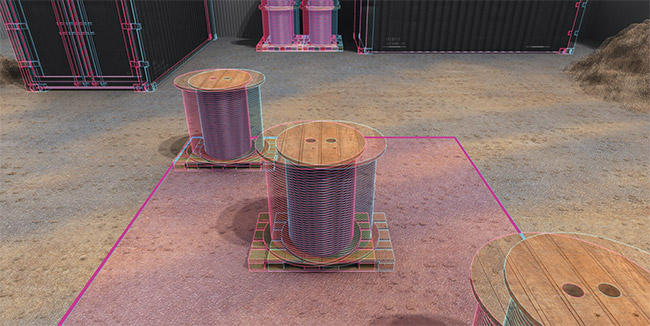

Passive stereo vision systems, such as SICK’s Visionary-B family of instruments, are commonly used to assist in obstacle identification and avoidance in outdoor industrial settings. Courtesy of SICK AG.

Buxbaum said pmd’s cameras further benefit from an efficient filtering strategy. “We are able to differentiate photons coming from the sun from photons we are transmitting, and that gives us very stable performance.”

Finding patterns

Where TOF often falls short is in its ability to deliver high depth resolution, according to Dechow. “I haven’t seen a TOF camera yet that gets much better than several millimeters of accuracy in the z dimension,” he said. The approach can also struggle with certain kinds of surfaces, such as materials that are highly light-absorbent or that generate interreflection of the infrared light. Both scenarios can confound the sensor.

The solution to such challenges may involve changing the active illumination method, which can offer better performance when fine details are essential to the imaging effort. Several companies, such as Cognex and Zivid, are employing strategies based on structured light, in which a complex pattern of photons is projected onto a surface or object. The reflected photons are then detected by a sensor situated some distance from the projector, enabling a digital 3D reconstruction of the illuminated surface’s topography based on how the surface warps and distorts the projected pattern.

Structured light systems require multiple projections to capture a full scene and are thus far more computationally demanding and slower than TOF cameras, typically capturing only a few frames per second versus the 30 to 50 fps that TOF can achieve. However, the technology excels at imaging fine details. “It provides an unprecedented level of accuracy,” said Tomas Kovacovsky, CTO and founder of Slovakia-based Photoneo SRO. Structured light methods routinely achieve submillimeter resolution in all three dimensions, making them a good fit for tough tasks such as bin picking and robot guidance.
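To see why structured light can need several projections per scene, consider one widely used textbook scheme, binary Gray-code stripes. This is an assumption for illustration, not a description of any named vendor's method. Each projected pattern contributes one bit per camera pixel, and the bright/dark sequence a pixel records identifies which projector column lit it. All function names below are hypothetical.

```python
from typing import List


def patterns_needed(projector_columns: int) -> int:
    """Number of binary stripe patterns needed to label every projector column."""
    return (projector_columns - 1).bit_length()


def gray_encode(n: int) -> int:
    """Binary-reflected Gray code: neighboring columns differ in exactly one bit."""
    return n ^ (n >> 1)


def gray_decode(g: int) -> int:
    """Invert gray_encode by XOR-ing together all right shifts of the code."""
    n = 0
    while g:
        n ^= g
        g >>= 1
    return n


def column_bits(column: int, n_patterns: int) -> List[int]:
    """Bright (1) / dark (0) sequence seen by a pixel lit by this column."""
    g = gray_encode(column)
    # Most significant bit first: one entry per projected pattern.
    return [(g >> k) & 1 for k in range(n_patterns - 1, -1, -1)]


def decode_column(bits: List[int]) -> int:
    """Recover the projector column from the observed bright/dark sequence."""
    g = 0
    for b in bits:
        g = (g << 1) | b
    return gray_decode(g)


if __name__ == "__main__":
    n = patterns_needed(1024)  # 1024 columns is an assumed projector width
    print(n, decode_column(column_bits(600, n)))
```

Once a pixel's projector column is known, depth follows from triangulation between the projector and the camera. The need to capture the whole sequence before decoding is one reason a scene generally has to hold still during a conventional scan.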

In 2018, Photoneo launched a system called the MotionCam-3D that considerably ramped up the imaging speed that could be achieved with structured light. The key is a fully programmable sensor, in which each pixel can be trained to respond to a specific light pattern. As a consequence, Photoneo’s system can project, detect, and analyze multiple patterns in parallel, to allow resolution of roughly half a megapixel at speeds up to 20 fps. This is a powerful advantage for robot-based inspection processes in which structured light would normally require routine pauses. “You don’t need to be stationary along the path if you can also scan while in motion,” Kovacovsky said.

Moving in stereo

The human visual system relies on a sophisticated process of data integration based on the triangulation between slightly mismatched images received by each eye. A number of imaging companies, including SICK, Tordivel, VISIO NERF, and Imaging Development Systems (IDS), have developed strategies that mimic this stereoscopic approach to machine vision.

Simpler implementations based on so-called passive stereo vision use a computer to reconstruct a 3D scene from image data received by two separate cameras. SICK’s Sherman said such systems are relatively straightforward to implement and that they function reliably under a wide range of operating conditions. SICK’s passive stereo-based Visionary-B imaging gear is most often used in mining, construction, and other outdoor settings. “You need really high robustness in terms of temperatures that can range from −30 or −40 °C up to 70-plus °C, as well as shock robustness,” he said. These systems depend on external illumination to function and are thus best suited for applications that involve abundant natural or artificial light.
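The reconstruction a two-camera system performs rests on triangulation. For an idealized, rectified camera pair, depth equals focal length times baseline divided by disparity, where disparity is the horizontal shift of a feature between the two images. The sketch below assumes that textbook pinhole model; the function names and the numeric values are hypothetical and do not describe any particular product.

```python
def depth_from_disparity(focal_px: float, baseline_m: float,
                         disparity_px: float) -> float:
    """Depth in meters of a point seen by a rectified stereo pair.

    focal_px:     focal length expressed in pixels
    baseline_m:   distance between the two camera centers, in meters
    disparity_px: horizontal shift of the point between the images, in pixels
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a point in front of the cameras")
    return focal_px * baseline_m / disparity_px


def depth_step(focal_px: float, baseline_m: float, depth_m: float,
               disparity_step_px: float = 1.0) -> float:
    """Approximate depth change caused by a small disparity change at a given depth."""
    # Differentiating z = f * B / d gives |dz| = z**2 / (f * B) * |dd|.
    return depth_m ** 2 * disparity_step_px / (focal_px * baseline_m)


if __name__ == "__main__":
    f_px, baseline = 1000.0, 0.1  # assumed example values
    for disparity in (100.0, 10.0):
        z = depth_from_disparity(f_px, baseline, disparity)
        step = depth_step(f_px, baseline, z)
        print(f"disparity {disparity:5.1f} px -> depth {z:5.2f} m, "
              f"one-pixel step of about {step:.2f} m")
```

Because the depth step grows with the square of the distance, a matching error that is negligible up close becomes large far from the cameras, which is the general reason stereo precision falls off with range.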

Martin Hennemann, product manager for 3D imaging at IDS, pointed out that passive stereo imaging struggles with certain kinds of surface conditions. “It’s actually very difficult if they look at blank and homogeneous raw data images, like white office walls or even difficult surfaces with reflections, like oil-covered objects,” he said. This can represent a major limitation for use in interior industrial settings.

As a solution, IDS has integrated structured light into its Ensenso family of stereo imaging systems to create an active stereo vision approach. The introduction of a light pattern creates an additional layer of visual information that the sensor can use to reconstruct the 3D scene. Hennemann said users have a range of options in terms of how these imaging systems are configured, including the ability to generate specific structured light patterns that enable higher-precision imaging for specific tasks. “Our Ensenso cameras have advantages, in that large volumes and motion can be covered,” he said. “But if you have steady scenes, you can use more images to get better accuracy, or scale up the resolution.”

Ensenso imaging instruments developed by IDS combine stereo vision with patterned light to achieve high-resolution 3D images. Courtesy of IDS.

SICK also offers active stereo solutions as part of its Visionary-S camera line, and these instruments have proved to be popular in a variety of industrial settings because of their discriminatory power at close range. “We use these cameras for logistical automation tasks like depalletization or box picking,” Sherman said. “If you have a pallet with boxes that are tightly stacked, you don’t have any information based on depth, but you can distinguish the boxes by contrast.” Although active stereo cameras can generally achieve submillimeter 3D resolution up close, their performance drops off sharply at distances beyond several meters.

Room to grow

Many companies that entered the 3D imaging business in its early days see a field on the cusp of going mainstream. “It’s kind of widely used, but still not anywhere near its full market potential in the manufacturing world,” said Photoneo’s Kovacovsky. That said, the number of companies in the sector continues to increase, and global technology giants such as Sony, Panasonic, and Microsoft are now facing stiffening competition from a rising number of smaller companies and startups. Although much of the booming growth is driven by industrial applications, there are also opportunities for 3D imaging to make a splash in the consumer space. “Our technology was used in several smartphones that we developed jointly with Google,” said pmd’s Buxbaum. “And we have a German OEM car company that is using our technology for interior sensing.” In the longer run, he added, 3D imaging will help monitor motorists as more vehicles incorporate advanced driver awareness systems.

In parallel, the success of 3D imaging in solving industrial problems means that the technology is now moving on to even bigger challenges. For example, Dechow said, solutions for bin picking are still very much a work in progress while businesses attempt to apply automation to increasingly complex manufacturing and logistics processes. “I have a couple of requests on the docket right now for picking any one of 10,000 SKUs [stock-keeping units] out of a bin,” he said. “In the bigger logistics companies, it becomes a question of ‘Can you pick any one of 2 million SKUs and, by the way, they might be totally mixed.’” The COVID-19 pandemic has injected additional urgency into this problem, with homebound consumers taxing the capabilities of online retailers.

As the market for 3D imaging grows, the spectrum of technological offerings has remained remarkably diverse, with TOF, structured light, and stereo vision platforms steadily evolving distinct solutions for specific 3D imaging scenarios. For now, Dechow believes this rich ecosystem will persist. “I think the diversity of offerings is necessary,” he said. “There is crossover, so maybe we’ll see some consolidation, but there seems to be enough of a demand for all of it that I don’t think everything will merge together.”