The Merits of Processing Data at the Edge Versus in the Cloud

An increasing number of use cases will incorporate AI-powered cameras and selective cloud processing.

SEBASTIEN DIGNARD, iENSO

Embedded vision, whether it involves the ability to capture still images or video, is the biggest source of data available today, as more and more devices become part of the Internet of Things. In an IoT world, vision is no longer just about the best picture. It is also about how data can be mined for insights that aid in decision-making. With a modern embedded vision system, a product can not only see, but can also process and understand what it sees.

The use case scenario for this kind of intelligence could be health- and wellness-related, such as a parent remotely monitoring her newborn’s vital signs. It may take the form of a smart refrigerator signaling when to restock groceries or an oven capable of helping with meal preparation. Or it could provide added value across an entire supply chain, such as a farmer taking advantage of the latest precision farming technologies to know when to irrigate or when to order and apply fertilizer or pesticide to boost crop yield. Law enforcement could use the same technology to ensure public safety at a sporting event or airport.

But what is the best way to process digital images to unlock the insight and value of their data? And where should that processing take place?

Data can be processed either at the network edge or in the cloud. Each option has its pros and cons.

Data-driven insight from the cloud doesn’t come free. Every image processed by a typical cloud agent has associated costs that include bandwidth, connection, and cellular data fees. These costs are hard to contain when everything must be sent to the cloud to effectively process all the data. Cameras that are constantly streaming without any local preprocessing capability must upload every second of footage to avoid missing an important moment.

With intelligence and security added at the network edge, the camera itself can decide whether any activity is taking place that warrants action. It can then prioritize when to stream and what to stream to the cloud. Even if all image data must be pushed to the cloud, the edge approach can still be used to prioritize bandwidth usage and improve searchability. Adding some measure of intelligence to the device offers other benefits as well, including:

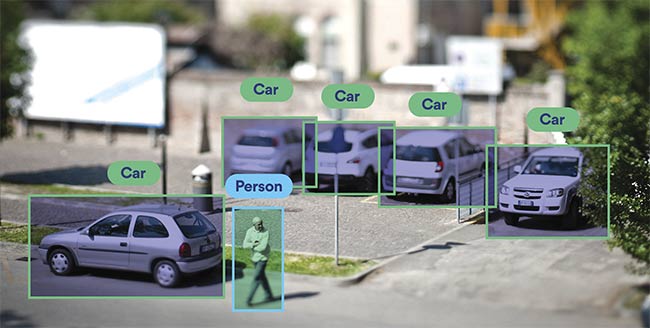

A surveillance camera differentiates between automobiles and people. Courtesy of iENSO.

• Security. Most of the data — which could be personal, private, or otherwise of a sensitive nature — never needs to leave the device. An alert can be sent to the user when the camera identifies information of interest. In some cases, an alert is enough, without having to send an image to the cloud at all.

• Latency and offline applications. Processing at the edge also reduces latency and makes it possible for the device to deliver a desired function even while offline. Decisions can still be made, immediately, with no internet connection and no external power. In some security applications, this capability is very important.

Consider a scenario in which a secure facility is attacked and the power is turned off. The Wi-Fi router isn’t working. This means that cloud-based systems are not functioning. But analytics processed on local edge devices that have self-contained power sources can continue to function. When the internet comes back, all of the processed data will then be pushed to the cloud as necessary.
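The store-and-forward behavior in this scenario can be sketched roughly as follows. This is a minimal illustration under stated assumptions, not a description of any vendor's firmware: the `EdgeEventBuffer` class, its `capacity` parameter, and the `fake_upload` callback are hypothetical names, and a real device would likely persist the queue to flash storage rather than hold it in memory.

```python
from collections import deque
from typing import Callable, Deque, Dict, List


class EdgeEventBuffer:
    """Hypothetical store-and-forward queue for locally processed events."""

    def __init__(self, capacity: int = 1000) -> None:
        # Bounded queue: when full, the oldest event is discarded first.
        self._pending: Deque[Dict] = deque(maxlen=capacity)

    def record(self, event: Dict) -> None:
        """Store an event produced by on-device analytics."""
        self._pending.append(event)

    def flush(self, online: bool, upload: Callable[[Dict], bool]) -> int:
        """Upload queued events in order while connected; return count sent."""
        sent = 0
        while online and self._pending:
            if not upload(self._pending[0]):
                break  # Upload failed: keep the event and retry later.
            self._pending.popleft()
            sent += 1
        return sent

    def __len__(self) -> int:
        return len(self._pending)


received: List[Dict] = []


def fake_upload(event: Dict) -> bool:
    """Stand-in for a cloud upload call (assumed to report success)."""
    received.append(event)
    return True


buffer = EdgeEventBuffer()
buffer.record({"label": "person", "t": 1})   # analytics keep running offline
buffer.record({"label": "vehicle", "t": 2})
sent_offline = buffer.flush(online=False, upload=fake_upload)  # nothing leaves
sent_online = buffer.flush(online=True, upload=fake_upload)    # backlog drains
```

The bounded queue is one possible design choice: it caps memory use on a constrained device at the cost of losing the oldest events during a very long outage.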

However, this doesn’t mean that all the processing power should be placed on the camera. The use of onboard chipsets may push the upfront retail price of a device too high for the consumer market. Onboard batteries may not fit the design footprint, or a power plug may not be desired.

At the same time, the cloud remains an important aggregator of data that can be accessed centrally from anywhere, with any remote device. The cloud enables bulk management of multiple devices and aggregation of selected data from any desired combination of devices.

With all these performance, cost, and efficiency considerations in mind, an adaptive hybrid of both edge and cloud is the ideal solution.
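One way to picture the adaptive split is as a per-frame decision, made on the camera, about what (if anything) leaves the device. The sketch below assumes an on-device detector that returns labels with confidence scores. The labels of interest, the two thresholds, and the `Action` names are illustrative assumptions, not values from any real product.

```python
from enum import Enum
from typing import List, Tuple


class Action(Enum):
    DROP = "drop"              # nothing leaves the device
    ALERT_ONLY = "alert_only"  # send a text alert, no image
    STREAM = "stream"          # stream video to the cloud


# Hypothetical labels that warrant a response, chosen for illustration only.
LABELS_OF_INTEREST = {"person", "vehicle"}


def decide(detections: List[Tuple[str, float]],
           alert_threshold: float = 0.5,
           stream_threshold: float = 0.8) -> Action:
    """Pick an upload action from (label, confidence) pairs for one frame."""
    scores = [conf for label, conf in detections if label in LABELS_OF_INTEREST]
    if not scores:
        return Action.DROP
    best = max(scores)
    if best >= stream_threshold:
        return Action.STREAM
    if best >= alert_threshold:
        return Action.ALERT_ONLY
    return Action.DROP


quiet_frame = decide([("cat", 0.99)])
uncertain_frame = decide([("person", 0.6)])
confident_frame = decide([("person", 0.9), ("vehicle", 0.3)])
```

Under these assumed thresholds, a frame with no object of interest stays on the device, a moderately confident detection produces only an alert, and a confident detection triggers streaming.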

The smart baby monitor

Let’s explore this further by looking at the example of a company that wanted to bring an intelligent baby monitor to market.

New parents always fear the dangers that their sleeping children could face. Sudden infant death syndrome (SIDS), also known as cot or crib death, involves the sudden, unexplained death of a child before he or she reaches a year old. SIDS is the leading cause of infant mortality in the Western world.

A baby monitor captures vision data. Courtesy of iENSO.

The company set out to create the first AI-powered baby-wellness monitor by taking a “dumb” and inexpensive, off-the-shelf digital camera and coupling it with proprietary software and public cloud processing. Custom algorithms used subpixel feature learning to distinguish biosignals from background noise, even in a darkened room.

Parents could remotely monitor and be alerted to any movement or sign that their child was about to wake, as well as to changes in vitals such as breathing, heart rate, and body temperature. The device also included two-way audio.

For the device’s intended consumer market, and to create a platform that could scale with new features and capabilities, the company needed to fulfill specific requirements. These included low hardware and firmware development costs, low nonrecurring engineering and production costs, low end-to-end latency for video streaming and analytics, a low upfront retail device purchase cost for the consumer, and the ability to scale and offer new analytics services easily (with various tiers of subscription-based services).

How cloud-only fell short

Creating a device that completely relied on the cloud for its processing, analytics, and outputs — while delivering the peace of mind that parents wanted — soon proved to be unfeasible. For one, the necessary data compression caused issues with data utility and accuracy. To accurately monitor a child’s vital signs, the rawer the image, the better. In the device’s first iteration, it consumed a large amount of bandwidth. A single camera would consume 500 to 1000 GB of data per month to transport motion JPEG (MJPEG) video. In many cases, this amount exceeded the monthly upload limits of the typical residential internet account. Additionally, the company had no end-to-end control over video streaming or video encoding to manage any connectivity issues. Finally, a cloud-only approach limited the company’s ability to offer a scalable monthly recurring revenue business model.
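To put the monthly figures in perspective, they can be converted into an average sustained upload rate. The short calculation below is only a back-of-the-envelope sketch: it assumes a 30-day month, decimal units (1 GB = 10^9 bytes), and continuous streaming, none of which is stated in the case study.

```python
SECONDS_PER_MONTH = 30 * 24 * 60 * 60  # assumes a 30-day month


def average_mbps(gb_per_month: float) -> float:
    """Average sustained upload rate in Mbit/s for a monthly data volume.

    Assumes decimal units (1 GB = 10**9 bytes) and continuous streaming.
    """
    bits = gb_per_month * 1e9 * 8
    return bits / SECONDS_PER_MONTH / 1e6


for volume_gb in (500, 1000):
    print(f"{volume_gb} GB/month is about {average_mbps(volume_gb):.2f} Mbit/s")
# prints:
# 500 GB/month is about 1.54 Mbit/s
# 1000 GB/month is about 3.09 Mbit/s
```

Under these assumptions, a single camera would occupy roughly 1.5 to 3.1 Mbit/s of upstream capacity around the clock.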

A hybrid model

The company considered putting all the storage and compute power on the device itself, but this would have made the upfront cost of the device too high for the intended consumer market. The key to addressing these issues was to divide the load, by having an adaptive, hybrid model that blended edge computing with cloud computing.

A smart fridge detects product levels and creates shopping lists. Courtesy of iENSO.

The baby monitor company could make use of this type of hybrid model without inflating the upfront retail price of the device because the chip market has undergone a paradigm shift in recent years. Almost every system on a chip (SOC) that is released today for cameras and vision systems has a GPU on board. This makes analytics processing in AI-based systems easy, with a price tag that still works for a cost-conscious consumer.

This design resulted in a more adaptive system that could offload data and processing between edge and cloud, on demand. As a result, the company reduced bandwidth consumption to around 150 GB per camera per month and accessed only the raw data that offered the greatest fidelity, preprocessing it to extract the features that are critical for accurate vital signs analysis. Additional benefits included improving data security, gaining end-to-end control of the data pipeline, and retaining full protection of intellectual property, since the device chip components are off-the-shelf commodity items and the company’s proprietary algorithms reside only in the cloud.
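For scale, the bandwidth figures quoted for the cloud-only and hybrid designs imply the following reduction. This is simple arithmetic on the article's approximate numbers (500 to 1000 GB before, around 150 GB after); the function name is arbitrary.

```python
def reduction_percent(before_gb: float, after_gb: float) -> float:
    """Percentage drop in monthly data volume."""
    return (before_gb - after_gb) / before_gb * 100.0


HYBRID_GB = 150  # approximate hybrid figure per camera per month

for cloud_only_gb in (500, 1000):
    saved = reduction_percent(cloud_only_gb, HYBRID_GB)
    print(f"{cloud_only_gb} GB -> {HYBRID_GB} GB: {saved:.0f}% less data")
# prints:
# 500 GB -> 150 GB: 70% less data
# 1000 GB -> 150 GB: 85% less data
```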

With an all-cloud option, cloud computing costs per camera were in the range of $73 per month. When the company switched to a hybrid of edge and cloud, costs were slashed to pennies per month.

A hybrid model combined the best of both worlds. The company could build a recurring revenue stream that combined the upfront purchase of an affordable device with monthly subscription options at various price points.

Processors at the edge

For integrators or developers, the first step is to right-size the edge processor for the job it must perform within the device. Having more horsepower than is required by the application incurs unnecessary costs and power consumption. For this reason, it is important to bring the processing architecture into the product design early on. If the design team gets too far into designing the algorithm and the business model before the processing architecture is decided upon, the whole product roadmap may end up missing out on possible efficiencies. This will affect product cost and/or service to the end user.

Searching images and video is notoriously inefficient, expensive, and difficult. Without processing at the edge, the only option is to upload all the video to the cloud and then do pixel matching or comparison. As was demonstrated with the baby monitor example, when processing occurs at the edge, data doesn’t necessarily have to be sent to the cloud at all.

For an IoT world, a hybrid model that blends the edge (always on) with the cloud (available on demand) is the way of the future, and embedded vision companies are creating platforms that leverage their expertise so that IoT product companies can focus on their core expertise and markets.

The supply chain for embedded vision has reached the tipping point at which new SOC designs combine high processing power with low power consumption, more onboard storage, and a price point that is suitable for a consumer market.

A drone detects crop conditions using a vision camera. Courtesy of iENSO.

This situation creates the conditions needed to bring to market affordable IoT devices that have a revenue model that couples the upfront sale of a device with a sliding scale of subscription-based service levels.

With a hybrid model, device makers also benefit from increased data security, reduced reliance on expensive cloud services, greater IP protection, and privacy assurance for consumers.

Meet the author

Sebastien Dignard leads iENSO’s global activities, focusing on the provision of effective solutions in embedded and AI-enabled imaging. He has an extensive background in the imaging and wireless industry. The former CEO and president at FRAMOS — where he was recognized for achieving 4000% growth — and a recipient of the prestigious 40 Under 40 Award, Dignard is respected for his ability to balance strategy development and execution; www.ienso.com.

How Data Is Preprocessed at the Edge for Selective Upload to the Cloud

Object recognition is a computer vision process for identifying objects in images or videos. Every object has a unique set of attributes that can be extracted from the image or video stream.

The extraction of these attributes “at the edge” implies there is an algorithm running directly on the camera hardware. This computing power can be located on the device SOC (system on a chip) or on an MCU (microcontroller unit), for example in an ARM core, GPU, or similar processing core. The computing power could also be located on a companion processing entity such as an FPGA (field programmable gate array) or an AI accelerator. Considerations include the following:

• The algorithm used for object detection would typically involve a model that has been trained in a deep learning framework to optimize the algorithm’s capabilities. The target of the training is typically low latency, privacy (meaning data stays on the edge device), efficiency or low power consumption, and, of course, accuracy.

• There are many different deep learning architectures that can be used, such as convolutional neural networks (CNNs) or recurrent neural networks (RNNs).

• The use of the trained model to make a prediction while image data is being analyzed on the device (in real time) is called inference. Once the image or video has been analyzed and the relevant data has been inferred and extracted, its attributes can be included as tags in the header or metadata of the video or image files.

• Data can be sent in a small text file to the cloud, accompanied by a low-res thumbnail.

• The data is searchable in the cloud and can be used to filter and drive decisions.

• After users narrow down the items of interest, data is sent from the device to the cloud only if needed.
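The flow described in these points can be sketched in a few lines. This is a hedged illustration only: the `ClipMetadata` fields, the JSON layout, the clip IDs, timestamps, and file names are all made up for the example, and the inference step itself is represented by hard-coded tags rather than a real model.

```python
import json
from dataclasses import asdict, dataclass
from typing import Dict, List


@dataclass
class ClipMetadata:
    """Small text record the camera uploads in place of the full clip."""
    clip_id: str
    timestamp: str      # ISO 8601 capture time
    tags: List[str]     # attributes inferred on the device
    thumbnail: str      # file name of a low-res preview (assumed layout)


def to_payload(meta: ClipMetadata) -> str:
    """Serialize the record as compact JSON for upload."""
    return json.dumps(asdict(meta), separators=(",", ":"))


def search(index: List[Dict], tag: str) -> List[str]:
    """Cloud side: return IDs of clips whose tags include `tag`."""
    return [record["clip_id"] for record in index if tag in record["tags"]]


# Edge side: two clips analyzed locally; only the metadata is uploaded.
uploads = [
    to_payload(ClipMetadata("clip-001", "2024-01-01T08:00:00Z",
                            ["person"], "clip-001_thumb.jpg")),
    to_payload(ClipMetadata("clip-002", "2024-01-01T08:05:00Z",
                            ["vehicle", "person"], "clip-002_thumb.jpg")),
]

# Cloud side: index the small records and filter by tag.
index = [json.loads(payload) for payload in uploads]
wanted = search(index, "vehicle")  # full video is requested only for these
```

Each payload here is on the order of a hundred bytes, which is the point of the approach: the cloud can filter on tags first, and the device sends full-resolution footage only for the clips a user actually selects.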
