Deep neural networks are playing an increasingly important role in machine vision applications.

ADRIEN SANCHEZ, YOLE DÉVELOPPEMENT

Increasing volumes of data required for smart devices are prompting a reevaluation of computing performance, inspired by the human brain’s capabilities for processing and efficiency. Smart devices must respond quickly yet efficiently, and therefore rely on processor cores that optimize energy consumption, memory access, and thermal performance.

Courtesy of www.iStock.com/MF3d.

Central processing units (CPUs) address computing tasks sequentially, whereas deep learning, the artificial intelligence (AI) approach based on neural networks, relies on matrix operations that require parallel processing. That processing is performed by graphics processing units (GPUs), which have hundreds of specialized cores working in parallel.
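
To make the point about matrix operations concrete, here is a minimal Python sketch of one fully connected layer. The ReLU activation and all numbers are assumptions chosen for illustration; the article does not specify a network. Each output neuron depends only on its own row of the weight matrix, which is why the work spreads naturally across many cores.

```python
from concurrent.futures import ThreadPoolExecutor
from itertools import repeat

def neuron_output(weights_row, inputs, bias):
    # One neuron: weighted sum of its inputs plus a bias, followed by ReLU.
    total = sum(w * x for w, x in zip(weights_row, inputs)) + bias
    return max(0.0, total)

def dense_layer(weights, inputs, biases):
    # Each row of `weights` belongs to one output neuron and depends on no other
    # row, so all rows can be evaluated at once. The thread pool only stands in
    # for a GPU's many cores; in CPython it shows the independence, not a speedup.
    with ThreadPoolExecutor() as pool:
        return list(pool.map(neuron_output, weights, repeat(inputs), biases))

# Hypothetical 3-neuron layer with 2 inputs.
weights = [[1.0, 2.0], [-1.0, 0.5], [0.0, 1.0]]
inputs = [3.0, 1.0]
biases = [0.0, 0.0, 0.5]
outputs = dense_layer(weights, inputs, biases)
```

On a GPU the same independence is exploited by assigning rows (or tiles of the matrix) to different cores.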

The deep neural networks powering the explosive growth in AI are energy hungry. As a point of comparison, a neural network in 1988 had ~20,000 neurons; today that figure is 130 billion, and energy consumption has escalated accordingly. This calls for a new type of computing hardware that can process AI algorithms efficiently and overcome the obstacles that limit AI’s continued growth: the physical constraints on continued Moore’s law scaling, memory limitations, and the challenge of thermal performance in densely populated devices.
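
The scale of that jump is easier to grasp as a growth factor. A short calculation using only the two neuron counts quoted above (the variable names are ours):

```python
import math

NEURONS_1988 = 20_000
NEURONS_TODAY = 130_000_000_000

# How many times larger today's networks are, and how many doublings that is.
growth_factor = NEURONS_TODAY / NEURONS_1988
doublings = math.log2(growth_factor)

print(f"{growth_factor:,.0f}x larger, about {doublings:.1f} doublings")
```

That is a 6.5-million-fold increase, or roughly 22 to 23 doublings in network size.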

Inspired by human physiology, neuromorphic computing has the potential to be a disruptor.

Chips based on graph processing, manufactured by companies such as Graphcore and Cerebras, are dedicated to neural networks. This broader family of processors, which includes GPUs, accelerators, neural engines, tensor processing units (TPUs), neural network processors (NNPs), intelligent processing units (IPUs), and vision processing units (VPUs), processes multiple computational vertices and points simultaneously.
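
How these vendors actually schedule work is not described here. As a hedged illustration of the general idea only, a neural network can be treated as a graph of operations, and every vertex whose inputs are ready can be processed at the same time. The sketch below groups vertices into such “wavefronts” with Kahn’s topological sort; the graph itself is a hypothetical two-neuron example.

```python
from collections import defaultdict

def parallel_levels(edges, vertices):
    # Kahn's algorithm, grouping vertices into wavefronts: every vertex in a
    # wavefront has all of its inputs ready, so the whole group could be
    # processed simultaneously.
    indegree = {v: 0 for v in vertices}
    successors = defaultdict(list)
    for src, dst in edges:
        successors[src].append(dst)
        indegree[dst] += 1
    level = [v for v in vertices if indegree[v] == 0]
    levels = []
    while level:
        levels.append(level)
        next_level = []
        for v in level:
            for w in successors[v]:
                indegree[w] -= 1
                if indegree[w] == 0:
                    next_level.append(w)
        level = next_level
    return levels

# Hypothetical graph: input x and weights w1, w2 feed hidden vertices h1, h2,
# which both feed output y.
vertices = ["x", "w1", "w2", "h1", "h2", "y"]
edges = [("x", "h1"), ("w1", "h1"), ("x", "h2"), ("w2", "h2"), ("h1", "y"), ("h2", "y")]
levels = parallel_levels(edges, vertices)
```

Here `h1` and `h2` land in the same wavefront, so hardware with enough cores could compute both at once.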

Mimicking the brain

In comparison, the human brain has 100 billion neurons and 100 to 1000 synapses per neuron, for a total of 10 trillion to 100 trillion synapses. Yet its volume is equivalent to a 2-L bottle of water. The brain performs 1 billion calculations per second using just 20 W of power. Achieving this level of computation with today’s silicon chips would require 1.5 million processors and 1.6 PB of high-speed memory. The hardware would occupy two buildings, and it would take 4.68 years and 10 MWh to simulate the equivalent of one day’s brain activity.
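
The brain figures can be checked with simple arithmetic. Multiplying the stated per-neuron range by 100 billion neurons gives 10 to 100 trillion synapses (reaching a quadrillion would take about 10,000 synapses per neuron), and dividing the calculation rate by the power budget gives the brain’s efficiency in calculations per joule. Only the article’s own numbers are used:

```python
NEURONS = 100e9
SYNAPSES_PER_NEURON = (100, 1000)   # low and high end of the stated range
CALCS_PER_SECOND = 1e9
POWER_W = 20.0

# Total synapses at each end of the per-neuron range.
synapse_range = tuple(NEURONS * s for s in SYNAPSES_PER_NEURON)

# Calculations per second divided by watts (joules per second) = calculations per joule.
calcs_per_joule = CALCS_PER_SECOND / POWER_W

print(f"synapses: {synapse_range[0]:.0e} to {synapse_range[1]:.0e}")
print(f"efficiency: {calcs_per_joule:.0e} calculations per joule")
```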

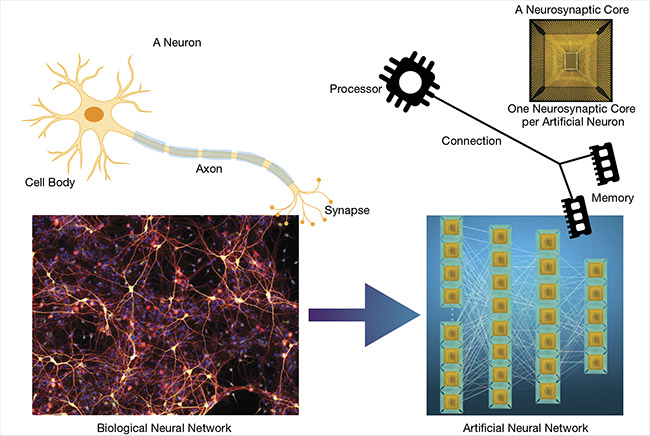

The model for neuromorphic computing uses silicon to create a network of neural synaptic cores or processors.

Each core integrates memory, computation, and communication, and communicates with other cores on the same chip via an on-chip, event-driven network. Instead of running each computation at a fixed, regular time, a computation is made only when, and if, the artificial neuron is activated by an incoming signal. This clockless approach, the basis of spiking neural networks, does not follow the pace of a chip clock and is suited to non-von Neumann chips. The result is a significant, rapid drop in energy consumption, to just milliwatts.
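
The event-driven principle can be sketched in a few lines of Python. This minimal example assumes a leaky integrate-and-fire neuron, a common spiking-neuron model; the article does not say which model neuromorphic chips implement, and the class name and parameter values are hypothetical. The neuron does work only when an input spike arrives, and the leak accumulated since the previous event is applied in a single step rather than on every clock tick.

```python
import math
from dataclasses import dataclass

@dataclass
class EventDrivenNeuron:
    # Hypothetical leaky integrate-and-fire parameters, chosen for illustration.
    threshold: float = 1.0
    tau: float = 10.0        # membrane time constant, in ms
    potential: float = 0.0
    last_update: float = 0.0
    updates: int = 0         # counts how often any work was done

    def on_spike(self, t, weight):
        # Called only when an input spike arrives at time t (ms).
        self.updates += 1
        # Apply the exponential leak for the whole silent interval at once.
        self.potential *= math.exp(-(t - self.last_update) / self.tau)
        self.last_update = t
        self.potential += weight
        if self.potential >= self.threshold:
            self.potential = 0.0   # reset after firing
            return True            # emit an output spike
        return False

neuron = EventDrivenNeuron()
spikes_in = [(1.0, 0.6), (2.0, 0.6), (50.0, 0.3)]   # (time in ms, synaptic weight)
fired = [t for t, w in spikes_in if neuron.on_spike(t, w)]
```

Over 50 ms the neuron is updated three times, once per input spike, whereas a clocked simulation with a 1-ms step would update it 50 times whether or not anything happened.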

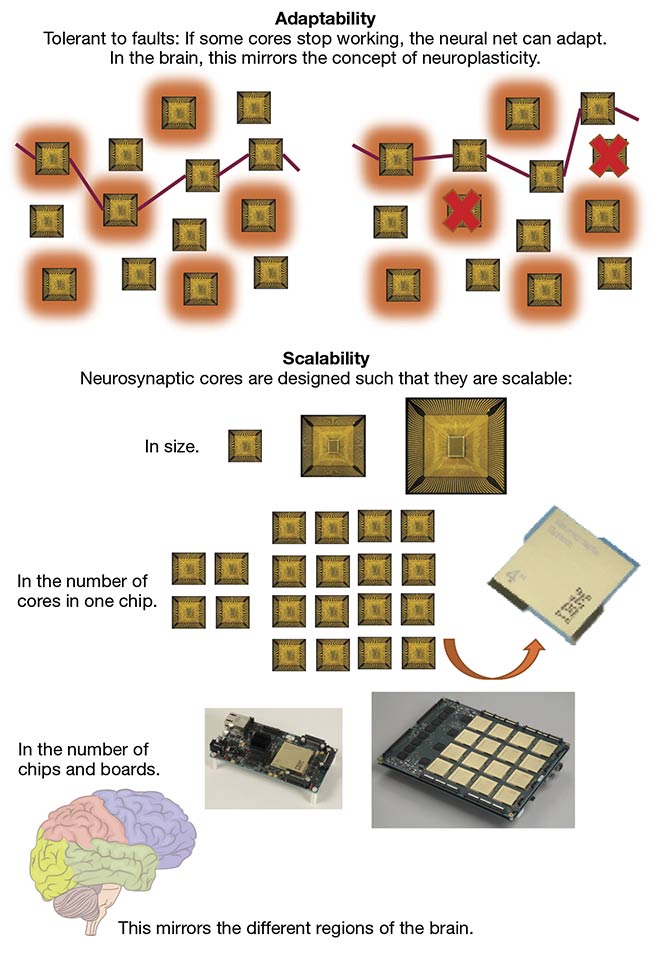

The human brain’s flexibility is also mimicked. Just as the brain adapts to a synapse failure by bypassing the affected neuron and forming another neural pathway, the neural network takes another path if a core stops working.
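
The rerouting idea can be illustrated with a shortest-path search that treats failed cores as absent. This is a minimal sketch that assumes the cores form a 2D mesh with links to their four neighbors; the article does not describe the actual on-chip routing scheme, which on real chips is implemented in hardware, and the `route` function is hypothetical.

```python
from collections import deque

def route(width, height, src, dst, failed):
    # Breadth-first search over a hypothetical width x height mesh of cores.
    # Failed cores are skipped, so the shortest surviving path is returned,
    # or None if the destination can no longer be reached.
    if src in failed or dst in failed:
        return None
    previous = {src: None}
    queue = deque([src])
    while queue:
        x, y = queue.popleft()
        if (x, y) == dst:
            # Walk back through the recorded predecessors to rebuild the path.
            path, node = [], dst
            while node is not None:
                path.append(node)
                node = previous[node]
            return path[::-1]
        for nxt in ((x + 1, y), (x - 1, y), (x, y + 1), (x, y - 1)):
            nx, ny = nxt
            if 0 <= nx < width and 0 <= ny < height and nxt not in failed and nxt not in previous:
                previous[nxt] = (x, y)
                queue.append(nxt)
    return None

healthy = route(3, 3, (0, 1), (2, 1), failed=set())
detour = route(3, 3, (0, 1), (2, 1), failed={(1, 1)})
```

With every core working, the signal goes straight through the middle core; when that core fails, the search finds a longer path around it.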

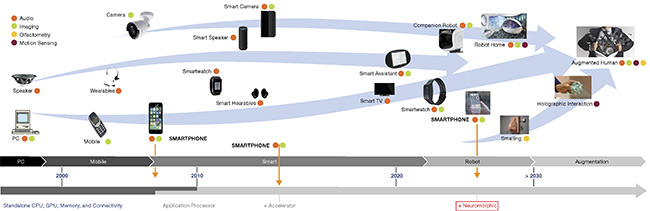

The road to augmented intelligence began with standalone CPU, GPU, memory, and connectivity in the 20th century, and then evolved to wearables, smartwatches, and smart cameras in the 21st century. On the horizon are neuromorphic applications for holographic interaction and the augmented human. Courtesy of Neuromorphic Sensing and Computing report, Yole Développement, 2019.

Chips communicate via an interchip interface, so they can be joined seamlessly, similar to the human brain’s cerebral cortex, to create scalable neuromorphic systems. Neurosynaptic cores are scalable in terms of chip size, the number of cores in a chip, and the number of chips and boards, mirroring the various regions of the human brain.

Neuromorphic ecosystem

While the market for neuromorphic computing is expected to remain small over the next few years, at $69 million in 2024, demand is expected to accelerate its growth to $5 billion in 2029. Neuromorphic sensing, used principally in computer imaging, is expected to reach $43 million in 2024 and grow to $2.1 billion over the same five-year period1.
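
Growth from $69 million to $5 billion in five years is steep. One way to express it is as a compound annual growth rate (CAGR), the constant yearly rate that would produce the same end value. The CAGR is our derived metric, not a figure from the Yole report:

```python
def cagr(start, end, years):
    # Compound annual growth rate: (end / start) ** (1 / years) - 1.
    return (end / start) ** (1 / years) - 1

computing = cagr(69e6, 5e9, 5)    # neuromorphic computing, 2024 to 2029
sensing = cagr(43e6, 2.1e9, 5)    # neuromorphic sensing, 2024 to 2029

print(f"computing: {computing:.0%} per year, sensing: {sensing:.0%} per year")
```

Both forecasts imply the market more than doubling every year, on average, for five years.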

The neuromorphic ecosystem is rich and varied, including incumbents such as Intel and its Loihi chip, Samsung, and SK Hynix, and joined by startups such as General Vision, BrainChip, nepes, and Vicarious. Memory companies are also developing nonvolatile memories such as RRAM (resistive random-access memory), created by researchers at CEA-Leti and Stanford University. Disruptive startups — such as Weebit Nano, Robosensing, Symetrix, and Knowm — have entered the mix, as well as pure-play memory startups, such as Crossbar and Adesto.

IBM, rather optimistically, predicts that by 2025 neuromorphic computing will be able to compete with the human brain. Its progress has been solid, advancing from a chip produced in 2011 that integrates 256 neurons and 262,144 synapses in a single neurosynaptic core to TrueNorth, produced in 2014, which integrates 1 million neurons, 256 million synapses, and 4096 neurosynaptic cores, and is capable of 46 billion SOPS/W (synaptic operations per second, per watt). In parallel, the company intends to develop a system built from 4096 TrueNorth chips that integrates 4 billion neurons and 1 trillion synapses and consumes just 1 kW.
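
The IBM figures fit together multiplicatively, which illustrates the core, chip, and board scalability described earlier. As a sketch, assuming each core is a 256 × 256 crossbar connecting 256 input axons to 256 neurons (the usual description of a TrueNorth core, not stated in this article), the chip-level totals follow directly, and the 4 billion neuron and 1 trillion synapse figures are what 4096 such chips add up to. The “1 million” and “256 million” figures are rounded binary quantities.

```python
NEURONS_PER_CORE = 256
AXONS_PER_CORE = 256      # assumed: 256 x 256 synaptic crossbar per core
CORES_PER_CHIP = 4096
CHIPS_IN_SYSTEM = 4096

# Per chip: 2**20 neurons and 2**28 synapses.
neurons_per_chip = NEURONS_PER_CORE * CORES_PER_CHIP                     # 1,048,576
synapses_per_chip = NEURONS_PER_CORE * AXONS_PER_CORE * CORES_PER_CHIP   # 268,435,456

# Per 4096-chip system: 2**32 neurons and 2**40 synapses.
system_neurons = neurons_per_chip * CHIPS_IN_SYSTEM                      # 4,294,967,296
system_synapses = synapses_per_chip * CHIPS_IN_SYSTEM                    # 1,099,511,627,776
```

So “4 billion neurons” is about 4.3 billion and “1 trillion synapses” is about 1.1 trillion.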

To accompany the hardware, DARPA’s SyNAPSE (Systems of Neuromorphic Adaptive Plastic Scalable Electronics) project aims to offer ready-to-use software stacks for neuromorphic computing chips sometime this year. Intel believes neuromorphic computing chips will be integrated across its portfolio: in end-point technologies such as Internet of Things (IoT) sensors, autonomous vehicles, and desktop and mobile devices; in edge technologies such as servers and gateways; and in the data center.

Use cases for AI

The ubiquitous smartphone is likely to be the trigger for the introduction of neuromorphic computing. Today, many operations, such as biometrics, are power hungry and data intensive. For example, in speech recognition, audio data is sent to the cloud for processing, and the results are returned to the phone. Adding AI requires more computing power, but low-energy neuromorphic computing could allow applications that run in the cloud today to run directly on the smartphone in the future, without draining its battery.

Machine vision applications also hold promise. AI is already used extensively in numerous machine vision applications, and neuromorphic computing can run AI algorithms very efficiently. Moreover, combining it with event-based sensors, which use a neuromorphic, asynchronous architecture, can offer additional benefits such as speeds not limited by frame rates, along with greater sensitivity and dynamic range.
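
Why event-based sensors escape frame-rate limits can be shown with a simplified model. In this sketch, which is an assumption for illustration and not the design of any particular sensor, each pixel remembers the log intensity at its last event and reports an event only when that value has changed by more than a threshold. Real event pixels do this asynchronously and continuously; sampling successive intensity snapshots here is a simplification, and `dvs_events` is a hypothetical name.

```python
import math

def dvs_events(frames, timestamps, threshold=0.2):
    # Each pixel keeps a reference log intensity. When the current log
    # intensity differs from it by at least `threshold`, the pixel emits an
    # event (t, x, y, polarity) and updates its reference. Pixels that do
    # not change produce no data at all. Intensities must be positive.
    reference = [[math.log(v) for v in row] for row in frames[0]]
    events = []
    for frame, t in zip(frames[1:], timestamps[1:]):
        for y, row in enumerate(frame):
            for x, value in enumerate(row):
                delta = math.log(value) - reference[y][x]
                if abs(delta) >= threshold:
                    events.append((t, x, y, 1 if delta > 0 else -1))
                    reference[y][x] = math.log(value)
    return events

# Hypothetical 2 x 2 scene: one pixel brightens, then another darkens.
frames = [
    [[100, 100], [100, 100]],
    [[100, 150], [100, 100]],
    [[100, 150], [100, 60]],
]
events = dvs_events(frames, timestamps=[0, 1, 2])
```

Two small changes yield just two events instead of eight fresh pixel readings, and because each pixel reports on its own schedule, there is no frame interval to wait for.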

The smart home is another promising setting for neuromorphic computing, especially for audio. Clockless operation means that AI in smart speakers can recognize when a person has finished a command and can understand the command no matter how quickly it is spoken. The low power consumption of neuromorphic computing means that devices do not need frequent recharging or battery changes. And because they generate little heat, devices can be fanless, making them quieter and more compact, thus opening up even more uses and applications.

A unique aspect of neuromorphic computing involves its adaptability: if some cores stop working, the neural net can adapt. Another unique feature is scalability. Neurosynaptic cores are designed to be scalable, and they can mirror the various regions of the brain. Courtesy of Neuromorphic Sensing and Computing report, Yole Développement, 2019.

Looking ahead, AI may be used to add other senses, such as smell, to smartphones, or to add motion sensing; farther into the future, humans may use neuromorphic computing to interact with holograms. The availability of a large amount of intelligence in a handheld device has a great deal of potential.

The next-largest market after smartphones is likely to be the industrial sector. This market relies heavily on battery-operated devices, such as drones, inspection tools, and mining equipment, all of which would benefit from neuromorphic computing’s low-power, efficient, AI-enabled processing capability.

The industrial market is not as cost-sensitive as the consumer market. Yole Développement expects the industrial market to be worth $36 million in 2024, around half of the mobile market, and to grow to $2.6 billion by 2029. The mobile market is expected to be worth $71 million in 2024 and to grow to $3.2 billion over the same period. Other sectors are expected to have modest or no market value in 2024 but to gain over the five-year period.

The consumer market is expected to be worth $3 million in 2024 and to grow to $190 million in 2029. Automotive may grow from $1 million to $1.1 billion over the same five-year period, and the medical and computing markets are predicted to grow from zero to $5 million and $35 million, respectively1.
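
The segment forecasts can be cross-checked against the headline forecasts for neuromorphic computing ($69 million to $5 billion) and neuromorphic sensing ($43 million to $2.1 billion). Adding up the segments gives totals within about 1% of computing plus sensing combined, which suggests (our inference, not a statement from the report) that the segment breakdown covers both. The same numbers show that the “around half” comparison between the industrial and mobile markets holds for 2024 but not for 2029.

```python
# Segment forecasts quoted in the article, in millions of US dollars.
segments_2024 = {"mobile": 71, "industrial": 36, "consumer": 3,
                 "automotive": 1, "medical": 0, "computing": 0}
segments_2029 = {"mobile": 3200, "industrial": 2600, "consumer": 190,
                 "automotive": 1100, "medical": 5, "computing": 35}

total_2024 = sum(segments_2024.values())
total_2029 = sum(segments_2029.values())

# Headline forecasts: neuromorphic computing + neuromorphic sensing.
headline_2024 = 69 + 43
headline_2029 = 5000 + 2100

# Industrial market as a share of the mobile market in each year.
industrial_share_2024 = segments_2024["industrial"] / segments_2024["mobile"]
industrial_share_2029 = segments_2029["industrial"] / segments_2029["mobile"]
```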

Neuromorphic computing imitates a biological neural network. There is one neurosynaptic core per artificial neuron. Courtesy of Neuromorphic Sensing and Computing report, Yole Développement, 2019.

The acceleration of AI will require an additional approach to processing data quickly, one that complements deep learning. Enabling software to run on neuromorphic chips is occupying a lot of R&D resources today, as is developing silicon that is small enough for devices such as smartphones and speakers and that does not generate excess heat.

Market indicators show that the time is right for neuromorphic computing. Its efficiency in terms of memory use, energy consumption, and thermal performance could overcome many of the roadblocks that lie ahead for AI, while introducing new use cases.

While neuromorphic computing is still in need of a “killer app,” today the technology has all the attributes of a disruptive technology. The escalation of AI will continue to drive the quest for a processor technology that overcomes the limits of Moore’s law, memory access, and energy consumption. The growth of neuromorphic computing may go unnoticed initially, but with realistically sized chips and power management, the concept’s usefulness may turn it into a necessity.

Meet the author

Adrien Sanchez is a technology and market analyst within the Computing & Software division at Yole Développement, part of the Yole Group of Companies. In collaboration with his team, Sanchez produces technology and market analyses covering computing hardware and software, AI, machine learning, and neural networks.

Reference

1. Yole Développement (2019). Neuromorphic Sensing and Computing 2019: Market and Technology Report.