Innovative applications and the resulting demands of power consumption and data bandwidth require a paradigm shift in communication and network infrastructure.

MASAHISA KAWASHIMA, IOWN GLOBAL FORUM

In today’s fast-paced digital world, speed is everything. Low latency is critical to meeting this need for speed, which can transform industries, enhance our digital lives, and create a smarter world. By reducing the time required for data to travel from one point to another, low latency helps applications run faster and more smoothly, improving the user experience and ensuring customer satisfaction.

Courtesy of iStock.com/ imaginima.

However, current compute and network infrastructures must make quantum leaps in latency to meet the performance needs of latency-sensitive applications. These range from banking systems with synchronous data replication across data centers to life-critical applications, such as AI-driven surgical robots.

The limitations of existing networks owe to probabilistic packet losses and delay variations that are inherent to electronic packet switching systems. These drawbacks are evident in key metrics such as message transfer latency and power efficiency because computing nodes must perform buffering and resending to cope with packet losses and delay variations. Message transfer latency, measured in milliseconds, may not impede a user waiting for a webpage to load. But this parameter becomes critical for latency-sensitive applications in which even a 10-ms delay can compromise performance.
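To make the cost of resending concrete, the following is a minimal sketch of how packet loss inflates average message latency. It assumes a deliberately simple model that is not taken from the article: each delivery attempt is lost independently with probability `loss_prob`, and every lost attempt costs one retransmission timeout `rto_ms`. The function name and all parameter values are hypothetical.

```python
def expected_latency_ms(base_ms: float, loss_prob: float, rto_ms: float) -> float:
    """Expected delivery latency under independent losses (illustrative model).

    The number of failed attempts before the first success is geometric,
    with mean loss_prob / (1 - loss_prob). Each failure adds one timeout.
    """
    if not 0.0 <= loss_prob < 1.0:
        raise ValueError("loss_prob must be in [0, 1)")
    expected_retries = loss_prob / (1.0 - loss_prob)
    return base_ms + expected_retries * rto_ms


if __name__ == "__main__":
    # Hypothetical numbers: 1 ms base latency, 200 ms retransmission timeout.
    for p in (0.0, 0.001, 0.01):
        print(f"loss={p:.3f} -> {expected_latency_ms(1.0, p, 200.0):.2f} ms")
```

Under this model, a loss-free path keeps latency at its base value, while even a small loss probability adds an average penalty that scales with the timeout. Worst-case latency for an individual message is much higher than the average.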

At the same time, the energy requirements for sustaining this data explosion are plagued by unsustainable rates of growth. Data centers already account for ~1% of global electricity consumption, with projections indicating an increase to 3% to 4% by 2030. This rising power usage exacerbates the environmental impact, because each byte of data transferred requires energy for processing, cooling, and transmission.

By contrast, photonic networks offer unparalleled speed, efficiency, and scalability, addressing the dual challenges of managing latency-sensitive applications while aligning with global sustainability goals. Photonic networks do not cause probabilistic packet losses or delay variations, which makes the technology particularly suited for latency-sensitive applications. Moreover, the recent evolution of optical transmission technology has made it possible to send data at gigabit-per-second rates over long distances without using intermediate relay nodes.

To prepare the marketplace for photonic network technology, like-minded organizations from the tech world and key industry verticals have been brought together by the IOWN (Innovative Optical and Wireless Network) Global Forum to create a smarter world through next-generation communications infrastructure. The forum has published proof-of-concept use cases to drive network rollouts, which include multi-data center infrastructure for financial services institutions (FSIs), remote media production and streaming, and green computing.

Financial services’ digital transformation

Perhaps more than any other industry, the financial services sector is already engaged in its digital transformation. This journey has not been seamless. The performance limitations of current technologies, increased regulation, and reliability requirements all conspire to affect business agility and service resiliency even amid emerging opportunities to improve infrastructure and advance service models. In addition, existing hybrid cloud solutions are complex, expensive, and challenging to implement.

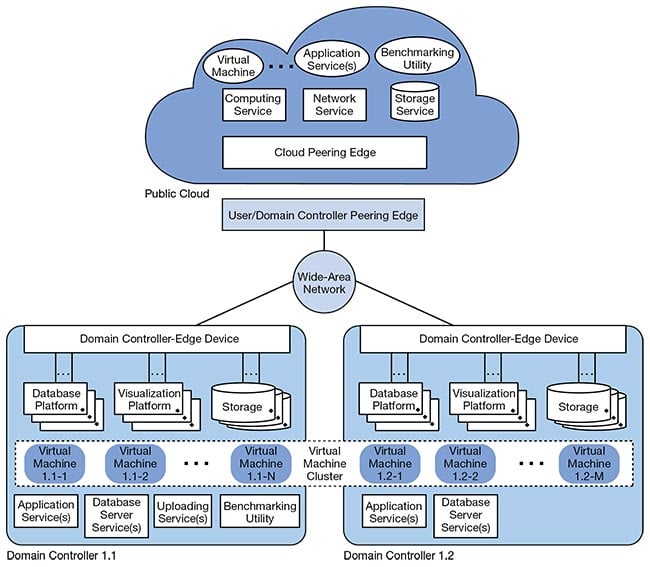

A transition to photonic infrastructure in financial services would both expedite and streamline this technological shift. By using infrastructure in multiple data centers with replication between sites close to real time, operational resilience, agility, and cost optimization will improve (Figure 1). This approach will support FSIs’ disaster recovery and workload migration following a human-induced or natural disaster, such as an earthquake. It will also allow more flexible use of computing resources, such as resource pooling and dynamic resource allocation.

Figure 1. Financial services institutions (FSIs) achieve disaster recovery and inter-regional workload migration through the use of infrastructure in multiple regions, with replication between sites close to real time. These inter-regional technology evaluation connections are shown. Courtesy of IOWN Global Forum.

If applications could deploy without service outages, and computing resources could be used more flexibly, improvements to scalability would follow. It would also help to facilitate the provision of services, such as banking-as-a-service, which relies on external service requests and makes it challenging to predict transaction volumes. This approach not only streamlines the system architecture but also significantly improves data security and system efficiency.

FSIs assign the highest priority to business-critical data and services, such as account systems and ledger data (known as Tier 1 systems). This level of reliability is crucial to support business continuity planning. With traditional infrastructure, it is challenging to replicate data synchronously to a remote data center. The result is either application performance degradation or a fallback to asynchronous replication, which either tolerates a certain level of data inconsistency or assumes operational coping. Essentially, these Tier 1 systems require synchronous replication to ensure the continuity of financial transactions. This is where the IOWN All-Photonic Network (APN) comes in. With APN, systems will be able to achieve synchronous replication with much less performance degradation.
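The link between distance, delay variation, and synchronous replication can be illustrated with a back-of-the-envelope calculation. The sketch below assumes light travels through optical fiber at roughly 5 µs per kilometer, and it models a synchronous commit as complete only when both the local write and the acknowledged remote write have finished. The function names, the `queueing_us` term, and the example values are hypothetical and are not figures from the IOWN Global Forum.

```python
FIBER_DELAY_US_PER_KM = 5.0  # approximate one-way propagation delay in silica fiber


def round_trip_us(distance_km: float) -> float:
    """Round-trip propagation delay over a fiber path of the given length."""
    return 2.0 * distance_km * FIBER_DELAY_US_PER_KM


def sync_commit_latency_us(distance_km: float,
                           local_write_us: float,
                           remote_write_us: float,
                           queueing_us: float = 0.0) -> float:
    """Commit latency when a transaction waits for the remote replica.

    The local write proceeds in parallel with the remote path, so the commit
    finishes at the later of the two. queueing_us stands in for switching and
    buffering delay, which a fixed-latency optical path would keep near zero.
    """
    remote_path_us = round_trip_us(distance_km) + remote_write_us + queueing_us
    return max(local_write_us, remote_path_us)


if __name__ == "__main__":
    # Hypothetical sites 100 km apart, 200 us storage writes at each site.
    print(sync_commit_latency_us(100, 200, 200))                     # fixed-latency path
    print(sync_commit_latency_us(100, 200, 200, queueing_us=3000))   # congested packet path
```

In this model the propagation term is set by physics and distance, so the network's contribution to replication overhead comes down to the queueing and retransmission delay that sits on top of it.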

Remote media production

The rapid advancement of media production technology poses a multifaceted challenge. Industry must secure enough appropriately skilled media production operators to meet demand, especially in rural areas. A lack of qualified personnel, paired with limited resources for acquiring advanced solutions and high networking costs, makes distributing and developing quality content a daunting prospect. As a result, there is increasing demand to develop technologies and architectures that provide an optimized profit structure to address these new realities of the market.

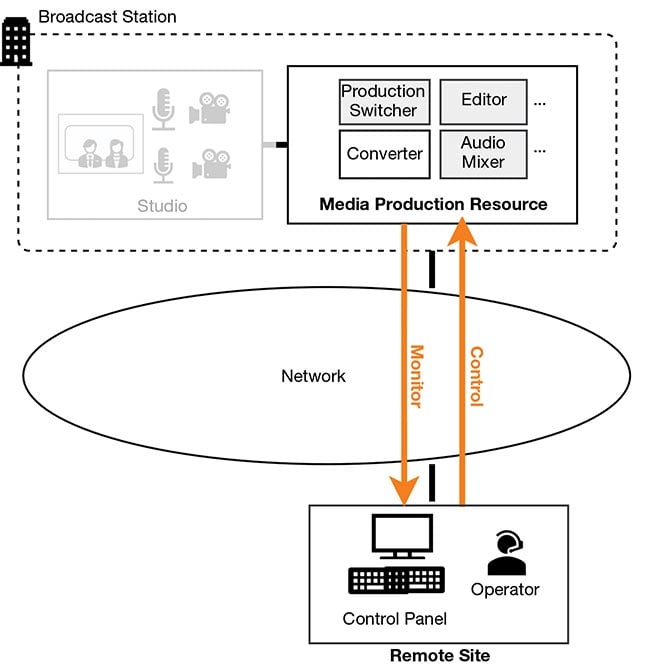

Open APNs provide dynamic, flexible, resilient, high-bandwidth, and low-latency connections to enable distributed applications, including high-quality video production. Using this technology, one or more operators who manipulate the media essence for live broadcasting can remotely control the media production resources, such as the production switcher and editor, as if the operators and the media production resources were together at the same site (Figure 2). The flexible workflow enabled by this model will help secure the necessary skilled media production professionals, who may be geographically distributed.

Figure 2. Using Open All-Photonic Networks (APNs), an operator(s) who manipulates the media essence for live broadcasting can remotely control the media production resources, such as the production switcher and editor, as if the operator and the media production resource were at a common site. Courtesy of IOWN Global Forum.

And, unlike today’s leased lines, Open APNs enable users to turn connections on and off. As a result, wide-area network (WAN) infrastructure costs can be significantly reduced, and many more venues and production sites can be connected.

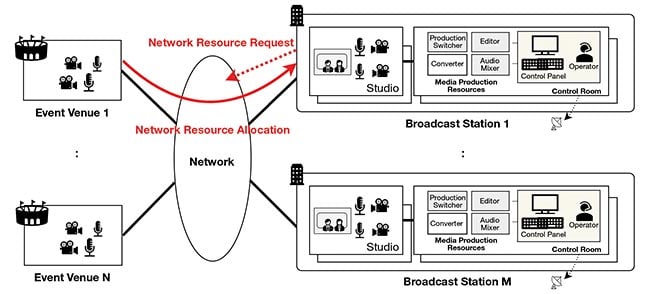

Broadcasters themselves can also benefit from network resource sharing. The broadcast station can request network resources from the event venue to the broadcast station, at any time that an event occurs, to provide high-quality connectivity at an affordable cost (Figure 3). Unlike conventional media production taking place at the event venue, in these instances raw media essence streams are sent directly from the venue to then undergo editing at the broadcast station itself.

Figure 3. Broadcasters benefit from network resource sharing, as the broadcast station can request network resources from the event venue to the broadcast station whenever an event occurs, resulting in high-quality connectivity. Courtesy of IOWN Global Forum.

Green computing

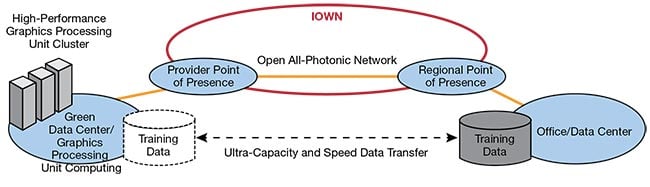

The use of environmentally friendly green data centers is an effective approach to accommodate large-scale calculations for generative AI/large language models (LLMs), which consume considerable electrical power. However, today’s graphics processing unit (GPU) computing services assume that computing and data storage resources are located in the same data center. This makes it difficult for enterprises to use GPU computing resources at green data centers, because enterprises cannot readily store their confidential data in green data centers — which are typically operated by third parties. To effectively use the large amount of data located beyond the data center, end users will require a high-performance network such as an IOWN-based APN. An IOWN-based APN will provide a solution for enterprises to build generative AI/LLMs using green data centers and maintain data confidentiality.

Here, the vision is for users to build an LLM by performing training on GPU computing located on Open APN-connected green data centers, using the training data that exists at their own location. The training process runs by retrieving the training data from the remote site on a chunk-by-chunk basis. As APN(s) provide guaranteed-bandwidth, fixed-latency, and packet-loss-free connections, the time for retrieving a chunk of data is predictable and constant. This will enable the training process to keep running in parallel with the data retrieval process.
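The benefit of running training in parallel with data retrieval can be sketched with a simple timing model. The code below assumes a one-chunk prefetch buffer (the next chunk is fetched while the current one is processed) and constant per-chunk fetch and compute times, which is the predictability the article attributes to the APN. The function names and numbers are hypothetical, and this is an illustration of the general pipelining idea rather than the forum's implementation.

```python
def sequential_time(n_chunks: int, fetch_s: float, compute_s: float) -> float:
    """Total time when each chunk is fetched, then processed, with no overlap."""
    return n_chunks * (fetch_s + compute_s)


def pipelined_time(n_chunks: int, fetch_s: float, compute_s: float) -> float:
    """Total time when chunk i+1 is fetched while chunk i is being processed.

    The first fetch cannot be hidden. After that, each step is paced by the
    slower of fetching and computing, and the last chunk still needs its
    compute time once no further fetch remains.
    """
    if n_chunks <= 0:
        return 0.0
    return fetch_s + (n_chunks - 1) * max(fetch_s, compute_s) + compute_s


if __name__ == "__main__":
    # Hypothetical workload: 10 chunks, 1 s to fetch, 2 s of GPU time each.
    print(sequential_time(10, 1.0, 2.0))
    print(pipelined_time(10, 1.0, 2.0))
```

When the fetch time is constant and shorter than the compute time, the GPUs in this model never wait after the first chunk. If fetch times varied unpredictably, a larger buffer would be needed to avoid stalls, which is why fixed latency matters here.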

It is assumed that many packets used for data reference will be transferred on the Open APN, and that the network latency will affect the model training time and power consumption of GPU computing (Figure 4). Additionally, high-performance GPU computing consumes more power and has higher heat density in proportion to its performance. This means that a green power supply and water-cooling system are essential. A green data center, in which both of these elements are present and available, is therefore at the core of efficient operation of high-performance GPU computing.

Figure 4. Training data transfer on the Open All-Photonic Network (APN) to graphics processing unit (GPU) clusters for large-language models (LLMs). Courtesy of IOWN Global Forum.

The road to 2030

While the benefits are clear, achieving this vision of APN adoption requires a global effort. The success of APNs depends on collaboration across industries, governments, and academia, which must unite to capitalize on the opportunities offered by photonic solutions. By increasing capacity to unprecedented levels, reducing delays to near zero, and slashing energy consumption, photonics offers a sustainable and scalable solution to the challenges of tomorrow.

Fortunately, the shift to photonic networks is already underway. The IOWN Global Forum’s work on open standards and proof-of-concept initiatives has laid the groundwork for scalable, globally deployable solutions.

However, this transition is not without its challenges. Building a new type of network involves rethinking not only technology but also the way we design, build, and manage infrastructure. It requires investment, innovation, and a shared commitment to creating a more connected and sustainable future.

Meet the author

Masahisa Kawashima is chair of the Technology Working Group at IOWN Global Forum and NTT’s IOWN technology director. His expertise includes high-speed networking, software-defined networking, cloud/edge computing, AI, and data management.