The advent of autonomous vehicles, advanced spacecraft, and other technologies that rely on sensors for navigation has created a need for methods that can scan for obstacles, pedestrians, or other objects. Obstructed objects currently pose a challenge for autonomous vehicles, which need a mechanism to scan for hidden objects that may be in their path.

To address this need, Caltech researchers and their colleagues described a method that essentially transforms nearby surfaces into lenses that can be used to indirectly image previously obscured objects. The technology is a form of non-line-of-sight (NLOS) sensing, which detects an object of interest that lies outside of the viewer's line of sight. The method, called UNCOVER, uses nearby flat surfaces, such as walls, as a lens to clearly view the hidden object.

Most current NLOS imaging technologies detect light from a hidden object that is passively reflected by a surface. However, surfaces such as walls cause the light to scatter, which affects the resolution of the image. Computational imaging methods can be used to extract information from the scattered light and improve the image clarity, but they cannot generate high-resolution images.

UNCOVER, however, directly counteracts scattering through its use of wavefront shaping technology. Wavefront shaping was previously unviable because it requires the use of a guidestar, an approximate point source of light that allows details of the hidden object to be deduced.

“We know that lenses image a point onto another point,” researcher Ruizhi Cao said. “If you are looking through a bad ‘lens’ with matte surfaces, the image of a point is now blurred, and the light spreads all over the place, but you can grind and polish the matte surface to navigate the light to the correct position.”

A guidestar helps, in principle, by telling where the tiny bumps are, so that the surface can be correctly polished, he said.
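The "polishing" idea can be illustrated with a toy calculation. The sketch below is not the UNCOVER algorithm and is not taken from the paper; it assumes a hypothetical wall made of patches that each add a random phase delay to the light, and it assumes that a guidestar has revealed those delays exactly. The function names `focus_intensity` and `simulate` are illustrative only.

```python
import cmath
import math
import random


def focus_intensity(wall_phases, correction=None):
    """Intensity at one target point after light scatters off a rough wall.

    Toy model (an assumption, not the paper's model): every wall patch
    contributes a unit-amplitude wave whose phase is the patch's random
    delay plus any correction applied by a wavefront shaper.
    """
    if correction is None:
        correction = [0.0] * len(wall_phases)
    field = sum(
        cmath.exp(1j * (phase + fix))
        for phase, fix in zip(wall_phases, correction)
    )
    return abs(field) ** 2


def simulate(n_patches=1000, seed=0):
    """Return (unshaped, shaped) intensity at the target point."""
    rng = random.Random(seed)
    # The "tiny bumps": one random phase delay per wall patch.
    wall_phases = [rng.uniform(0.0, 2.0 * math.pi) for _ in range(n_patches)]

    # Without shaping, the contributions add with random phases.
    unshaped = focus_intensity(wall_phases)

    # Assumption: the guidestar tells us each delay exactly, so the shaper
    # applies the opposite delay and every contribution arrives in phase.
    correction = [-phase for phase in wall_phases]
    shaped = focus_intensity(wall_phases, correction)
    return unshaped, shaped


if __name__ == "__main__":
    unshaped, shaped = simulate()
    print(f"unshaped intensity: {unshaped:.1f}")
    print(f"shaped intensity:   {shaped:.1f}")
```

In this model the corrected contributions all arrive in phase, so the intensity at the target grows with the square of the number of patches, whereas the uncorrected sum behaves like a random walk. A real system must also measure the delays, which is the role the guidestar plays.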

Caltech professor Changhuei Yang and his colleagues found that the hidden object itself could be used as the guidestar. The UNCOVER NLOS imaging method pieces the scattered light back together into a clear image of the hidden object.

According to Cao, the team’s NLOS imaging method could make autonomous driving safer and be used for rescue missions and other remote-sensing related missions.

“We can see all the traffic on the crossroads with this method,” Cao said. “This might help the cars to foresee the potential danger that one is not able to see directly.”

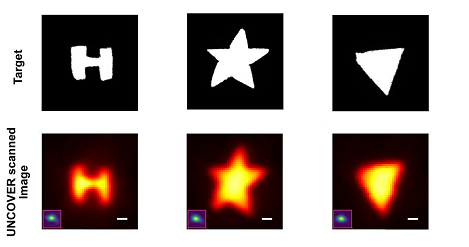

Target objects and the images of them created by UNCOVER NLOS technology. The sensing method could enable safer autonomous mobility by bringing obstructed objects into the vision of autonomous vehicles and other technology. Courtesy of Caltech.

UNCOVER might allow automobiles to see as well as humans do, and it may also help humans to become better drivers. Whereas a human may be able to spot an approaching jaywalker a few feet away, an autonomous car outfitted with UNCOVER technology could potentially spot one on the next block, provided that the imaging conditions are optimal.

UNCOVER imaging could also prove useful beyond Earth — for example, in future robotic missions to explore Mars.

“We are counting on the rovers to take images of another planet to help us develop a better understanding about that planet,” Cao said. “However, for those rovers, some places might be hard to reach because of limited resources and power. With the non-line-of-sight imaging technique, we don’t need the rover itself to do that. What is needed is to find a place where the light can reach.”

The research was published in Nature Photonics (www.doi.org/10.1038/s41566-022-01009-8).