UCLA researchers led by Aydogan Ozcan and Mona Jarrahi have developed a multispectral imaging technology that can turn a monochrome sensor into a multispectral one. Rather than relying on the absorptive filters traditionally used for multispectral imaging, the technology uses a diffractive optical network that forms 16 unique spectral bands repeating periodically across the output image field of view, creating a virtual multispectral pixel array.

Multispectral imaging is used in applications including environmental monitoring, aerospace, defense, and biomedicine. Thanks to their compact form factor and their computation-free, power-efficient, and polarization-insensitive forward operation, diffractive multispectral imagers can be used in parts of the electromagnetic spectrum where high-density, wide-area multispectral pixel arrays are not widely available.

Despite the widespread use of spectral imagers such as color cameras, scaling up the absorptive spectral filter arrays used in traditional RGB cameras to collect richer spectral information from many distinct color bands poses several challenges, owing to the filters' low power efficiency, high spectral cross-talk, and poor color representation quality.

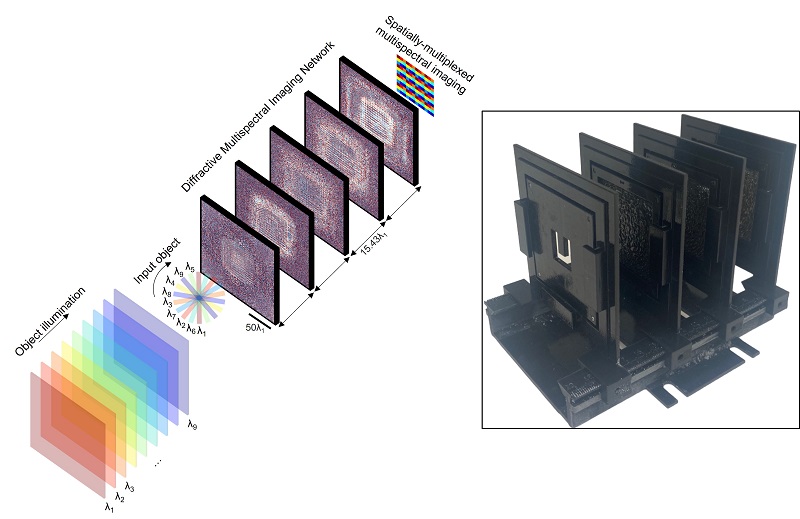

To construct a diffractive optical network, which can function as an alternative to these absorptive filters, several transmissive layers that are structured to compute using light-matter interactions are fabricated to form an actual material stack. The design of the network is based on deep learning, and the transmissive layers serve as an all-optical processor. As the input light is transmitted through these thin elements, different computational tasks such as image classification or reconstruction can be completed at the speed of light propagation, Ozcan said.

This diffractive multispectral imager can convert a monochrome image sensor into a snapshot multispectral imaging device without conventional spectral filters or digital reconstruction algorithms. Courtesy of Ozcan Lab, UCLA.
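The article does not describe the researchers' design code, but the forward behavior of such a layer stack can be illustrated with a standard scalar-diffraction model. The minimal Python sketch that follows is not the UCLA team's implementation: it assumes each layer is a thin, lossless height profile whose phase delay 2π·Δn·h/λ depends on wavelength, and it uses the angular spectrum method for free-space propagation between layers. The grid size, pixel pitch, layer spacing, wavelengths, and index contrast `delta_n` are hypothetical; dispersion and absorption are ignored, and in a deep-learning design the heights would be trainable parameters optimized with an autodiff framework rather than random values.

```python
import numpy as np


def angular_spectrum_propagate(field, wavelength, dx, distance):
    """Propagate a square 2D complex field over `distance` (m) in free space
    with the angular spectrum method; evanescent components are discarded."""
    n = field.shape[0]
    fx = np.fft.fftfreq(n, d=dx)  # spatial frequencies in cycles per metre
    FX, FY = np.meshgrid(fx, fx, indexing="ij")
    arg = 1.0 / wavelength**2 - FX**2 - FY**2
    propagating = arg > 0
    kz = 2 * np.pi * np.sqrt(np.where(propagating, arg, 0.0))
    transfer = np.where(propagating, np.exp(1j * kz * distance), 0.0)
    return np.fft.ifft2(np.fft.fft2(field) * transfer)


def diffractive_forward(field, heights, wavelength, dx, spacing, delta_n=0.7):
    """Send a field through a stack of thin, lossless layers described by
    height maps (m) and return the output intensity a monochrome sensor sees.

    Each layer adds a phase of 2*pi*delta_n*h/wavelength, so one physical
    structure acts differently on each wavelength. delta_n is a hypothetical
    refractive-index contrast; dispersion and absorption are ignored.
    """
    for h in heights:
        field = angular_spectrum_propagate(field, wavelength, dx, spacing)
        field = field * np.exp(1j * 2 * np.pi * delta_n * h / wavelength)
    field = angular_spectrum_propagate(field, wavelength, dx, spacing)
    return np.abs(field) ** 2  # the sensor records intensity only


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    n, dx = 64, 0.4e-3  # hypothetical 64 x 64 grid with 0.4 mm pixels
    # Random heights (up to 1 mm) stand in for trained, deep-learning-designed ones.
    heights = [rng.uniform(0, 1e-3, (n, n)) for _ in range(3)]
    source = np.zeros((n, n), dtype=complex)
    source[24:40, 24:40] = 1.0  # simple square object
    for wavelength in (0.8e-3, 1.0e-3):  # two hypothetical terahertz wavelengths
        out = diffractive_forward(source, heights, wavelength, dx, spacing=3e-3)
        print(f"{wavelength * 1e3:.1f} mm: total output power {out.sum():.1f}")
```

Because the same height profile imposes a different phase at each wavelength, the two runs produce different output intensity patterns. Optimizing the heights so that each band is steered onto its own set of output pixels is what lets a stack like this act as a virtual spectral filter array.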

The researchers’ diffractive network-based multispectral imager is optimized to spatially separate the input spectral channels onto distinct pixels at the output image plane. It thus serves as a virtual spectral filter array that preserves the spatial information of the input scene or objects, instantly yielding an image cube without any image reconstruction algorithm.

An image cube is a stack of images, each of which captures the input scene in a different spectral band. The third dimension of this cube, Ozcan said, is the optical spectrum.
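Because each spectral band lands on its own pixels, reading out the image cube amounts to regrouping sensor pixels rather than running a reconstruction algorithm. The Python sketch that follows assumes, hypothetically, that the 16 bands form a 4 x 4 virtual multispectral pixel tiling the sensor, with each band covering one sensor pixel per tile; in a real device the layout, the number of sensor pixels per band, and the mapping of tile positions to wavelengths are set by the specific design.

```python
import numpy as np


def frame_to_cube(frame, tile=4):
    """Regroup a monochrome frame whose pixels repeat in a tile x tile pattern
    of spectral bands into an image cube of shape (tile*tile, H//tile, W//tile).

    Band index b = r * tile + c collects every sensor pixel at row offset r
    and column offset c within the repeating tile (a hypothetical layout).
    """
    h, w = frame.shape
    if h % tile or w % tile:
        raise ValueError("frame dimensions must be multiples of the tile size")
    bands = [frame[r::tile, c::tile] for r in range(tile) for c in range(tile)]
    return np.stack(bands)  # axis 0 is the spectral dimension of the cube


if __name__ == "__main__":
    frame = np.arange(64).reshape(8, 8)  # stand-in for an 8 x 8 sensor readout
    cube = frame_to_cube(frame)
    print(cube.shape)  # (16, 2, 2)
    print(cube[5])     # band at row offset 1, column offset 1 of each tile
```

The function only reorders pixel values and performs no per-pixel arithmetic; a practical system would likely add per-band calibration, which this sketch omits.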

Therefore, the diffractive multispectral imaging network can virtually convert a monochrome image sensor into a snapshot multispectral imaging device without conventional spectral filters or digital reconstruction algorithms.

“Simply put, you can place this thin diffractive network (like a transparent stamp) on a monochrome imager chip to convert it into a multispectral imager,” Ozcan told Photonics Media.

The diffractive network-based multispectral imager framework offers both high spatial imaging quality and high spectral signal contrast.

“We showed that ~79% average transmission efficiency across distinct bands could be achieved without a major compromise on the system’s spatial imaging performance and spectral signal contrast,” Ozcan said.

The initial experimental demonstration of this work was carried out in the terahertz region of the electromagnetic spectrum. Ozcan and his colleagues plan to extend the technology to infrared and visible wavelengths in future work.

The research was published in Light: Science & Applications (www.doi.org/10.1038/s41377-023-01135-0).