As vision systems increase in speed and bandwidth, scientists are looking to biological systems for ways to process image data more quickly.

FAROOQ AHMED, CONTRIBUTING EDITOR

Data, as the saying goes, is king. But as any chess player knows, the king without his minions is hamstrung by limited mobility. The ubiquitous deployment of CMOS and CCD imagers across a range of industries and applications has generated an avalanche of image data. However, without the use of centralized processing units — a CPU, GPU, or cloud processor — that data is similarly hobbled.

Whether CMOS or CCD, image sensors receive incoming photons and convert optical signals into electrical ones. Converting and moving this data between the sensor, an analog-to-digital converter, and the processor consumes both power and time. This reliance on off-chip processing is hindering machine vision’s capabilities.

“We think that machine vision as a whole isn’t even close in reaching its full potential,” said professor Thomas Müller, who directs the Nanoscale Electronics and Optoelectronics Group at the Vienna University of Technology in Austria.

In addition, the growing adoption of machine vision in sectors such as intelligent vehicles, autonomous robots, surveillance, and mobile medical devices demands rapid, real-time processing that consumes less power than current technologies do.

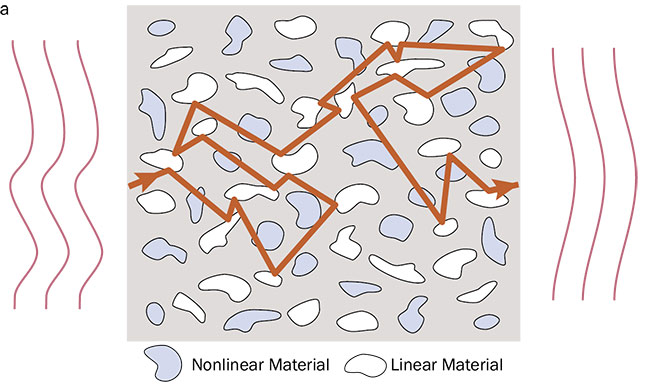

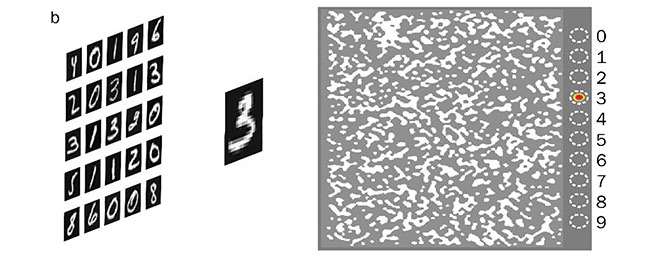

Zongfu Yu and colleagues at the University of Wisconsin-Madison developed a smart glass inspired by the brain’s neural network. The glass acts as a nanophotonic neural medium trained to recognize handwritten digits by focusing incoming light to various locations based on the image’s content (a). In practical terms, it processes images directly. When the number 3 was projected onto the glass, for example, the medium transformed the image into a wavefront of optical energy that exited at a specific output (b). Courtesy of University of Wisconsin-Madison.

“With the automation of more and more complex tasks, which often need visual information and context, faster and more energy-efficient machine vision will be of high demand in the next decades,” Müller said.

Integrated approaches

One solution that has sped up the processing of images is on-chip processing, which is helping machine vision to reach its full potential by reducing the need to transfer image data to an off-chip processor. The king is getting help at the source, as computational tasks move onto the image sensor itself.

But such solutions still confront a fundamental divide in image processing: Because of the different requirements of the sensing and computing functions, engineers separated the design of sensory systems and computing units in conventional vision systems. This separation has become the next hurdle to overcome.

Zongfu Yu, an engineer at the University of Wisconsin-Madison, said, “Historically, machine vision has been divided into the people who develop the sensors and those who develop the algorithms.” While this division of labor helped spur innovation in each sector, he added, “It’s certainly not how vision evolved in animals. We need an integrated approach to optimize both [hardware and software] together.”

Scientists are looking to biology for inspiration on how they might advance data processing methods using intelligent sensors. Evolution, of course, has developed a blueprint for this: the brain’s neural network, which integrates sensory information and reacts quickly by leveraging complex parallel processing. Neuromorphic, artificial neural networks mimic the brain’s ability to process data.

Müller defined neuromorphic engineering as “a process that uses biologically inspired concepts to develop novel solutions.” The Austrian scientist and his colleagues combined neuromorphic engineering with sensor-side computing to create a neural network directly on an image sensing chip¹.

“The sensor represents and operates as an artificial neural network,” he said. “We think incorporating computational tasks directly into the vision sensor is key to reducing bandwidth and boosting speed for the next generation of smart machine vision hardware.”

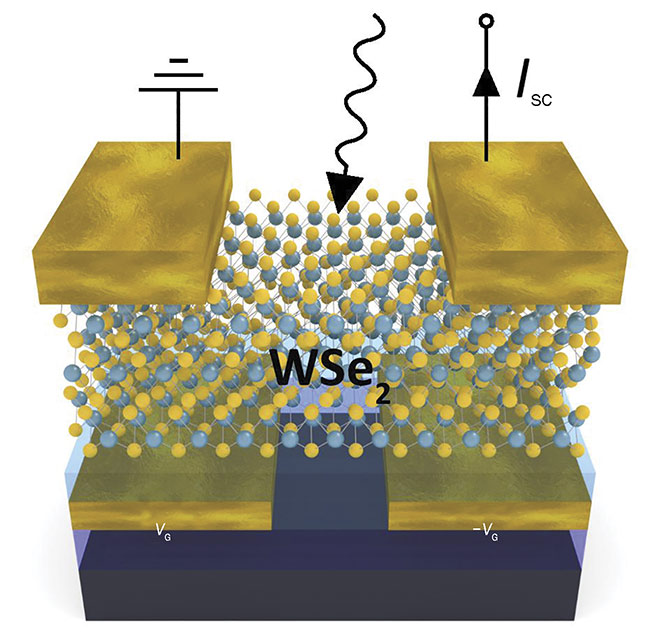

Müller’s chip contains individually tunable tungsten diselenide (WSe2) photodiodes that respond proportionally to incoming light. The photodiodes, arranged in a two-dimensional 3 × 3 array, act as nodes in the artificial neural network to preprocess incoming visual data. Less light on a photodiode gives it less synaptic weight in the neural network and affects the interpretation of the image. This analog processing removes the need for analog-to-digital conversion, and the artificial neural network is continuously tunable. Conventional silicon-based photodiodes respond to light in a fixed, on-off, binary manner, limiting their utility as a tunable computational platform.
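The weighted sum that such a sensor performs can be sketched in a few lines of Python. This is a minimal illustration, not the Vienna group's implementation: the function name `sensor_outputs`, the test image, and the responsivity values are all hypothetical, and the sketch assumes that each photodiode's tunable responsivity acts as a signed weight and that the weighted contributions add up into one current per output.

```python
# Illustrative sketch only: every name and number here is an assumption,
# not a value taken from the article or from the published device.

def sensor_outputs(optical_power, responsivity):
    """Weighted sum performed by a photodiode array.

    optical_power: 9 light powers, one per pixel of a 3 x 3 array.
    responsivity:  one list of 9 tunable responsivities (the weights) per output.
    Returns one summed photocurrent per output.
    """
    return [
        sum(r * p for r, p in zip(weights, optical_power))
        for weights in responsivity
    ]


# Hypothetical 3 x 3 image, flattened row by row (1.0 = lit pixel, 0.0 = dark).
image = [1.0, 0.0, 1.0,
         0.0, 1.0, 0.0,
         1.0, 0.0, 1.0]

# Two hypothetical outputs: one tuned to the diagonals, one to the central cross.
responsivity = [
    [0.5, -0.5, 0.5, -0.5, 0.5, -0.5, 0.5, -0.5, 0.5],
    [-0.5, 0.5, -0.5, 0.5, 0.5, 0.5, -0.5, 0.5, -0.5],
]

currents = sensor_outputs(image, responsivity)
print(currents)  # [2.5, -1.5]
```

The diagonal-tuned output responds strongly to the X-shaped image while the cross-tuned output goes negative, which is the sense in which the array preprocesses the image before any digital conversion takes place.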

The researchers used a 2D semiconductor instead of a conventional 3D one because doping in two-dimensional materials provided better control of the responsivity of each photodetector. As a photovoltaic device, the chip is essentially self-powered and requires electrical energy only while training the artificial neural network.

Müller’s team trained the chip to classify simple letters through a supervised learning algorithm and to act as an autoencoder to detect image shapes through unsupervised training. The device responded in about 50 ns. And Müller noted an added benefit. “Neuromorphic design has proven to be rather robust to fabrication-related variations and imperfections,” he said.
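As a rough software analogue of the supervised training described above, the sketch below fits a single-layer softmax classifier to three 3 × 3 letter patterns. It is a stand-in under stated assumptions, not the team's procedure: the pixel patterns, the learning rate, the epoch count, and all function names are hypothetical, and the weights here are ordinary floating-point numbers rather than photodiode responsivities set on a chip.

```python
import math

# Hypothetical 3 x 3 training patterns, flattened row by row (assumed for illustration).
PATTERNS = {
    "n": [1, 1, 1, 1, 0, 1, 1, 0, 1],
    "v": [1, 0, 1, 1, 0, 1, 0, 1, 0],
    "z": [1, 1, 0, 0, 1, 0, 0, 1, 1],
}
LABELS = list(PATTERNS)


def softmax(values):
    """Convert raw outputs into probabilities (shifted by the max for stability)."""
    peak = max(values)
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def forward(weights, pixels):
    """Each output is a weighted sum of pixel intensities, followed by softmax."""
    return softmax([sum(w * p for w, p in zip(row, pixels)) for row in weights])


def train(epochs=500, rate=0.1):
    """Per-sample gradient descent on the cross-entropy loss, starting from zero weights."""
    weights = [[0.0] * 9 for _ in LABELS]
    for _ in range(epochs):
        for target, label in enumerate(LABELS):
            pixels = PATTERNS[label]
            probs = forward(weights, pixels)
            for k, row in enumerate(weights):
                error = probs[k] - (1.0 if k == target else 0.0)
                for i, p in enumerate(pixels):
                    row[i] -= rate * error * p
    return weights


def classify(weights, pixels):
    """Return the label whose output probability is largest."""
    probs = forward(weights, pixels)
    return LABELS[probs.index(max(probs))]


weights = train()
predictions = {label: classify(weights, pixels) for label, pixels in PATTERNS.items()}
print(predictions)
```

Once the weights are fixed, classification needs only one weighted sum per output, which is the step an in-sensor network would carry out in the analog domain.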

Vienna University of Technology’s Thomas Müller developed individually tunable tungsten diselenide (WSe2) photodiodes that respond to light proportionately and can act as an artificial neural network. SC: semiconductor; VG/−VG: voltage pair. Courtesy of Vienna University of Technology.

Yu said this is important. “Machine learning has to generalize. Just like with a brain’s neural network — if one cell dies you still have the right output.”

Smarter glass

Like Müller’s team, Yu’s group, led by graduate student Erfan Khoram, developed a device that leveraged analog preprocessing of visual information at the sensor to both speed up image recognition and decrease power consumption. In their case, however, the sensor was a piece of glass that used lightwave propagation dynamics to perform computation².

“We thought we could exploit the physics to do the computing for us for free instead of manipulating electrons,” Khoram said.

The smart glass acted as an optical, nanophotonic medium that performed nonlinear mode mapping using photons instead of electrons. As light passed through the glass, asymmetric structures called inclusions scattered the incoming light. The inclusions, Yu said, were crucial to the glass’s activity. “They can be minute air holes or other materials with different refractive indices from the host material.”

The group trained the glass to recognize numbers. When projected onto the glass, the number 3, for example, transformed into a wavefront of optical energy that exited at a specific output.

“The training was done directly on the optical medium instead of on a digital algorithm,” Yu said. The glass responded dynamically, detecting when the scientists changed the number 3 into the number 8. After training, the glass required no electrical energy because it operated passively.

Analog signal processing has added benefits, such as decreased noise and increased privacy. “We all have Alexa and Google in our homes, but no one knows who’s listening,” Yu said. “Once the physical signal is converted into the digital domain, it can go anywhere.” Analog computing ensures that the original signal remains in the physical domain. “There’s no way to access it externally,” he said.

Yu’s team is working with researchers at Columbia University to expand potential applications such as fingerprint or facial recognition. “It would be useful, for example, in situations that require a device to work after being deployed in the field for decades without maintenance,” he said.

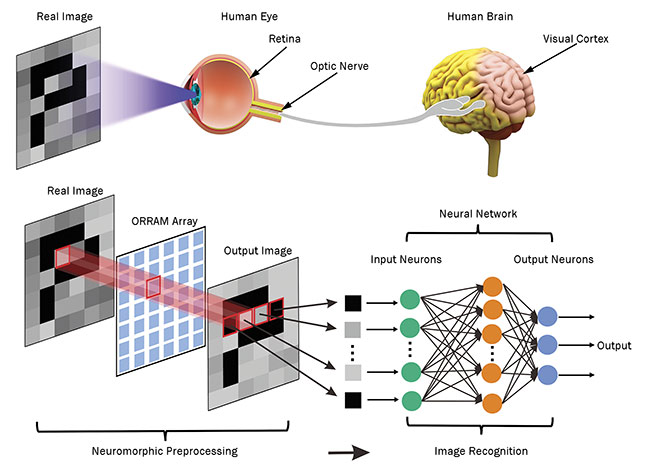

Yang Chai of Hong Kong Polytechnic University developed an artificial neuromorphic visual system inspired by the human visual system. The intensity and duration of incoming light modulate the device’s response, enabling it to preprocess images for an artificial neural network, just as the retina does for the brain’s neural network. ORRAM: optoelectronic resistive random-access memory. Courtesy of Hong Kong Polytechnic University.

Optical devices have been notoriously difficult to miniaturize, and smart glass could also pave the way for smaller, intelligent laser cavities, photonic crystals, and beamsplitters.

The key, Yu believes, is to functionalize the inclusions, which would expand the device’s capabilities. “Inclusions made of dye semiconductors or graphene saturable absorbers could allow for distributed nonlinear activation,” he said.

Visual memory

While neuromorphic systems and artificial neural networks can emulate the basic functions of the human brain, their ability to integrate with other electronic components, especially those based on conventional technology, can be an obstacle to widespread adoption. Yu said, “Our optical media is just a computing arm. There’s no storage, for example. No retrieval memory.”

A solution devised by applied physicist Yang Chai’s group at Hong Kong Polytechnic University also drew inspiration from biology, specifically the human visual system and its retina³. They designed memory that performed in-sensor visual preprocessing. The device, called ORRAM for “optoelectronic resistive random-access memory,” was fabricated from molybdenum oxide and responded to light in the ultraviolet range.

Chai said, “We shifted some of the computational and retention tasks to the sensory devices, which reduces redundant data movement, decreases power consumption, and generates and consumes the data locally.”

As with Müller’s sensor, light intensity and duration modulated the ORRAM device’s response, so that it was tunable and could, in a sense, learn to retain information. A higher dose of photons, for example, resulted in longer memory retention than a lower dose, mimicking the long-term and short-term plasticity of the human brain. The chip also preprocessed images by filtering out noise from background light. After training, an 8 × 8 ORRAM array retained several letters it had learned in the researchers’ experiments.
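The dose-dependent retention can be pictured with a toy model. The sketch below is purely illustrative: the exponential decay, the linear link between light dose and decay time, the parameter values, and the function names are all assumptions chosen for simplicity, not measurements or a model taken from the ORRAM work.

```python
import math


def retention_time_s(dose, base_time_s=10.0, gain=5.0):
    """Toy assumption: the decay time constant grows linearly with light dose."""
    return base_time_s * (1.0 + gain * dose)


def remembered_fraction(dose, elapsed_s):
    """Fraction of the light-induced state still stored after elapsed_s seconds,
    assuming simple exponential decay."""
    return math.exp(-elapsed_s / retention_time_s(dose))


# Compare a weak and a strong exposure one minute after the light is removed.
low = remembered_fraction(dose=0.2, elapsed_s=60.0)
high = remembered_fraction(dose=2.0, elapsed_s=60.0)
print(f"low dose: {low:.3f}, high dose: {high:.3f}")
```

In this toy picture the weakly exposed pixel has almost entirely forgotten its state after a minute while the strongly exposed one still holds most of it, loosely mirroring the short-term and long-term behavior described above.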

“Image preprocessing is a characteristic of the human retina, and we believe that biomimicry will contribute to the development of applications in edge computing and the Internet of Things,” Chai said. While still in the proof-of-concept stage, the device has attracted investors for large-scale prototyping.

“This integration promises a new, exciting future for the optimization of machine vision technology,” said the University of Wisconsin-Madison’s Yu. He added, “Reprogrammability could transform these devices into ultrahigh-density computing platforms. This would allow us to rethink the entire idea of the computer.”

www.linkedin.com/in/farooqtheahmed

Acknowledgments

The author would like to thank Thomas Müller of the Vienna University of Technology, Zongfu Yu and Erfan Khoram of the University of Wisconsin-Madison, Yang Chai of Hong Kong Polytechnic University, and Francesco Mondadori from Opto Engineering.

References

1. L. Mennel et al. (2020). Ultrafast machine vision with 2D material neural network image sensors. Nature, Vol. 579, pp. 62-66, www.doi.org/10.1038/s41586-020-2038-x.

2. E. Khoram et al. (2019). Nanophotonic media for artificial neural inference. Photonics Res, Vol. 7, Issue 8, pp. 823-827, www.doi.org/10.1364/PRJ.7.000823.

3. F. Zhou et al. (2019). Optoelectronic resistive random access memory for neuromorphic vision sensors. Nat Nanotechnol, Vol. 14, pp. 776-782, www.doi.org/10.1038/s41565-019-0501-3.