For the inspection of shiny or translucent objects, or those with complex geometries, machine learning-powered vision trumps conventional vision.

HANK HOGAN, CONTRIBUTING EDITOR

Plucking individual objects from a collection, or bin picking, is a staple of manufacturing and processing. Robotic bin picking has been around for decades but often required special expertise to configure, as well as some sort of manual intervention, such as placing parts on a fixture prior to the robot’s actions. However, recent advancements in machine learning and associated AI technologies are enabling new capabilities in robotic bin picking, saving time and money.

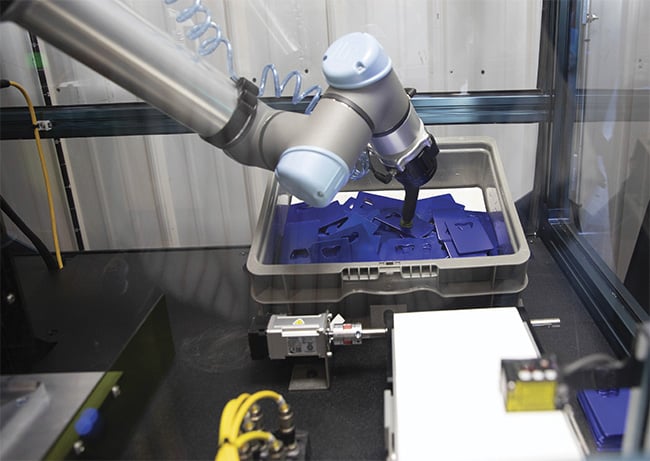

Advancements in AI enable the random bin picking of objects that were difficult for traditional vision solutions to pick up, such as shiny metal parts. Courtesy of FPE Automation.

Sandia Harrison, director of marketing at FPE Automation, has witnessed these innovations in action. FPE Automation is a system integrator that develops turnkey bin picking and custom solutions with collaborative, high-speed robots.

Robotic bin picking was not effective when objects were difficult to detect, shiny, or otherwise challenging; such constraints have eased lately due to AI.

“What was not doable quite recently is very much possible now. That has opened the concept of bin picking to a lot of companies where it might not have been an option before,” Harrison said.

She said that in the past, parts sometimes had to be placed into fixtures or onto boards for imaging and machine vision analysis. Setups controlled lighting to enhance contrast and lessen variability. Such actions ensured that a robot could identify a part and successfully grip it before moving it to another location.

This approach was not functional with randomly oriented parts, such as those piled into a bin, or nonuniform objects, including apples, potatoes, or other fruits and vegetables. Issues also arose with shiny objects because their reflective surfaces tend to wash out images.

Applying machine learning

Recent AI advancements allow for machine learning to be applied to bin picking. These implementations depend on artificial neural nets that consist of layers of connected software neurons. Feedforward and feedback mechanisms adjust the relative weights of the connections between neurons during training to create a model that takes an input, such as the image of an object, and produces an output, such as a classification of whether the object is a potato or not.
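The training loop described above can be illustrated with a deliberately tiny sketch: a single software neuron rather than a layered network, trained by gradient descent on invented numbers. The two features (elongation and surface roughness) and all sample values are assumptions made up for illustration; a real system works on images and is far larger.

```python
import math

# Invented toy data: (elongation, surface_roughness) pairs, label 1 = "potato".
SAMPLES = [
    ((0.9, 0.8), 1),
    ((0.8, 0.9), 1),
    ((0.7, 0.7), 1),
    ((0.2, 0.1), 0),
    ((0.1, 0.3), 0),
    ((0.3, 0.2), 0),
]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def forward(weights, bias, features):
    """Feedforward pass: weighted sum of the inputs, squashed to a 0..1 score."""
    z = sum(w * x for w, x in zip(weights, features)) + bias
    return sigmoid(z)


def train(samples, epochs=2000, learning_rate=0.5):
    """Adjust the weights so the neuron's score moves toward each label."""
    weights = [0.0, 0.0]
    bias = 0.0
    for _ in range(epochs):
        for features, label in samples:
            error = forward(weights, bias, features) - label
            # Feedback step: nudge every weight against its share of the error.
            weights = [w - learning_rate * error * x
                       for w, x in zip(weights, features)]
            bias -= learning_rate * error
    return weights, bias


def classify(weights, bias, features):
    """Return True when the trained neuron scores the input as a potato."""
    return forward(weights, bias, features) >= 0.5


WEIGHTS, BIAS = train(SAMPLES)
```

After training, `classify(WEIGHTS, BIAS, (0.85, 0.85))` labels a new potato-like sample as a potato, while `classify(WEIGHTS, BIAS, (0.2, 0.2))` does not.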

A robot accurately picks up blue plastic parts due to the improvements in vision technology. Courtesy of FPE Automation.

A pair of off-the-shelf 12-MP cameras focus on clear plastic tubes in an Apera AI vision system. Information from the cameras runs through AI-powered vision software and gives a Kawasaki cleanroom robot instructions to pick and place the object. Clear objects were previously a struggle for conventional vision solutions. Courtesy of Apera AI.

This training involves showing the AI system lots of pictures of objects, such as potatoes, in all orientations as well as many pictures of objects that are not potatoes. As the system moves through the training set, it distinguishes the visual features that make a potato a potato. Eventually, it creates a model that can accurately classify objects, even in cases of poor contrast or other vision challenges. During bin picking, the AI technology identifies edges and extracts the relevant information needed for the robot to decipher what to pick up and in what order in a pile of objects.

“Which one is the one I can pick the fastest so I can get down to the bottom of this bin the fastest?” Harrison asked while explaining how this capability impacts cycle time.

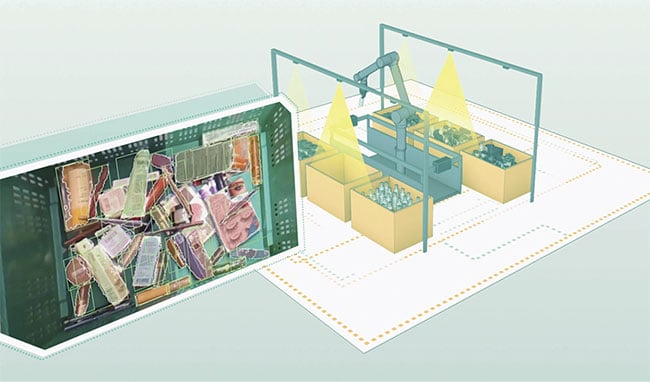

An Apera AI bin picking system using an Epson industrial robot. Two pairs of 12-MP cameras focus on four bins containing bolts of different sizes, colors, and degrees of shininess. AI-powered vision software guides the picking of the bolts and their vertical placement into a fixture. Courtesy of Apera AI.

FPE Automation has investigated various AI-enabled bin-picking solutions, including technology from Apera AI. Eric Petz, head of marketing at Apera AI, said that the company uses off-the-shelf 2D cameras and lenses to capture a scene and combines these images into a 3D interpretation of the objects’ layout.

The objects in the camera’s view are evaluated by an artificial neural network that is trained on simulations generated from computer-aided design drawings or 3D scans. These generated images ensure that the training set covers all eventualities, such as all orientations of an object. The training involves about 1,000,000 simulated cycles to achieve a greater than 99.99% reliability, according to Petz. This approach enables the system to understand and recognize an object of interest in every orientation.
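One generic way to cover all orientations of an object in a simulated training set is to draw rotations uniformly at random. The sketch below uses Shoemake's method for uniform random unit quaternions; it is not a description of Apera AI's pipeline, and the function names are hypothetical. Each quaternion would then be passed to a rendering step, which is not shown here.

```python
import math
import random


def random_orientation(rng):
    """Return a uniformly distributed unit quaternion (x, y, z, w)."""
    u1, u2, u3 = rng.random(), rng.random(), rng.random()
    a = math.sqrt(1.0 - u1)
    b = math.sqrt(u1)
    return (
        a * math.sin(2 * math.pi * u2),
        a * math.cos(2 * math.pi * u2),
        b * math.sin(2 * math.pi * u3),
        b * math.cos(2 * math.pi * u3),
    )


def sample_training_poses(count, seed=0):
    """Draw `count` random orientations; the seed makes the set repeatable."""
    rng = random.Random(seed)
    return [random_orientation(rng) for _ in range(count)]


# A small batch for illustration; the article cites about 1,000,000 cycles.
POSES = sample_training_poses(1000)
```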

Petz pointed to several advantages offered by this AI bin-picking technology. Because it uses standard lenses and cameras, with Apera AI recommending 12-MP ones, it lowers hardware expenses. It also works in ambient light and is immune to lighting changes, which is an asset for working outside or in locations, such as a loading dock, in which the doors may be open at times and closed at others.

RightHand Robotics’ AI is trained on a limited set of items but can scale to items it has never seen before due to the use of what the company calls broad AI. Courtesy of RightHand Robotics.

“Whereas a conventional vision system may have a vision cycle time of 3+ seconds, we regularly achieve total vision cycle times as low as 0.3 seconds,” Petz said. This speed makes a key milestone of 2000 picks an hour possible, he said.
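The link between vision cycle time and the 2000-picks-per-hour milestone can be checked with simple arithmetic. The sketch below assumes that each pick waits for one vision cycle and then one robot move, with no overlap between the two, and it uses a hypothetical robot motion time of 1.5 seconds. Neither assumption comes from Apera AI; real cells may overlap imaging with robot motion.

```python
SECONDS_PER_HOUR = 3600


def time_budget_per_pick(target_picks_per_hour):
    """Seconds available for each pick at a given hourly target."""
    return SECONDS_PER_HOUR / target_picks_per_hour


def picks_per_hour(vision_cycle_s, robot_motion_s):
    """Hourly picks when vision and robot motion run strictly one after the other."""
    return SECONDS_PER_HOUR / (vision_cycle_s + robot_motion_s)


ROBOT_MOTION_S = 1.5  # hypothetical value chosen for illustration

BUDGET_S = time_budget_per_pick(2000)              # 1.8 s per pick
CONVENTIONAL = picks_per_hour(3.0, ROBOT_MOTION_S)  # 3600 / 4.5 = 800 picks per hour
AI_VISION = picks_per_hour(0.3, ROBOT_MOTION_S)     # about 2000 picks per hour

print(f"Budget per pick at 2000 picks/hour: {BUDGET_S:.1f} s")
print(f"3.0 s vision cycle: {CONVENTIONAL:.0f} picks/hour")
print(f"0.3 s vision cycle: {AI_VISION:.0f} picks/hour")
```

Under these assumptions, a 3-second vision cycle alone exceeds the 1.8-second budget per pick, so the milestone is out of reach no matter how fast the robot moves.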

A third benefit is the ability to deal with shiny, clear, or translucent objects as well as complex geometry. These are object categories that conventional vision systems struggle with, according to Petz.

He added that the technology is used at a tier 1 automotive supplier for the picking and sorting of highly similar black plastic parts on a black conveyor belt. The contrast between the part and the belt is low, and the parts are only differentiated by features that are millimeters long.

Apera AI produces robot vision software, not the robot itself, but other companies, such as Soft Robotics, offer AI bin-picking solutions along with hardware. Soft Robotics’ mGripAI combines the company’s proprietary soft grippers, 3D machine vision, and AI software. Austin Harvey, director of product management, noted that bin picking involves locating individual pickable candidates, identifying the best pick point, planning a robot path for the process, and avoiding collisions with other objects.
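The four functions Harvey lists can be sketched as a small decision routine. Everything here is a simplified assumption for illustration and not Soft Robotics' implementation: the `Candidate` type, the clearance and confidence thresholds, the "highest part first" rule, and the sample detections are all hypothetical. The collision check in particular is a crude stand-in for real path planning.

```python
from dataclasses import dataclass
from math import dist


@dataclass(frozen=True)
class Candidate:
    name: str
    pick_point: tuple  # (x, y, z) in meters in the bin frame; z points up
    confidence: float  # detector score between 0 and 1


GRIPPER_CLEARANCE_M = 0.03  # hypothetical free space the gripper needs


def is_collision_free(candidate, others, clearance=GRIPPER_CLEARANCE_M):
    """Crude check: no other detected pick point lies within the clearance."""
    return all(
        dist(candidate.pick_point, other.pick_point) >= clearance
        for other in others
        if other is not candidate
    )


def choose_pick(candidates, min_confidence=0.8):
    """Pick the highest confident, unobstructed part, or None if there is none."""
    viable = [
        c for c in candidates
        if c.confidence >= min_confidence and is_collision_free(c, candidates)
    ]
    if not viable:
        return None
    return max(viable, key=lambda c: (c.pick_point[2], c.confidence))


def plan_path(candidate, approach_height=0.10):
    """Two waypoints: hover above the pick point, then descend straight down."""
    x, y, z = candidate.pick_point
    return [(x, y, z + approach_height), (x, y, z)]


# Invented detections standing in for the vision system's output.
DETECTIONS = [
    Candidate("a", (0.10, 0.10, 0.05), 0.95),
    Candidate("b", (0.30, 0.10, 0.12), 0.60),  # highest, but low confidence
    Candidate("c", (0.20, 0.30, 0.09), 0.90),
    Candidate("d", (0.21, 0.30, 0.10), 0.99),  # crowded by part "c"
]
BEST = choose_pick(DETECTIONS)
PATH = plan_path(BEST)
```

With these invented detections, part "a" is chosen: "b" falls below the confidence threshold, and "c" and "d" sit too close together for the assumed gripper clearance.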

A fully integrated vision and picking system moving objects for the online pharmacy Apotea in Sweden. Courtesy of RightHand Robotics.

“AI algorithms can be incorporated in each of these functions and, in many cases, can help to improve reliability and accuracy. AI may reduce the complexity of implementing a bin-picking operation,” he said.

Pretrained models

Soft Robotics equips its solutions with pretrained object models, allowing the robotic system to have an understanding of the object it is interacting with. These models can save weeks of programming time and allow faster deployment, according to Harvey.

RightHand Robotics, founded by a team that won a DARPA-sponsored robotic gripper competition, has developed an integrated vision and picking system that incorporates AI, according to Annie Bowlby, senior product manager. She noted that this broadly based AI approach frees the system from having to know every item that will be picked in advance, which allows the system to handle objects presented in any orientation, with varying lighting.

Certain industries, such as major manufacturers and online pharmacies, are likely to be early adopters of such broadly based AI bin picking because of the environments they operate in. “Those are two areas where the items are more standardized and have less variation in size and packaging, enabling a larger percentage of items to be picked immediately,” Bowlby said.

Soft Robotics’ Harvey pointed to other areas as likely early adopters due to the benefits that the technology offers. “The food industry is a strong candidate for the adoption of bin picking and other 3D applications using AI because, whether you look at protein or produce, food product is often consolidated into bulk throughout the process. Combining soft gripping with 3D vision and AI allows end users to automate complex tasks without implementing significant changes to their operation.”

Apera AI’s Petz said that objects that can change shape in unexpected ways, such as a bag of peas, are not presently suitable for AI bin picking. Objects that are highly variable in size and shape, such as a pile of leaves, are another currently impossible use case.

But other tasks and objects are candidates for this technology. One example Petz cited is the placement of unmachined parts into a computer numerical control machine, with these parts pulled automatically from a randomly filled bin.

Minimizing latency

Manufacturers are working to reduce training time for future uses. Training involves the use of large data sets, high network bandwidth, and significant computing power, but can tolerate latency, making it suitable for a cloud solution. The models that guide the robot, by contrast, are best run locally, due to the need to minimize latency and maximize robot responsiveness. Running these models requires substantially fewer computing, storage, and network resources than training them.

Advancements in cloud computing and improvements in neural networks are enabling training time reduction. Additionally, improvements in graphics processing units support faster system vision cycle times.

“The ideal future state would be very short training times — hours, not days — to support high-mix, low-volume manufacturing environments. If this were the case, companies could consider automation of processes that were previously not economical,” Petz remarked.

AI can extract useful information from less-than-ideal images, allowing fast and reliable bin picking to take place in situations in which it could not before. However, minimum vision requirements still exist. Petz summed these up by saying that if a human cannot see what is occurring, then an AI-enabled system probably will not be able to do so. This lack of visibility could be due to dim lighting or a blocked camera.

Vision can also play a critical role in the training data set. John Leonard, product marketing manager at 3D camera developer Zivid, said that the information in the data set is ultimately what the model depends on.

“The more you can make sure the data you are putting into your model is clean, accurate, and pertinent, then the model will generally perform well. So, in this regard, the 3D vision’s job is to supply point clouds that fulfill on those requirements,” he said.

Continued innovations in vision, computing, networking, and algorithms are expected to drive further progress in AI bin picking. Recent developments in automated bin picking should provoke interest from those who have overlooked it in the past, said FPE Automation’s Harrison.

“It would definitely be a very good idea to revisit that now,” she said.