A team at MIT has introduced a method that generates holograms almost instantly, using an artificial intelligence program that can run on a consumer-grade laptop. The approach has potential implications for VR and 3D printing.

Generating holograms by computer typically requires a supercomputer to run the necessary physics simulations. Even on a supercomputer the process is slow, and it often delivers subpar results. By contrast, the new method lets a consumer-grade computer generate real-time 3D holographic images in milliseconds.

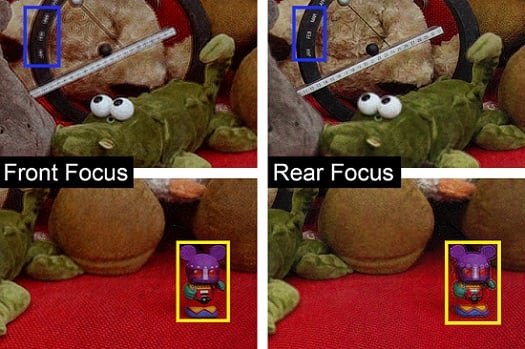

This figure shows the experimental demonstration of 2D and 3D holographic projection. The left photograph is focused on the mouse toy (in yellow box) closer to the camera, and the right photograph is focused on the perpetual desk calendar (in blue box). Courtesy of Liang Shi, Wojciech Matusik, et al.

“People previously thought that with existing consumer-grade hardware it was impossible to do real-time 3D holography computations,” said lead author Liang Shi, a Ph.D. student in MIT’s Department of Electrical Engineering and Computer Science. “It’s often been said that commercially available holographic displays will be around in 10 years, yet this statement has been around for decades.”

Shi believes the new approach, “tensor holography,” will bring the goal within reach.

The key difference between a photograph and a hologram is that a hologram encodes both the brightness and the phase of each lightwave. This allows a hologram to portray a more lifelike representation of a scene's parallax and depth. To optically capture a hologram, a laser beam is split: half illuminates the subject, and the other half serves as a reference for the lightwaves' phase. Interference between this reference and the light scattered by the subject records the phase, which is what produces the sense of depth. These optical holograms, developed in the mid-20th century, were static and therefore unable to capture motion, and each recording produced only a single hard copy.
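To illustrate why the reference beam lets a hologram keep phase information that a photograph discards, here is a minimal sketch. It assumes idealized monochromatic scalar waves and a unit-amplitude reference beam; the function names are hypothetical and purely illustrative, not part of the MIT work.

```python
import cmath
import math


def recorded_intensity(obj_amp: float, obj_phase: float,
                       ref_amp: float = 1.0, ref_phase: float = 0.0) -> float:
    """Intensity recorded on film where the object and reference waves overlap."""
    obj = obj_amp * cmath.exp(1j * obj_phase)
    ref = ref_amp * cmath.exp(1j * ref_phase)
    # |O + R|^2 = |O|^2 + |R|^2 + 2|O||R|cos(phase difference): the cross term carries the phase.
    return abs(obj + ref) ** 2


def photo_intensity(obj_amp: float, obj_phase: float) -> float:
    """A photograph records only |O|^2, so the phase drops out entirely."""
    return abs(obj_amp * cmath.exp(1j * obj_phase)) ** 2


if __name__ == "__main__":
    # Three object waves with identical brightness but different phases.
    for phase in (0.0, math.pi / 2, math.pi):
        print(f"phase={phase:.2f}  photo={photo_intensity(0.5, phase):.2f}  "
              f"hologram={recorded_intensity(0.5, phase):.2f}")
```

In this toy setup the "photo" value is 0.25 for all three waves, while the "hologram" value changes with phase (2.25, 1.25 and 0.25), which is the information a hologram uses to reconstruct depth.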

Computer-generated holography is designed to bypass these challenges by simulating the optical setup. Because each point in the scene lies at a different depth, however, the same operation cannot simply be applied to every point.

“That increases the complexity significantly,” Shi said.
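For a sense of why this is expensive, the sketch below uses the classic brute-force "point-source" approach (not the MIT method): every scene point emits a spherical wave whose curvature depends on that point's own depth, and every hologram pixel sums the contributions of every point. The wavelength, pixel pitch and function name are illustrative assumptions.

```python
import cmath
import math

# Illustrative parameters (assumptions, not values from the MIT work).
WAVELENGTH = 520e-9            # green laser, metres
PITCH = 8e-6                   # hologram pixel pitch, metres
K = 2 * math.pi / WAVELENGTH   # wavenumber


def point_source_hologram(points, width, height):
    """Naive point-source CGH: add a spherical wave from every scene point at every pixel.

    points: sequence of (x, y, z, amplitude), in metres, where z is the point's
    distance from the hologram plane. Returns a height x width grid of complex values.
    """
    field = [[0j] * width for _ in range(height)]
    for row in range(height):
        v = (row - height // 2) * PITCH
        for col in range(width):
            u = (col - width // 2) * PITCH
            total = 0j
            for x, y, z, amp in points:
                # Each point sits at its own depth z, so its wavefront curvature
                # differs; one shift-invariant kernel cannot serve the whole scene.
                r = math.sqrt((u - x) ** 2 + (v - y) ** 2 + z ** 2)
                total += amp * cmath.exp(1j * K * r) / r
            field[row][col] = total
    return field


if __name__ == "__main__":
    # Two points at different depths (5 cm and 8 cm from the hologram plane).
    scene = [(0.0, 0.0, 0.05, 1.0), (1e-4, -5e-5, 0.08, 0.7)]
    holo = point_source_hologram(scene, width=32, height=32)
    ops = 32 * 32 * len(scene)
    print(f"{len(holo)}x{len(holo[0])} hologram, {ops} wave evaluations")
```

The work grows as pixels times points: a 1920 x 1080 hologram of a scene with one million points would need roughly 2 x 10^12 wave evaluations, which helps explain why brute-force simulation is so slow.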

A supercomputer running these simulations can take several minutes to generate a single holographic image, and existing algorithms do not model occlusion with photorealistic precision.

The MIT team turned to deep learning, designing a convolutional neural network that uses a series of tensors to mimic the way humans process visual information. Training a neural network typically requires a large, high-quality data set, which the team had to assemble itself.

The custom database contained 4,000 pairs of computer-generated images, each matching a picture (with color and depth information for every pixel) to its corresponding hologram. The researchers built the database holograms from scenes with complex, varied shapes and colors, and with pixel depths distributed evenly from background to foreground.

To address occlusion, they also provided a new set of physics-based calculations.

Trained on this photorealistic data set, the algorithm optimized its own calculations, steadily improving its ability to generate holograms. The resulting network ran orders of magnitude faster than the physics-based calculations.

The method generates holograms in milliseconds from images that carry depth information, which typical computer-generated images already provide and which can also be captured with a multicamera setup or a lidar sensor. The compact tensor network requires less than 1 MB of memory.

“It’s negligible, considering the tens and hundreds of gigabytes available on the latest cellphone,” researcher Wojciech Matusik said.

The team believes the technology could make VR scenery more realistic and eliminate eyestrain and other side effects of long-term VR use. It could also find use in displays capable of modulating the phase of lightwaves.

“It’s a considerable leap that could completely change people’s attitudes toward holography,” Matusik said. “We feel like neural networks were born for this task.”

The work was published in Nature (www.doi.org/10.1038/s41586-020-03152-0).