RADHA NAGARAJAN, MARVELL TECHNOLOGY

The cloud. It evokes an ethereal, weightless environment in which problems get whisked away by a breeze.

In reality, the cloud consists of massive industrial buildings containing millions of dollars’ worth of equipment spread over thousands and, increasingly, millions of square feet. In Arizona, some communities are complaining that cloud data centers are draining their aquifers and consuming far more water than expected. In the U.K. and Ireland, the power requirements of data centers are crimping needed housing development. Even in regions such as northern Virginia, where local economies are tightly bound to data centers, conflicts between residents and the cloud are emerging.

With the rise of AI, these conflicts will escalate. AI models and data sets are growing exponentially in size, and developers are contemplating clusters with 32,000 GPUs, 2000 switches, 4000 servers, and 74,000 optical modules [1]. Such a system might require 45 MW of power capacity, or nearly 5× the peak load of the Empire State Building. Resource demands on this scale could also turn AI services into an economic high-wire act for many operators.
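The cluster figures above imply some useful ratios. The sketch below divides the quoted totals by the GPU count; attributing the entire 45 MW budget (servers, switches, optics, cooling) to the GPUs is a simplifying assumption made only for illustration, and the function name is hypothetical.

```python
# Back-of-envelope ratios for the AI cluster described above.
# Inputs come from the article; attributing all 45 MW to the GPUs is an assumption.
CLUSTER = {
    "gpus": 32_000,
    "switches": 2_000,
    "servers": 4_000,
    "optical_modules": 74_000,
    "power_mw": 45.0,
}

def per_gpu_ratios(cluster: dict) -> dict:
    """Express cluster totals per GPU (and GPUs per server)."""
    gpus = cluster["gpus"]
    return {
        "watts_per_gpu": cluster["power_mw"] * 1e6 / gpus,
        "gpus_per_server": gpus / cluster["servers"],
        "modules_per_gpu": cluster["optical_modules"] / gpus,
    }

if __name__ == "__main__":
    for name, value in per_gpu_ratios(CLUSTER).items():
        print(f"{name}: {value:.2f}")
```

Under that assumption, each GPU comes with roughly 1.4 kW of facility power, eight GPUs share a server, and there are more than two optical modules per GPU.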

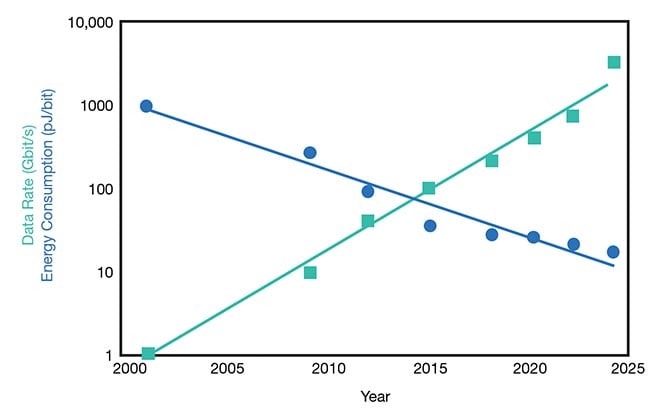

Performance is up and power is down: Over 20 years, the data rate of optical modules has increased 1000× while power per bit has decreased by >100×. Courtesy of Marvell Technology.

Armchair critics would say the answer is easy: No more data centers! Our daily lives, however, depend on these anonymous beige buildings.

Data centers will also play a pivotal role in sustainability. The World Economic Forum asserts that digital technology could cut global emissions by 15% by 2030, for example by fine-tuning power consumption in buildings, homes, and factories [2].

Optical technologies can become a gateway for turning these facilities into good neighbors and putting AI on a firm, sustainable footing. During the last 20 years, data centers have been integrating more and more optical technology into their facilities. Microsoft, for instance, runs more than 200 data centers connected by >175,000 miles of terrestrial and subsea optical links. Equinix, a data center and interconnection provider, has 392,000 interconnections. Streaming and other new services could not have grown as quickly as they have without the exponential improvements in efficiency and performance that data centers have achieved.

Now, new and upcoming technological advances are set to open a new chapter in this story. What follows is a brief tour of what the industry can expect as a result of this progress.

Design changes for the data center

Although data center workloads grew by nearly 10× in the past decade, total power consumption stayed remarkably flat [3]. One factor behind this success story was a focus on good housekeeping practices, such as unplugging unproductive “zombie” servers and dimming overhead lights.

Data center operators also became more adept at dealing with waste heat, while administrators let internal temperatures rise from ~70 °F to 80 °F and beyond so they could reduce air conditioning or replace it with ambient air cooling. This matters because air conditioning can account for nearly 50% of a data center’s load.

Optical technology can extend these gains further. Linking data centers by optical fiber, for example, could allow cloud providers to replace one massive 100,000-sq-ft facility with ten 10,000-sq-ft facilities, each about the size of a grocery store. Local grids and electrical distribution networks could then spread the electrical load across different cities over a wider geographic area, reducing the effect on housing and the need for additional grid investment. Overall power could be reduced as well: Instead of serving consumers’ video streams from data centers 1500 miles away, providers could cache the latest releases in urban corridors near end users’ locations.
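The caching idea can be illustrated with a minimal least-recently-used (LRU) cache at a hypothetical urban edge site: titles already held locally are served from the edge, and only misses travel over the long-haul link to the distant origin data center. The class name, capacity, and request trace are invented for illustration and do not describe any provider’s actual system.

```python
from collections import OrderedDict

class EdgeCache:
    """Hypothetical LRU cache at an urban edge site in front of a distant origin."""

    def __init__(self, capacity: int) -> None:
        self.capacity = capacity
        self.items = OrderedDict()  # title -> None, ordered from least to most recently used
        self.local_hits = 0
        self.origin_fetches = 0     # requests that had to cross the long-haul link

    def request(self, title: str) -> str:
        if title in self.items:
            self.items.move_to_end(title)  # mark as most recently used
            self.local_hits += 1
            return "edge"
        self.origin_fetches += 1
        self.items[title] = None
        if len(self.items) > self.capacity:
            self.items.popitem(last=False)  # evict the least recently used title
        return "origin"

if __name__ == "__main__":
    cache = EdgeCache(capacity=2)
    trace = ["new-release", "new-release", "older-title",
             "new-release", "niche-title", "older-title"]
    print([cache.request(t) for t in trace], cache.local_hits, cache.origin_fetches)
```

The more a region’s viewing concentrates on a few popular releases, the larger the share of requests that never leave the metro area.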

Also, small data hubs could be housed in closed strip malls, abandoned industrial sites, or shuttered offices in which power infrastructure still exists. What about water? Some companies are experimenting with using gray and/or tainted water for cooling.

Creating virtual hyperscalers out of a web of satellite data centers is practical because of the emergence of 400-Gbps and, more recently, 800-Gbps ZR and ZR+ optical modules, pluggable devices built to a specification managed by a standards body. Such devices convert the electrical signals passing between servers and switches inside data centers into optical signals that can travel over fiber. Compared with traditional optical equipment, these modules need less space, emit less heat, and use less power, and they reduce the overall cost of an optical link by 75%. In terms of footprint, they shrink the equipment from the size of a pizza box to a component the size of a mozzarella stick. As a result, they provide the bandwidth and capacity needed within the constraints of smaller satellite data centers.

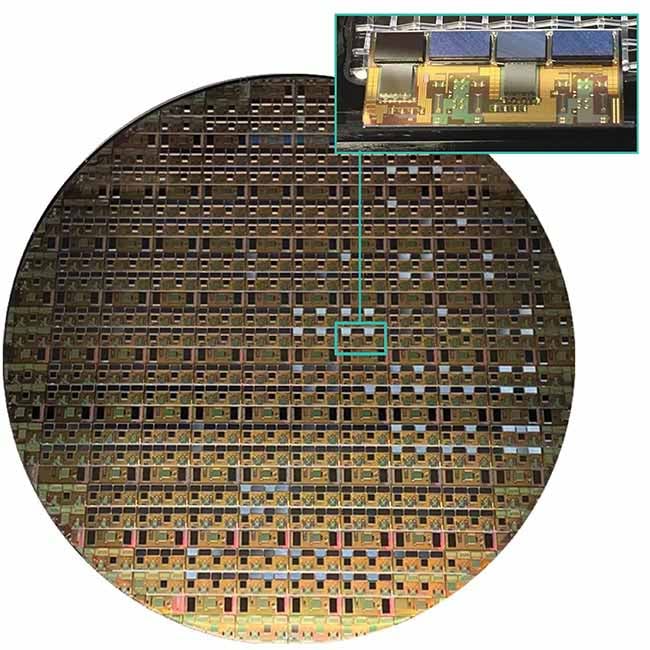

A silicon photonics device and its source wafer. Miniaturizing optical components and integrating them into the same piece of silicon can simultaneously improve reliability, performance, and power consumption. Courtesy of Marvell Technology.

While most of the large cloud providers have broadly deployed 400-Gbps ZR/ZR+ modules, 800-Gbps modules represent a tipping point at which pluggable modules will become the default option in most cases. The 400-Gbps modules cover ~50% of cloud providers’ use cases. The 800-Gbps modules can deliver full performance in metro networks ~500 km in diameter, and they can also run at 400 Gbps over longer distances. With 75% of Europeans and >80% of North Americans living in cities, 800-Gbps modules map well onto where the bandwidth is needed.
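The reach trade-off described above can be expressed as a simple selection rule. This sketch treats the ~500 km metro figure as the maximum link length at which an 800-Gbps module runs at full rate and falls back to 400 Gbps beyond it. The text gives no upper distance limit for the 400-Gbps mode, and real reach depends on the fiber plant, amplification, and the specific module, so the threshold handling and function name are assumptions.

```python
# Hypothetical rule of thumb based on the reach figures in the text.
FULL_RATE_REACH_KM = 500  # ~500 km metro network, treated here as a link-length limit

def zr_line_rate_gbps(link_km: float) -> int:
    """Line rate an 800G ZR/ZR+ module might run at over a link of this length."""
    if link_km <= 0:
        raise ValueError("link length must be positive")
    if link_km <= FULL_RATE_REACH_KM:
        return 800  # full performance within a metro-scale footprint
    return 400      # same module at a reduced rate for longer distances

if __name__ == "__main__":
    for km in (80, 450, 1200):
        print(km, "km ->", zr_line_rate_gbps(km), "Gbps")
```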

So, what made 800-Gbps ZR/ZR+ possible? The coherent digital signal processors (DSPs) for pluggable modules, the principal component inside them, have doubled in performance and capacity roughly every three years, so catching up to traditional equipment was only a matter of time. In a few years, 1.6T DSPs and modules will likewise appear.
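Doubling roughly every three years is an exponential trend, C(t) = C0 · 2^(t/3). The sketch below applies that formula; the fixed three-year period and the starting capacities are simplifying assumptions, so any dates it implies are indicative only.

```python
import math

DOUBLING_PERIOD_YEARS = 3.0  # "roughly every three years", per the text

def projected_capacity_gbps(start_gbps: float, years: float) -> float:
    """Capacity after `years`, assuming a fixed doubling period."""
    return start_gbps * 2 ** (years / DOUBLING_PERIOD_YEARS)

def years_to_reach(start_gbps: float, target_gbps: float) -> float:
    """Years needed to grow from start to target at the assumed doubling rate."""
    return DOUBLING_PERIOD_YEARS * math.log2(target_gbps / start_gbps)

if __name__ == "__main__":
    print(years_to_reach(800, 1600))        # one doubling: 800G -> 1.6T
    print(projected_capacity_gbps(400, 6))  # two doublings starting from 400G
```

One doubling period separates 800-Gbps and 1.6-Tbps DSPs, which is consistent with the “few years” expected above.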

Additional applications and use cases

While coherent DSPs handle the links between data centers, optical modules containing a different class of signal processor, PAM4 DSPs, bridge the distances inside data centers. These links currently range from 5 m to 2 km. For links <5 m, plain, passive, inexpensive copper cables can connect servers to racks or to each other.

Copper cables, however, run into a problem of basic physics: 100-Gbps signals degrade beyond 2 m, and 200-Gbps signals degrade beyond 1.5 m. Increasing the diameter of the cable can extend its reach and partly reverse this trend, but thicker cables are heavier and less flexible, which makes them impractical for the tight spaces between server racks.

Active optical cables (AOCs) and active electrical cables (AECs) reverse the trend. These cables contain PAM4 DSPs at each end that boost and recondition the signal.

In effect, the cable becomes a pair of modules joined by a length of copper or fiber. Cost and power can be reduced by integrating the amplifier and driver into the same piece of silicon. Although the DSPs add to the cost of the cable itself, active cables hold the promise of reducing costs overall, because more work and data transmission can be performed in roughly the same footprint as conventional cables. Initial AEC and AOC products have already been developed, and their deployment should begin in earnest during the next few years.
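Putting the distances from this section together gives a rough decision rule for links inside and between data centers. The passive-copper reach per lane rate (2 m at 100 Gbps, 1.5 m at 200 Gbps) and the 5 m and 2 km boundaries come from the text; choosing an active cable whenever a sub-5-m link exceeds passive reach, along with the function and constant names, are illustrative assumptions.

```python
# Passive copper reach by per-lane signaling rate (Gbps -> meters), from the text.
PASSIVE_COPPER_REACH_M = {100: 2.0, 200: 1.5}
SHORT_LINK_LIMIT_M = 5.0        # below this, links have traditionally used copper
INTRA_DC_OPTICS_LIMIT_M = 2000  # PAM4 optical modules cover roughly 5 m to 2 km

def choose_interconnect(length_m: float, lane_gbps: int) -> str:
    """Hypothetical media choice for a link of a given length and lane rate."""
    reach = PASSIVE_COPPER_REACH_M.get(lane_gbps)
    if reach is None:
        raise ValueError(f"no reach figure for {lane_gbps} Gbps lanes")
    if length_m <= reach:
        return "passive copper"
    if length_m < SHORT_LINK_LIMIT_M:
        # Assumption: beyond passive reach, use an AEC or AOC with a PAM4 DSP at each end.
        return "active cable (AEC/AOC)"
    if length_m <= INTRA_DC_OPTICS_LIMIT_M:
        return "PAM4 optical module"
    return "coherent module (between data centers)"

if __name__ == "__main__":
    for length, rate in [(1.0, 200), (1.8, 100), (1.8, 200), (30, 200), (40_000, 200)]:
        print(f"{length} m @ {rate}G/lane -> {choose_interconnect(length, rate)}")
```

Note how the same 1.8 m link flips from passive copper to an active cable when the lane rate doubles, which is the trend active cables are meant to reverse.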

Further in the future, optical technology will begin to displace wire connections inside data center equipment, linking separate chips within servers and switches. Compute Express Link (CXL) is an emerging technology that will allow individual chips to access more memory, and hence more data per cycle. CXL will also enable cloud designers to create massive pools of memory that can be shared by different processors, improving overall equipment utilization and productivity per watt.
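Why pooling improves utilization can be shown with a toy calculation. If each server must be provisioned for its own peak memory demand, total capacity is the sum of the individual peaks; a shared pool only has to cover the peak of the combined demand, which is smaller when the servers’ peaks do not coincide. The demand figures are invented for illustration, and the sketch models only this provisioning argument, not CXL itself.

```python
# Memory demand (GB) per server over four time intervals; hypothetical numbers.
DEMAND_GB = {
    "server_a": [512, 128, 128, 256],
    "server_b": [128, 512, 256, 128],
    "server_c": [256, 128, 512, 128],
}

def dedicated_capacity(demand: dict) -> int:
    """Each server is sized for its own peak."""
    return sum(max(series) for series in demand.values())

def pooled_capacity(demand: dict) -> int:
    """A shared pool is sized for the peak of the combined demand."""
    totals = [sum(values) for values in zip(*demand.values())]
    return max(totals)

if __name__ == "__main__":
    dedicated = dedicated_capacity(DEMAND_GB)
    pooled = pooled_capacity(DEMAND_GB)
    print(dedicated, pooled, f"{1 - pooled / dedicated:.0%} less memory")
```

Here the pool needs 896 GB instead of 1536 GB because the three servers peak at different times; if their peaks coincided, pooling would save nothing.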

This advance is poised to be one of the largest changes in core computing architecture in years. Although the first generations of CXL chips will use copper connections, they are expected to shift to optical technology comparatively quickly [4].

Some readers might have heard this argument before. Twenty years ago, visionaries predicted that optical technology was on the verge of permeating the data center rack. Copper turned out to be far more resilient than anticipated, and shrinking optical links to fit within the confines of a server rack or chassis brings its own challenges. Now, however, copper is running into far more formidable limits. It will not vanish overnight, but optical technology will steadily displace it.

Breakthroughs in core technology

Optical modules, such as those described above, have been among the unsung heroes of the cloud era.

But the fundamental core technology must also improve. Power per bit for optical modules has declined by >100× during the last 20 years, yet the number of bits has grown by ~1000×. As a result, the power consumed by the modules has still increased, by about 8×. For clouds, optical modules are becoming a megawatt-size issue.
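The arithmetic behind this paragraph: if the bit rate grows by a factor R while power per bit falls by a factor E, power grows by R/E. With R ≈ 1000 and E of exactly 100, the ratio would be 10×; the 8× figure corresponds to a per-bit improvement of about 125×, which is consistent with “>100×”. The helper names are hypothetical.

```python
def power_growth(rate_growth: float, per_bit_improvement: float) -> float:
    """Factor by which power grows when bits grow faster than efficiency improves."""
    return rate_growth / per_bit_improvement

def implied_improvement(rate_growth: float, power_growth_factor: float) -> float:
    """Per-bit improvement that would produce a given overall power growth."""
    return rate_growth / power_growth_factor

if __name__ == "__main__":
    print(power_growth(1000, 100))       # power growth if per-bit power fell exactly 100x
    print(implied_improvement(1000, 8))  # per-bit gain implied by an 8x power increase
```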

One area of R&D that shows particular promise is silicon photonics. Producing multiplexers, waveguides, photodetectors, and other optical components with conventional silicon manufacturing techniques enables hundreds of formerly discrete components to be combined into a single, comparatively small piece of silicon. Advanced 2.5D and 3D packaging being developed for CPUs and AI processors can also be employed to combine several silicon photonics devices to further increase performance.

The benefits of this technology, as you might imagine, are far reaching. Cost, space, and power all decline. At the same time, optics companies can leverage R&D from other segments of the industry. Meanwhile, verification and testing can be streamlined.

Historically, the availability of silicon components has also jump-started innovation among equipment designers. One of the big debates in the industry is whether the light source should be integrated into the same piece of silicon as the other components or placed on a separate device, connected over a short-reach fiber, to better dissipate heat and lower the cost of repairs. It is a question best settled by seeing what vendors in an open ecosystem can devise.

Silicon photonics is already used in coherent ZR/ZR+ modules for long-distance connections. In the not-too-distant future, silicon photonics devices will also appear within data center PAM4 modules.

Likewise, expect to see experimentation with co-packaged optics and linear pluggable optics, in which smaller, less powerful DSPs embedded in a network switch would eliminate the need for a second DSP in the module. Eliminating the module DSP could reduce the power needed for copper-to-optical connectivity by 20% to 50%. On the other hand, the exacting requirements of both technologies have proved difficult to meet. During the next three to five years, the industry will work out the designs and processes needed to bring these concepts to mass production.
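To put the 20% to 50% range in context, the sketch below applies it to the 74,000 optical modules of the AI cluster mentioned at the start. The 15 W per-module figure is an assumed round number, not a value from the article, and applying the savings to whole-module power is a simplification.

```python
MODULES = 74_000         # optical modules in the cluster described earlier
WATTS_PER_MODULE = 15.0  # assumption: illustrative round number, not from the article

def fleet_power_mw(modules: int, watts_each: float) -> float:
    """Total optics power in megawatts."""
    return modules * watts_each / 1e6

def savings_mw(total_mw: float, fraction: float) -> float:
    """Power saved if a given fraction of optics power is eliminated."""
    if not 0.0 <= fraction <= 1.0:
        raise ValueError("fraction must be between 0 and 1")
    return total_mw * fraction

if __name__ == "__main__":
    total = fleet_power_mw(MODULES, WATTS_PER_MODULE)
    print(f"optics: {total:.2f} MW")
    for fraction in (0.20, 0.50):
        print(f"{fraction:.0%} saving -> {savings_mw(total, fraction):.2f} MW")
```

Even under these assumptions, the optics for a single cluster draw on the order of a megawatt, so a 20% to 50% cut amounts to hundreds of kilowatts.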

Power consumption is the signature problem for our industry for the foreseeable future. Simply put, we need to do far more work with the same, if not fewer, resources. There is no single silver bullet. Fortunately, we have a number of incremental, practical steps to follow, as well as a reasonably clear roadmap for further development.

Meet the author

Radha Nagarajan, Ph.D., is the senior vice president and CTO of connectivity at Marvell Technology. A leader in the domain of large-scale photonic integrated circuits, he has been awarded more than 235 U.S. patents and has received numerous awards. He serves as a visiting professor at the National University of Singapore.

References

1. IEEE. How Big Will AI Models Get? February 9, 2023.

2. World Economic Forum. Digital technology can cut global emissions by 15%. Here’s how. January 15, 2019.

3. IEA. Global trends in internet traffic, data centres workloads and data centre energy use, 2010-2020. November 4, 2021.

4. Embedded. Are we ready for large-scale AI workloads? May 16, 2023.