Teledyne DALSA

A Teledyne Technologies Co.

Machine Vision OEM Components

605 McMurray Rd.

Waterloo, ON N2V 2E9

Canada

Phone: +1 519-886-6000

Fax: +1 519-886-8023

Detecting Dents and Damage in Aluminum Cans Using AI Computer Vision

Vision Spectra

Winter 2023

Traditional rule-based inspection methods for difficult inspection tasks are painstaking to set up and maintain, given the number of parameters that affect the required sensitivity and accuracy of a vision system.

SZYMON CHAWARSKI, TELEDYNE DALSA

Predictive artificial intelligence (AI) and deep learning are rapidly being adopted for computer vision tasks in automation and manufacturing. Applications that were previously impossible with traditional computer vision tools can now be developed using AI. For many applications, AI offers manufacturers the tools to solve challenging problems without the need for a deep background in vision systems programming.

A common computer vision inspection challenge is detecting defects in metal parts. A real-world example is checking for dents in aluminum beverage cans. Dents on the outside of a can may compromise the inner coating, allowing beer or soda to come into contact with exposed aluminum and giving the beverage a bad taste. Currently, about 100 billion aluminum beverage cans are produced every year in the U.S., according to IndustryARC. That is roughly one can per American per day, making accurate inspection a prominent issue for beverage manufacturers that want to maintain the quality of their brand.

An inspection of aluminum cans on a production line using a Teledyne DALSA Genie Nano camera and ring light. Courtesy of Teledyne DALSA.

Using a vision system equipped with a single overhead camera, a wide-angle lens, and a ring light, it is possible to capture an image of the full inside surface of a can and use computer vision to determine whether the can is damaged.

Ideally, this type of inspection should be performed as late in the production process as possible, just before filling the can, to catch any damage that can occur on the production line. The final step before the cans are filled entails a washing process that leaves the cans variably wet; some will have water droplets of varying size while others will appear dry. This variability makes it a very challenging application to solve with traditional vision inspection methods.

Rule-based programming approach

The traditional approach to solving this problem is to design and use a rule-based image inspection algorithm. Dents can be discovered by passing the image through a stack of preprocessors, measuring pixels in regions of interest of their outputs, and checking whether the measurements fall outside normal ranges. The parameters of the preprocessors could be tuned to set the sensitivity of the inspection, and limits could be applied to the measurements to classify parts as good or bad.
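To make the idea concrete, here is a minimal sketch of such a pipeline in Python with NumPy. It is not the inspection used in this application: the single preprocessor (a box blur), the region of interest, and every limit in the hypothetical `PARAMS` dictionary are illustrative assumptions. A real system would chain many more stages, each with its own knobs.

```python
import numpy as np

# Hypothetical, hand-tuned parameters: every name and value here is an assumption.
PARAMS = {
    "blur_size": 3,                         # box-filter width in pixels
    "roi": (slice(20, 80), slice(20, 80)),  # region of interest (rows, cols)
    "mean_range": (0.2, 0.8),               # allowed mean intensity in the ROI
    "std_max": 0.15,                        # allowed intensity spread in the ROI
}


def box_blur(img: np.ndarray, size: int) -> np.ndarray:
    """Separable mean filter, standing in for one stage of a preprocessor stack."""
    kernel = np.ones(size) / size
    rows = np.apply_along_axis(lambda r: np.convolve(r, kernel, mode="same"), 1, img)
    return np.apply_along_axis(lambda c: np.convolve(c, kernel, mode="same"), 0, rows)


def inspect(img: np.ndarray, p: dict = PARAMS) -> tuple[bool, dict]:
    """Measure the ROI of the preprocessed image and compare it to fixed limits."""
    smoothed = box_blur(img.astype(float), p["blur_size"])
    region = smoothed[p["roi"]]
    mean, std = float(region.mean()), float(region.std())
    lo, hi = p["mean_range"]
    ok = lo <= mean <= hi and std <= p["std_max"]  # good part only if all limits pass
    return ok, {"mean": mean, "std": std}
```

Every entry in `PARAMS` has to be chosen and maintained by hand, which is the tuning burden the following sections describe.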

Dent damage to a single can, as seen from the outside, inside, and from the camera image. Courtesy of Teledyne DALSA.

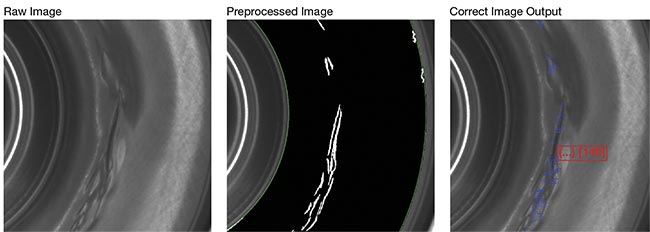

(From left) These images show visible ripple damage on the interior surface of a dry can, then the image preprocessor stack, and the measured edge count and location output using traditional rule-based programming. This rule-based programming approach can be effective, but it must take many parameters into account; variations, such as water droplets, could be mistaken for defects. Courtesy of Teledyne DALSA.

A rule-based inspection algorithm falsely identifies water droplets as damage on a good sample (left). A rule-based inspection algorithm falsely identifies brightness variation as damage on a good sample (right). Courtesy of Teledyne DALSA.

Applying this traditional approach highlights the areas of the image that contain edges or ripples. It is then possible to count the edges and mark their locations. Based on how many edges are present and how tightly they are grouped together, it could be possible to program a set of rules that reasonably determines whether a can is damaged.
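One way such an edge-count rule could be written is sketched below, assuming a grayscale image scaled to [0, 1]. `np.gradient` stands in for whatever edge preprocessor a real system would use, and the threshold, cell size, and limits are hypothetical. Note that a dense cluster of water droplets also produces many tightly grouped edges, which is exactly how such a rule can mistake droplets for damage.

```python
import numpy as np


def edge_map(img: np.ndarray, grad_thresh: float) -> np.ndarray:
    """Boolean mask of pixels whose gradient magnitude exceeds grad_thresh."""
    gy, gx = np.gradient(img.astype(float))
    return np.hypot(gx, gy) > grad_thresh


def max_edge_density(edges: np.ndarray, cell: int) -> float:
    """Highest fraction of edge pixels in any non-overlapping cell x cell tile."""
    h, w = edges.shape
    best = 0.0
    for r in range(0, h - cell + 1, cell):
        for c in range(0, w - cell + 1, cell):
            best = max(best, float(edges[r:r + cell, c:c + cell].mean()))
    return best


def is_damaged(img, grad_thresh=0.2, min_edges=50, cell=16, density_limit=0.3):
    """Hypothetical rule: enough edges overall AND one tightly grouped cluster."""
    edges = edge_map(img, grad_thresh)
    count = int(edges.sum())
    density = max_edge_density(edges, cell)
    return count >= min_edges and density >= density_limit, count, density


# Synthetic "ripple": bands two pixels wide inside a 32 x 32 patch of a dark image
can = np.zeros((128, 128))
can[32:64, 32:64] = ((np.arange(32) // 2) % 2 == 0)[:, None]
print(is_damaged(can)[0])  # True
```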

Multiple parameters

Developing and deploying an approach like this is a painstaking process that requires experience and experimentation to determine the best image processor stack and the ideal tools to use. Enabling this method to work in production requires many different examples in varying orientations to be examined and the parameters and limits to be tuned manually. This difficult inspection could easily have more than 50 key parameters that will change the sensitivity or accuracy of the vision system under varying conditions. Developing and maintaining a vision system with this number of parameters is one of the main roadblocks preventing the wider adoption of computer vision systems in the field.

Ultimately, it was possible to create a reasonably accurate system that could identify damage and dents in dry cans, but this system would fail to perform when water droplets were present. Water droplets add “noise” to the image and are random in size, location, and grouping. The traditional system could not be programmed to filter out the shapes and edges created by water droplets while maintaining any level of accuracy in identifying damage.

A typical AI development loop. A data gathering phase in which samples are collected, imaged, and manually categorized. A model training phase in which the model is developed. A validation phase in which the model accuracy is tested and checked for production readiness. A deployment phase in which the model is tested in real-world conditions. These phases are often performed in a loop with tweaks and improvements in each cycle until the model is performing with a high degree of accuracy. Courtesy of Teledyne DALSA.

In a factory setting, system designers often need to consider changing conditions due to maintenance, process changes, damage, and other factors that are common to a manufacturing environment. A complex rule-based vision system may not fare well under changing conditions. Even slight changes in camera orientation, distance, and lighting may have an adverse effect on the system, leaving factory maintenance personnel responsible for fine-tuning and fixing the vision systems to keep up with the changing conditions. An experienced vision system programmer can define rules to try to deal with these factors, but the growing complexity of vision system programs makes long-term support difficult.

AI approach

Currently, predictive AI and deep learning can be used for computer vision tasks. AI differs from traditional methods because the programmer does not need to define rules, measurements, and parameters for every application scenario and defect type. AI models are created by capturing image samples and using these images to train a model to accurately recognize features through a process called deep learning. When this process emerged, building a model required a great deal of programming and data analysis experience, but the recent rapid growth in software packaged for nontechnical users has made deep learning very easy for manufacturers to adopt. Often the model creation loop requires no programming at all and is driven through a graphical user interface. Users only need to capture a variety of images and label them as good or bad. Modern software packages handle the deep learning itself and provide easily deployable AI models that can be used in production.

An AI classification model was created to accurately recognize the visible patterns created by damage in these challenging conditions, without mistaking water droplets on good cans for damage. Classification is a type of deep learning model that can be trained to assign an image to one or more categories. It is a simple and effective approach that is very useful in factory applications. Using examples of parts imaged in a wide variety of difficult conditions, the model can be trained to make the correct classification very accurately.
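The last step of a typical classifier is small enough to show. The sketch below assumes the trained network (not shown) outputs one raw score, or logit, per class, and converts those scores into a label with softmax and argmax. This is the standard final stage of many classification networks, but how any particular vision product implements it is an assumption here; `classify` and `CLASSES` are hypothetical names.

```python
import numpy as np

CLASSES = ("normal", "damaged")  # the two classes used in this application


def softmax(logits: np.ndarray) -> np.ndarray:
    """Convert raw model scores into probabilities that sum to 1."""
    z = logits - np.max(logits)  # subtract the max for numerical stability
    e = np.exp(z)
    return e / e.sum()


def classify(logits) -> tuple[str, float]:
    """Pick the most probable class and report its probability."""
    probs = softmax(np.asarray(logits, dtype=float))
    idx = int(np.argmax(probs))
    return CLASSES[idx], float(probs[idx])


print(classify([2.0, -1.0]))  # ('normal', 0.952...)
```

The reported probability can also be compared against a threshold so that borderline cans are flagged for review rather than silently passed; that policy is a design choice, not something the article specifies.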

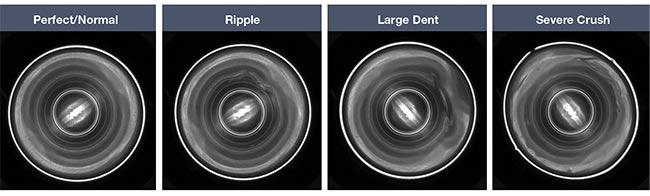

The range of aluminum can defects that are present in a production environment. Courtesy of Teledyne DALSA.

It is important to note that training AI models still involves work; AI does not learn by itself. Training a model requires human input, but it calls for a different, more widely accessible skill set. Rather than detailed knowledge of computer vision and image processing, AI development requires knowledge of the product being inspected and the ability to identify and label images as good or bad. This can be done by anyone who understands what a defect looks like, such as quality engineers, maintenance personnel, or operators.

Building data sets

During the data gathering phase of the AI development loop, images are collected and labeled based on the characteristics of the part. Together, these labeled images form a data set, and the development of a good data set is the most important factor in determining the success of an application. The key to building a good data set is understanding the full spectrum of variations present in a production process and defining how this spectrum splits into good and bad parts. The model used in this application was trained with two classes, normal and damaged, corresponding to good and bad. The damaged class contained all the defect types, including ripples, large dents, and severe crushes. It is important to plan data gathering carefully and design a data set that is truly representative of the conditions being tested for, including marginal and extreme cases.
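A simple way to act on this advice is to keep fine-grained labels while gathering data, check that every condition and defect type is covered, and only then collapse the labels into the two training classes. The sketch below assumes hypothetical label names (`ripple`, `large_dent`, `severe_crush`), the two conditions named in the article (dry and wet), and an arbitrary minimum count per combination.

```python
from collections import Counter

# Hypothetical mapping from fine-grained defect labels to the two training classes.
CLASS_OF = {
    "none": "normal",
    "ripple": "damaged",
    "large_dent": "damaged",
    "severe_crush": "damaged",
}
CONDITIONS = ("dry", "wet")


def coverage_gaps(samples, min_count=20):
    """Return (condition, defect) pairs with fewer than min_count images.

    samples: iterable of (condition, defect_label) tuples, one per image.
    """
    counts = Counter(samples)
    return [
        (cond, defect)
        for cond in CONDITIONS
        for defect in CLASS_OF
        if counts[(cond, defect)] < min_count
    ]


def to_training_labels(samples):
    """Collapse fine-grained defect labels into the two classes the model learns."""
    return [CLASS_OF[defect] for _, defect in samples]
```

For example, a data set with plenty of dry ripples but only a handful of wet ones would report `("wet", "ripple")` as a gap to fill before training.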

One of the benefits of modern AI tools is that there has been a reduction in the amount of data required to train an AI system. Many AI tools use pretrained models that have been developed using generalized data sets, so the end user only needs a fraction of the data required to perform the final training pass of the model. For most applications, millions of images are no longer needed. A good baseline to aim for is 1000 images to ensure there is enough data and representative variability for the training and validation.
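One common form of this final training pass, sketched below under stated assumptions, is to keep the pretrained network frozen and train only a small classification head on the feature vectors it produces. Here the features are assumed to have been extracted already by a hypothetical frozen backbone (not shown), and the head is a plain logistic regression fitted with gradient descent in NumPy. Commercial trainers may fine-tune more layers; this illustrates why a head with few parameters can be trained from far fewer images.

```python
import numpy as np


def train_head(features, labels, lr=0.1, epochs=500):
    """Fit a logistic-regression head on frozen backbone features.

    features: (n_images, n_features) array, assumed to come from a frozen
              pretrained backbone that is not shown here.
    labels:   (n_images,) array of 0 (normal) / 1 (damaged).
    """
    n, d = features.shape
    w, b = np.zeros(d), 0.0
    for _ in range(epochs):
        p = 1.0 / (1.0 + np.exp(-(features @ w + b)))  # sigmoid probabilities
        grad = p - labels                              # d(log-loss)/d(logit)
        w -= lr * features.T @ grad / n
        b -= lr * grad.mean()
    return w, b


def predict(features, w, b):
    """Label 1 (damaged) when the logit is positive, else 0 (normal)."""
    return (features @ w + b > 0).astype(int)
```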

This aluminum can inspection model was trained using as few as 100 sample parts, imaging them in varying dry and wet conditions, while also introducing rotation and perspective shifts to simulate the production environment for a total of 1500 images. Approximately half of the images were quarantined from training and used for system performance validation.
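The article does not say how the held-out half was chosen. A common precaution, assumed here, is to quarantine whole parts rather than individual images: with 1500 images of 100 parts, each can appears in about 15 near-identical images, and splitting image by image would let the same can leak into both training and validation. The sketch below uses hypothetical image and part ids.

```python
import random
from collections import defaultdict


def split_by_part(image_parts, holdout_fraction=0.5, seed=0):
    """Quarantine whole parts (not single images) for validation.

    image_parts: dict mapping image_id -> part_id.
    Returns (train_ids, val_ids) as sorted lists of image ids.
    """
    by_part = defaultdict(list)
    for image_id, part_id in image_parts.items():
        by_part[part_id].append(image_id)

    parts = sorted(by_part)
    random.Random(seed).shuffle(parts)  # reproducible shuffle of part ids
    n_val = round(len(parts) * holdout_fraction)
    val_parts = set(parts[:n_val])

    train_ids, val_ids = [], []
    for part_id, ids in by_part.items():
        (val_ids if part_id in val_parts else train_ids).extend(ids)
    return sorted(train_ids), sorted(val_ids)
```

With 100 parts imaged 15 times each and `holdout_fraction=0.5`, this yields 750 training and 750 validation images, and no part appears in both sets.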

Training the model

Training an AI model is dependent on the technology, hardware, and pipeline used. It is often performed offline from the factory environment and requires a PC with a strong GPU. Training a model requires the developer to choose an architecture and tune certain training options referred to as hyper-parameters. There is no one solution for choosing architectures and hyper-parameters, so it requires experimentation.

With modern AI trainer software packages, this process is normally optimized and easy to set up. Training times have been accelerated to the point where it is possible to do quick training loops of 10 to 30 min. Since the training loop has been accelerated, a developer can improve the model in a few hours. There are also software options in which the AI trainer will perform an automatic optimization, referred to as a hyper-parameter sweep, and find the best combination of parameters through heuristic methods.
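A hyper-parameter sweep can be as simple as random search, sketched below. The search space and the `train_and_score` callable (which would run one training job and return a validation score) are hypothetical; commercial trainers may use other heuristics, such as Bayesian optimization, which the article does not specify. Because each trial is a full training loop, `n_trials` sets the time budget: at 10 to 30 min per loop, 10 trials take roughly 100 to 300 min, in line with improving a model in a few hours.

```python
import itertools
import random

# Hypothetical search space; real trainers expose their own options.
SEARCH_SPACE = {
    "learning_rate": [1e-4, 3e-4, 1e-3, 3e-3],
    "batch_size": [16, 32, 64],
    "epochs": [10, 20],
}


def sweep(train_and_score, space=SEARCH_SPACE, n_trials=10, seed=0):
    """Random search: try n_trials configurations and keep the best score.

    train_and_score: callable taking a config dict and returning a score
    (higher is better), e.g. validation accuracy. It is assumed, not provided.
    """
    keys = list(space)
    grid = [dict(zip(keys, values)) for values in itertools.product(*space.values())]
    trials = random.Random(seed).sample(grid, min(n_trials, len(grid)))
    best_cfg, best_score = None, float("-inf")
    for cfg in trials:
        score = train_and_score(cfg)
        if score > best_score:
            best_cfg, best_score = cfg, score
    return best_cfg, best_score
```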

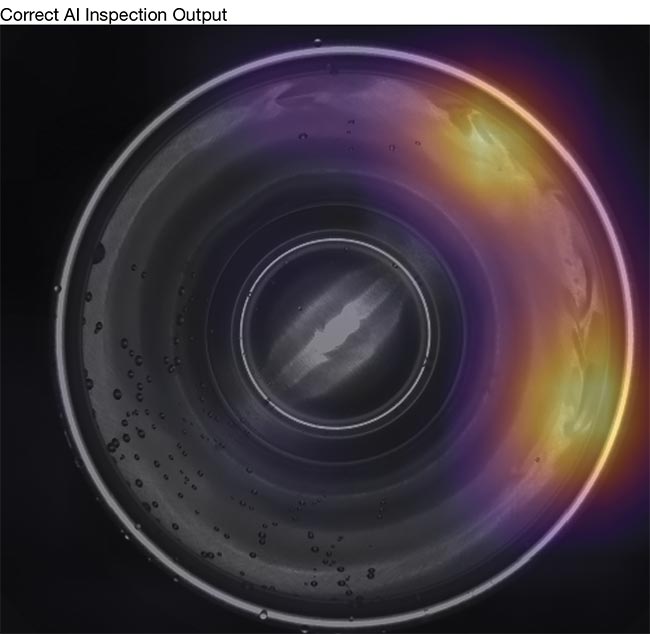

An AI model heat map output correctly highlighting damaged areas and ignoring water droplets. Courtesy of Teledyne DALSA.

With a carefully prepared data set and diligent training time, it was possible to develop a model that performed at accuracies of better than 99.9% on a wide range of damage in wet or dry conditions. A large portion of the data was quarantined from the training process and saved for validation to give the model some unique data on which to test performance. The model was validated against this unique data set to ensure that the performance would generalize to the new parts in production.
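The arithmetic behind such a figure is worth spelling out. If the validation set holds roughly half of the 1500 images, about 750, a single misclassification already gives 749/750, or about 99.87%, so accuracy better than 99.9% on a set of that size means no errors at all. The sketch below computes accuracy together with the two error types that matter on a line (escapes and false rejects); the function names and labels are hypothetical.

```python
import math
from fractions import Fraction


def validation_report(y_true, y_pred, positive="damaged"):
    """Accuracy plus the two error types that matter on a production line."""
    pairs = list(zip(y_true, y_pred))
    n = len(pairs)
    errors = sum(t != p for t, p in pairs)
    escapes = sum(t == positive and p != positive for t, p in pairs)        # bad can passed
    false_rejects = sum(t != positive and p == positive for t, p in pairs)  # good can rejected
    return {"n": n, "accuracy": (n - errors) / n,
            "escapes": escapes, "false_rejects": false_rejects}


def max_errors_for_accuracy(n, target="0.999"):
    """Largest error count that still gives accuracy strictly above target."""
    t = Fraction(str(target))  # exact arithmetic avoids float rounding at the boundary
    return math.ceil(n * (1 - t)) - 1
```

For instance, `max_errors_for_accuracy(750)` is 0, while a 5000-image validation set could absorb up to 4 errors and still exceed 99.9%.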

Depending on the GPU hardware used for deployment, this model can run inference on an image in 5 to 20 ms, which is fast enough to support applications of up to 50 parts per second. This was all achieved in a very short time frame, without coding, and resulted in a fast, portable model ready to be tested in a factory environment.
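The 50 parts-per-second figure follows from the slowest quoted latency: 1000 ms / 20 ms = 50. The sketch below makes that explicit under simple assumptions: one image per can, images inferred one after another, and no time spent on acquisition or I/O. Batching, multiple GPUs, or several images per part would change the result; `images_per_part` is a hypothetical parameter.

```python
def max_parts_per_second(latency_ms: float, images_per_part: int = 1) -> float:
    """Upper bound on line rate if images are inferred one after another."""
    return 1000.0 / (latency_ms * images_per_part)


for latency in (5, 20):
    print(f"{latency} ms -> {max_parts_per_second(latency):.0f} parts/s")
# 5 ms -> 200 parts/s
# 20 ms -> 50 parts/s
```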

Deploying AI into the factory

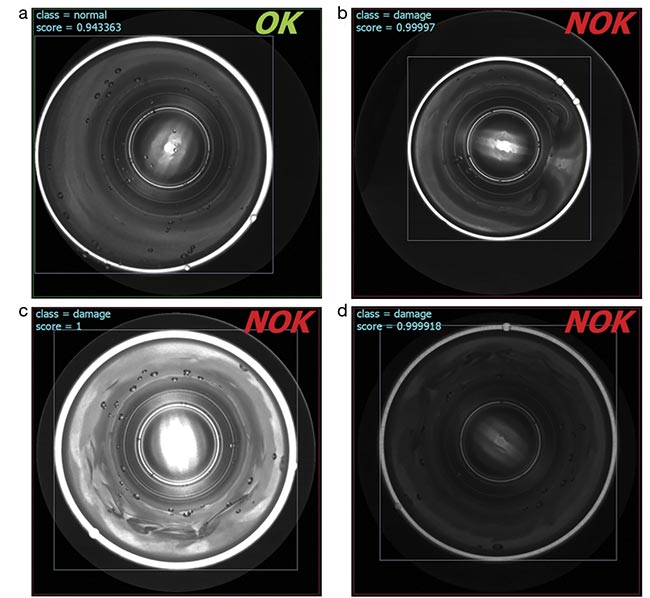

When the AI model was deployed into the field, it was possible to test the performance under a wide range of challenging conditions. Most importantly, the model maintained perfect accuracy regardless of whether the cans were wet or dry.

The images show results from an AI classification model showing a good part correctly identified with a large degree of perspective shift (a); a bad part correctly identified with scale shift (b); a bad part correctly identified with an overexposed image (c); and a bad part correctly identified with an underexposed image (d). NOK: not OK. Courtesy of Teledyne DALSA.

Additionally, the model was able to handle changes in brightness, scale, and alignment without any adjustment to parameters or settings. This is a very powerful feature of AI for long-term support: once the models are trained and deployed correctly, they can function without constant fine-tuning. AI model training and development has been around for many years, but many of the software packages and open-source tools have been lacking the features required to support AI in manufacturing and automation. To be useful in a factory environment, vision systems need to communicate with input/output devices, factory programmable logic controllers, and industrial robots. They also need clean, understandable operator interfaces to communicate performance and results to the operators who are running the manufacturing line. One of the benefits of modern industrial vision solutions is that they have integrated these features with AI models, making deployments easier to support.

Additional applications

This is just one example of what can be accomplished with modern industrial AI computer vision. Across all industries that have previously used computer vision tools, AI solutions are now resolving problems that would have been impossible to solve in the past. Examples include identifying scratches, chips, holes, or discolorations in materials with varying surface finishes in packaging, automotive, and battery industries. Additional applications include identifying PCB defects and misplaced components for electronics, sorting and counting overlapping and randomly placed objects in logistics, identifying different types of objects for waste management, and checking quality in food production applications.

The aluminum can inspection is an excellent example of where AI offers an improvement over traditional computer vision tools. Conventional approaches for vision inspection can often lack accuracy or be difficult to support long-term, especially in complex applications. AI-driven tools can offer easy-to-deploy solutions to these challenging tasks and lower the barrier to entry into computer vision in manufacturing.

Meet the author

Szymon Chawarski is a product line manager at Teledyne DALSA with 15 years of experience in computer vision, automation, and product design. He leads new product development for industrial vision systems and smart cameras, blending hardware design and software development to create user-friendly and cost-effective machine vision products. He has recently focused on applying AI and deep learning to solve challenging real-time inspection applications.