The future of augmented, virtual, and mixed reality hinges on waveguide designs that can deliver user comfort, high functionality, and low cost.

JAMES SCHLETT, CONTRIBUTING EDITOR

Here is something David Goldman, vice president of marketing for Lumus, never expected to come into the field of view of waveguides: wine growers using augmented reality (AR) glasses to teach unskilled workers exactly where to prune grapevines, leading to productivity gains of up to fivefold.

Though the adoption of AR glasses in this enterprise application and others is worthy of a toast, the technology still has a long road to broad consumer adoption. Alluding to the Gartner Hype Cycle, Andreas Georgiou, a Microsoft researcher and optical engineer with Reality Optics in Cambridge, England, said the AR industry has transitioned from the “Peak of Inflated Expectations” to the “Trough of Disillusionment.”

Apple’s Vision Pro, expected in early 2024, will run what Apple calls the world’s first spatial operating system, blending digital content with the physical world. Courtesy of Apple.

This arguably applies to all extended reality headgear, which encompasses AR as well as virtual reality (VR) and mixed reality (MR) devices. In all cases, the design of waveguides — the thin pieces of glass or plastic that redirect light projected onto them — will play an important role in transitioning these technologies to the next stage of Gartner’s Hype Cycle: the “Slope of Enlightenment.”

Today, several companies are taking different approaches to designing waveguides that offer the optimal size, weight, cost, and efficiency for the extended reality market. These approaches use either reflective or diffractive designs, plastic or glass materials, and manufacturing tools ranging from standard optics machinery to mechanically ruled blazed gratings and e-beam lithography.

It will be the big players, such as Microsoft, Apple, or Google, and ultimately consumers that determine which headset or smart glasses design will succeed.

AR glasses for specific enterprise applications prioritize functionality over low weight, though user comfort is always a consideration. DigiLens designed its plastic waveguides for its ARGO smart glasses for enterprise applications. Courtesy of DigiLens.

“The consensus is that the big players are minimizing their costs and risks while waiting for the market to be ready in terms of technology and applications,” said Georgiou. “At the same time, there is an explosion of demand for materials, systems, electronics, optics, and anything that could help the large players to succeed. Much of this activity will translate into new products or devices in the next two to five years.”

Optimizing user experience

The expected release in early 2024 of Apple’s Vision Pro headset is what Georgiou predicted would be the “main breakthrough for mixed reality,” which allows digital elements to interact with the physical ones they overlay in a headset user’s view. In contrast, AR enables digital elements to overlay the physical world, but without the interaction supported by MR.

The release of Vision Pro will influence waveguide development by redefining the boundaries of MR and VR products. Apple describes its technology as a “spatial computer that seamlessly blends digital content with the physical world, while allowing users to stay present and connected to others.”

Vergence-accommodation conflict (VAC) occurs when the human brain receives mismatching visual cues between the apparent distance of a virtual object and the actual distance required for the eyes to focus on that object. Existing waveguide combiners can struggle to create 3D images if incoming light follows paths of different length. The effect is that users see multiple partially overlapping copies of the input image at random distances (top). VividQ’s 3D waveguide combiner design can adapt to these diverging rays to display images correctly and minimize VAC (bottom). Courtesy of VividQ.

Though it displays an enhanced version of the user’s environment, Vision Pro is by most industry definitions a VR headset. Its display is largely opaque, and the user’s physical space is projected onto it rather than seen directly. The primary difference between Vision Pro and true AR gear is the degree to which the wearer of a transparent AR device can interact with their physical world, said Alastair Grant, senior vice president of optical engineering for DigiLens. “In particular, a large part of human communication is unspoken, direct eye contact.”

Georgiou said Vision Pro’s release also makes cost a secondary metric, with the priority resting squarely on the quality of user experience.

When it comes to waveguide design over the next few years, Georgiou said he is not expecting any breakthroughs; instead, there will be slow and steady improvements in wafer flatness, the refractive index quality of gratings, and manufacturing costs. “The best that can happen is for Apple to release a product that costs $5000 to $6000 so the other manufacturers realize that they can concentrate on improving the headset’s user experience [rather than] reducing the cost,” he said.

One major influence on user experience is the comfort level of extended reality gear, for which weight is a big factor. Minimizing the weight of headsets and glasses places demands on the materials, design, and fabrication methods used. For enterprise applications in which a headband solution is practical, a 200-g system is considered acceptable. But with this come higher expectations for functionality, such as onboard mobile compute, an embedded camera, and GPS. AR glasses designed for all-day consumer use, however, should weigh between 60 and 100 g, according to Grant.

In pursuit of this weight range, DigiLens in 2021 deepened its partnership with Mitsubishi Chemical to commercialize consumer-grade plastic waveguides for extended reality. Because it uses a photopolymer and a holographic contact copy manufacturing process, DigiLens claims to be the only plastic waveguide technology provider that does not rely on nanoimprint lithography.

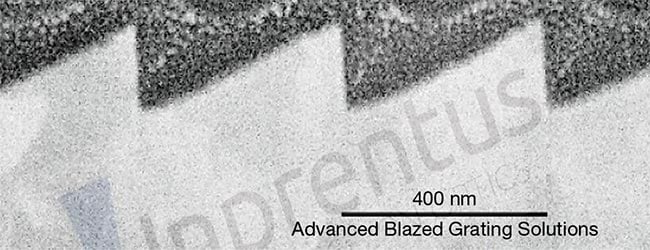

Mechanically ruled, fully blazed waveguides offer high spectral tunability, high efficiency, a large field of view, no write field limitations, and low cost of mass manufacturing. Courtesy of Inprentus.

Glass is stiffer than plastic, so it can be thinner for a given level of waveguide optical quality. But plastic has approximately half the density of glass and offers advantages in both weight and safety (e.g., ballistic and impact protection).

“Ultimately, plastic needs to offer equivalent optical performance to glass to deliver the same waveguide optical performance,” Grant said.
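To put the density argument into rough numbers, the sketch below estimates the mass of one flat waveguide plate in glass and in plastic and compares a pair of plates with the 60 to 100 g all-day budget Grant cites. The plate size and both densities are illustrative assumptions (about 2.5 g/cm³ for a common crown glass and about 1.2 g/cm³ for an optical polymer), not DigiLens specifications.

```python
# Rough mass estimate for flat waveguide plates; every number is an assumption.

GLASS_DENSITY_G_CM3 = 2.5    # assumed: common crown glass
PLASTIC_DENSITY_G_CM3 = 1.2  # assumed: common optical polymer, about half of glass
BUDGET_MIN_G, BUDGET_MAX_G = 60, 100  # all-day consumer range cited in the text

def plate_mass_g(width_mm, height_mm, thickness_mm, density_g_cm3):
    """Mass in grams of a flat plate (1 cm^3 = 1000 mm^3)."""
    volume_cm3 = width_mm * height_mm * thickness_mm / 1000.0
    return volume_cm3 * density_g_cm3

# Hypothetical eyepiece-sized plate: 50 mm x 40 mm x 1 mm, same thickness for both.
glass = plate_mass_g(50, 40, 1.0, GLASS_DENSITY_G_CM3)
plastic = plate_mass_g(50, 40, 1.0, PLASTIC_DENSITY_G_CM3)

for label, per_eye in (("glass", glass), ("plastic", plastic)):
    pair = 2 * per_eye
    low = 100 * pair / BUDGET_MAX_G   # share of the 100 g upper bound
    high = 100 * pair / BUDGET_MIN_G  # share of the 60 g lower bound
    print(f"{label}: {per_eye:.1f} g per eye, {pair:.1f} g per pair "
          f"({low:.0f}% to {high:.0f}% of a {BUDGET_MIN_G}-{BUDGET_MAX_G} g budget)")
```

Under these assumptions two glass plates use roughly a tenth to a sixth of the budget and plastic about half that. The sketch holds thickness fixed; because glass is stiffer, a real glass plate could be made thinner, which would narrow the gap.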

Rather than relying on expensive semiconductor fabrication methods, DigiLens’ surface-relief grating technology enables the company to construct these structures from the underlying volume Bragg grating. DigiLens’ solution can also be achieved with no residual bias layer. The absence of this layer allows a wider-angle response with the high-index material. In practical terms, the result is a wider field of view.
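Why a high-index material widens the field of view can be shown with a common first-order estimate for diffractive waveguides. Guided light must satisfy total internal reflection (n sin θ ≥ 1) while staying below some maximum in-guide angle θ_max so the exit pupil is still replicated densely; the usable band of normalized in-plane wavevector is then n sin θ_max − 1, which maps to a field of view in air of 2 arcsin((n sin θ_max − 1)/2). This is a sketch assuming one wavelength, one dimension, and θ_max = 75°; it does not model DigiLens’ gratings or the bias-layer effect, which the article describes only qualitatively.

```python
import math

def waveguide_fov_deg(n, theta_max_deg=75.0):
    """1D monochromatic FOV (degrees, in air) supported by a waveguide of index n.

    Guided rays need n*sin(theta) >= 1 (TIR) and theta <= theta_max (an assumed
    grazing-angle limit), so the usable normalized wavevector band has width
    n*sin(theta_max) - 1, centered on normal incidence in air.
    """
    band = n * math.sin(math.radians(theta_max_deg)) - 1.0
    if band <= 0:
        return 0.0  # index too low to guide any field under these assumptions
    half = min(band / 2.0, 1.0)  # sine of the half-FOV in air, capped at 90 deg
    return 2.0 * math.degrees(math.asin(half))

for n in (1.5, 1.7, 1.9, 2.0):
    print(f"n = {n}: about {waveguide_fov_deg(n):.0f} deg")
```

In this simple model, raising the index from 1.5 to 2.0 roughly doubles the supported field of view, which is why grating and substrate index matter so much for FOV.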

Inprentus Precision Optics is a diffraction grating manufacturer that also aims to advance single-layer blazed waveguides, but unlike DigiLens, it uses standard nanoimprint lithography methods to produce its mechanically ruled, fully blazed solutions.

An AR waveguide illuminated from the bottom. The circular port is where the overlay image from the light engine enters the waveguide. This overlay image is expanded in the trapezoidal section for an expanded eye-box and finally projected out of the plane of the waveguide into the users’ eyes. Courtesy of NIL Technology.


“Mechanical ruling is a one-step manufacturing process with the best pitch uniformity and 75 years of proven track record for mass replicability,” said Subha Kumar, Inprentus’ chief manufacturing officer. “With fully blazed waveguides, this means high spectral tunability, high efficiency, high field of view, no write field limitations, and low cost of mass manufacturing. Since with mechanical ruling each line is written independently, the nature of noise is random as opposed to correlated defects related to write fields in e-beam techniques.”
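Kumar’s distinction between random and correlated errors can be illustrated with a toy model. Each grating line is treated as a point scatterer at position x_n (in units of the nominal pitch), and the far-field amplitude at spatial frequency f is the sum of exp(2πi f x_n). As an assumption, a write-field defect is modeled as a small scale error inside each field of M lines that resets at every field boundary. Because that error repeats, it concentrates stray light into discrete “ghost” orders at f = 1 ± k/M, whereas independent random errors of the same RMS size spread it into a weak diffuse background. The line count, field size, and error magnitude are hypothetical.

```python
import cmath
import math
import random

def diffraction_intensity(positions, f):
    """Normalized far-field intensity |(1/N) * sum(exp(2*pi*i*f*x_n))|**2 for
    point-like grating lines at `positions` (in units of the nominal pitch),
    evaluated at spatial frequency f (in units of 1/pitch)."""
    amp = sum(cmath.exp(2j * math.pi * f * x) for x in positions) / len(positions)
    return abs(amp) ** 2

N_LINES = 1000  # hypothetical number of lines (a multiple of FIELD)
FIELD = 20      # hypothetical write-field size, in lines
GAIN = 1e-3     # hypothetical scale error per line inside each write field

# Correlated error: every write field repeats the same placement drift and
# then snaps back to the nominal grid at the next field boundary.
periodic_err = [GAIN * (n % FIELD) for n in range(N_LINES)]

# Independent random error per line, with the same RMS as the periodic error.
mean = sum(periodic_err) / N_LINES
rms = math.sqrt(sum((e - mean) ** 2 for e in periodic_err) / N_LINES)
rng = random.Random(0)
random_err = [rng.gauss(0.0, rms) for _ in range(N_LINES)]

# First satellite beside the +1 order; an ideal grating sends no light here.
ghost_f = 1 + 1 / FIELD
ghost_periodic = diffraction_intensity([n + e for n, e in enumerate(periodic_err)], ghost_f)
ghost_random = diffraction_intensity([n + e for n, e in enumerate(random_err)], ghost_f)
print(f"correlated (write-field) errors: ghost intensity {ghost_periodic:.1e}")
print(f"random errors, same RMS:        background {ghost_random:.1e}")
```

With equal RMS placement error, the correlated case puts orders of magnitude more light into the ghost position than the random case, which is the sense in which random noise is the more benign defect.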

3D waveguides

Vergence-accommodation conflict (VAC) is a discomforting phenomenon familiar to users of early VR headgear. It arises when the human brain receives mismatching visual cues between the apparent distance of a virtual object (vergence), and the actual distance required for the eyes to focus on that object (accommodation). It often causes headaches and visual fatigue and can limit or even prevent usage of VR headgear.

For VividQ, solving this challenge trumps concerns about waveguide thickness and weight. In early 2023, the London-based company, in partnership with Dispelix, announced the manufacture of a waveguide that can accurately display simultaneous continuous-depth 3D content.

“Achieving 3D replication in a waveguide was previously described as ‘quasi-impossible’ by leading figures in the industry,” said VividQ’s head of research, Alfred Newman. “The ability to display in true 3D means that you can overcome comfort issues such as VAC, but [it] also enables users to focus naturally on interacting digital and physical objects.”
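The size of the conflict is usually expressed in diopters (inverse meters): the eyes converge on the rendered distance of a virtual object, while a conventional headset makes them focus at one fixed focal distance. A minimal worked sketch, assuming a 63 mm interpupillary distance and a hypothetical fixed focal plane at 2 m:

```python
import math

IPD_M = 0.063        # assumed interpupillary distance (typical adult value)
FIXED_FOCAL_M = 2.0  # hypothetical fixed focal plane of a conventional headset

def vergence_angle_deg(distance_m, ipd_m=IPD_M):
    """Angle between the two lines of sight when fixating at distance_m."""
    return math.degrees(2.0 * math.atan(ipd_m / (2.0 * distance_m)))

def vac_diopters(vergence_distance_m, focal_distance_m):
    """Mismatch between vergence and accommodation demand, in diopters (1/m)."""
    return abs(1.0 / vergence_distance_m - 1.0 / focal_distance_m)

for d in (0.3, 0.5, 1.0, 2.0):
    fixed = vac_diopters(d, FIXED_FOCAL_M)  # optics focus at one plane only
    true_3d = vac_diopters(d, d)            # focus placed at the object's depth
    print(f"object at {d} m: vergence {vergence_angle_deg(d):.1f} deg, "
          f"VAC {fixed:.2f} D (fixed focus) vs {true_3d:.2f} D (continuous depth)")
```

Because diopters scale as 1/distance, near objects suffer most with a fixed focal plane. A display that places each object’s focus at its rendered depth, which is the aim of continuous-depth 3D waveguides, drives the mismatch to zero in this idealization.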

VividQ’s waveguide architecture is based on a standard surface-relief grating structure with a geometry that is tuned to match a holographic optic. Unlike other diffractive AR solutions on the market, VividQ’s optical engine has a large exit pupil, and the 3D waveguide has a relatively large in-coupler that helps maintain coherence across the entire pupil. This design prioritizes highly functional AR glasses over the thinnest and lightest spectacles possible.

“Such devices have a place, but the functionality will be more directed toward basic information and notifications, rather than a mixed reality experience,” Newman said. “That is why we believe that the best AR devices for us to focus on are purpose-built AR headsets, where the consumer demand is image quality and where thickness and weight are less of an issue.”

Diffractive difficulties

Despite the momentum behind diffractive waveguides and their mass manufacturing advantages, challenges continue to dog them. Among those challenges is the difficulty of applying ray tracing.

“Ray tracing a diffractive waveguide is a challenging problem,” said Georgiou. “Each ray bounces fifty to hundreds of times and, with three to four splits each time … Try to trace a whole image, and this is impossible.” He noted that Zemax is developing techniques to reduce the ray tracing burden in its optical design software. But diffractive waveguides are more challenged by refractive indexes that are either too high or too low. “And when it is matched, particles inside create scattering and nonuniformity across the grating height,” he said.
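Georgiou’s figures explain the combinatorial problem. If every grating interaction splits a ray into a few children and every child is followed, the number of paths grows exponentially with the number of bounces. The sketch below counts those branches naively; it illustrates the scaling only and does not describe how Zemax or any other tool actually prunes or merges rays.

```python
def naive_branch_count(splits_per_bounce, bounces):
    """Ray paths if each of `bounces` grating interactions spawns
    `splits_per_bounce` child rays and every child is traced."""
    return splits_per_bounce ** bounces

# Ranges quoted in the text: three to four splits, fifty to hundreds of bounces.
for splits in (3, 4):
    for bounces in (50, 100):
        n = naive_branch_count(splits, bounces)
        print(f"{splits} splits x {bounces} bounces: about {n:.1e} paths per input ray")
```

Even the smallest case, three splits over 50 bounces, gives about 7 × 10^23 paths for a single input ray, before multiplying by the many field points and wavelengths needed to render a whole image.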

Georgiou identified volume holographic waveguides as being the best-positioned solution to emerge as an alternative to diffractive options, but it might take 10 years before they are implemented. In the interim, he sees diffractive waveguides as remaining the dominant solution for high-end AR technologies, while their reflective counterparts will develop a niche where optimal cost or robustness is the determining factor.

However, metalenses, flat lenses whose subwavelength nanostructures shape the phase of incoming light, might also upend the diffractive waveguide market.

NIL Technology, which introduced its first diffractive gratings for AR in 2008, is seeing customers continuously request more advanced nanostructures to optimize their display performance. “At the same time, customers are, to a constantly higher degree, designing for manufacturing both from a mastering and a replication point of view,” said Theodor Nielsen, NIL founder and CEO. “We see very clear signs that the AR market is maturing.”

In parallel with its focus on masters, NIL is working on compact optical modules that benefit from metalenses.

“We can design both lenses and modules in metalenses and take this to prototyping, manufacturing, and assembly,” Nielsen said.
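What designing a lens as a metalens involves can be sketched with the textbook hyperbolic phase profile for a flat lens that focuses a normally incident plane wave to a point at focal length f: φ(r) = −(2π/λ)(√(r² + f²) − f), wrapped to [0, 2π) so each nanostructure only has to supply a phase between 0 and 2π. The focal length, aperture, and wavelength below are hypothetical, and the step of mapping phase to actual nanostructure geometries, which is where much of the design and mastering effort goes, is not shown.

```python
import math

def metalens_phase(r_m, focal_m, wavelength_m):
    """Target phase (radians, wrapped to [0, 2*pi)) at radius r for an ideal
    hyperbolic metalens focusing a normally incident plane wave at focal_m."""
    phi = -2.0 * math.pi / wavelength_m * (math.hypot(r_m, focal_m) - focal_m)
    return phi % (2.0 * math.pi)

def zone_count(aperture_radius_m, focal_m, wavelength_m):
    """Number of full 2*pi phase wraps between the lens center and its edge."""
    path_diff = math.hypot(aperture_radius_m, focal_m) - focal_m
    return int(path_diff // wavelength_m)

F = 1e-3      # hypothetical 1 mm focal length
R = 0.5e-3    # hypothetical 0.5 mm aperture radius
LAM = 550e-9  # hypothetical design wavelength (green)

print("phase zones across the aperture:", zone_count(R, F, LAM))
for r_um in (0, 50, 100, 200, 500):
    print(f"r = {r_um} um: phase {metalens_phase(r_um * 1e-6, F, LAM):.3f} rad")
```

The zone count grows quickly as the aperture widens or the focal length shortens, which gives a sense of how fine and how uniform the nanostructures must be across a lens.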

In early 2023, VividQ announced its own diffractive surface-relief grating waveguide, though the company also has a reflective counterpart that uses similar algorithmic techniques. Newman noted that diffractive waveguides not only offer a clearer path to mass manufacturing but also fit well into the algorithmic pipeline and offer a tightly controlled optical surface. This makes it easier to “invert” the effect of that surface on the 3D image in extended reality headgear. In contrast, reflective waveguides perform better in brightness and see-through quality, but they lack a clear path to inexpensive mass production.
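The idea of inverting a well-characterized surface can be illustrated with the simplest possible model, which is an assumption and not VividQ’s published pipeline: treat the waveguide as imposing a known phase error on the light field at each sample point. If that error is known precisely, a holographic engine can pre-multiply the field it displays by the conjugate phase so the two cancel at the output. Real systems must also handle amplitude, wavelength, and angle dependence, which is one reason a tightly controlled surface helps.

```python
import cmath
import math

def propagate_through_waveguide(field, wg_phase):
    """Toy model: the waveguide multiplies each sample by exp(i*phase)."""
    return [e * cmath.exp(1j * p) for e, p in zip(field, wg_phase)]

def precompensate(target, wg_phase):
    """Apply the conjugate of the known waveguide phase before display."""
    return [t * cmath.exp(-1j * p) for t, p in zip(target, wg_phase)]

def max_error(a, b):
    """Largest pointwise difference between two complex fields."""
    return max(abs(x - y) for x, y in zip(a, b))

# Hypothetical target field (a tilted plane wave) and a hypothetical,
# precisely characterized waveguide phase error across 64 samples.
N = 64
target = [cmath.exp(2j * math.pi * 3 * k / N) for k in range(N)]
wg_phase = [0.4 * math.sin(2 * math.pi * k / N) + 0.1 * (k / N) ** 2 for k in range(N)]

uncorrected = propagate_through_waveguide(target, wg_phase)
corrected = propagate_through_waveguide(precompensate(target, wg_phase), wg_phase)

print(f"max error without compensation: {max_error(uncorrected, target):.3f}")
print(f"max error with compensation:    {max_error(corrected, target):.1e}")
```

The cancellation is only as good as the knowledge of the surface; any mismatch between the assumed and actual phase remains in the output image.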

Lumus’ novel multilayer reflective waveguide allows optical elements, such as prescription or transitional lenses, to be bonded directly to the waveguide without performance interference. This enabled the development of a waveguide and projector assembly that weighs just over 11 g. The new waveguides have a 50° FOV, and Lumus plans to release samples with 70° or 80° FOVs next year. Courtesy of Lumus.

“We need a photonics breakthrough to improve diffractive waveguide design, or we need a materials breakthrough to make reflective waveguides more manufacturable,” Newman said.

While most diffractive waveguide makers emphasize the single-layer approach, Israel-based Lumus is pioneering a novel multilayer product. Its new reflective waveguide can be bonded directly to optical elements, so prescription or transitional lenses can be attached to the waveguide without performance interference. This capability allowed Lumus to reduce the weight of a waveguide and projector assembly to just over 11 g. The new waveguides have a 50° field of view (FOV). Lumus plans to release samples with FOVs up to 70° or 80° next year.

“The physics challenge of creating large-FOV waveguides while maintaining small and lightweight projector modules is not trivial,” Lumus’ Goldman said. “We are creating a massive active area on a glass substrate and attempting to maintain high quality, color uniformity, brightness, and several other important parameters, and making the process for producing these waveguides stable and repeatable at scale. The technology will scale as has previous tech such as semiconductors.”
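One factor behind the tension between large fields of view and small projectors is angular resolution: spreading a fixed number of projector pixels over a wider field lowers the pixels per degree the wearer sees. A back-of-the-envelope sketch, assuming a hypothetical 1920-pixel-wide projector and treating each quoted FOV as horizontal with pixels spread evenly in angle (simplifications, since quoted FOVs are often diagonal):

```python
import math

def pixels_per_degree(pixels, fov_deg):
    """Average angular resolution, assuming pixels spread evenly across the FOV."""
    return pixels / fov_deg

def pixels_needed(fov_deg, target_ppd=60.0):
    """Pixels across the FOV to reach target_ppd (60 ppd is ~1 arcminute per pixel)."""
    return math.ceil(fov_deg * target_ppd)

PROJECTOR_PIXELS = 1920  # hypothetical projector width in pixels

for fov in (50, 70, 80):
    print(f"{fov} deg: {pixels_per_degree(PROJECTOR_PIXELS, fov):.1f} ppd with "
          f"{PROJECTOR_PIXELS} px; {pixels_needed(fov)} px needed for 60 ppd")
```

Under these assumptions, moving from 50° to 80° cuts angular resolution by more than a third unless the projector gains pixels, and more pixels generally push toward a larger light engine.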