Infrared sensing and camera technology offer support as regulators push proposals for nighttime pedestrian automatic braking systems and improved outcomes on roadways.

JAMES SCHLETT, CONTRIBUTING EDITOR

According to data from the National Highway Traffic Safety Administration (NHTSA), more than 75% of automotive-related pedestrian fatalities in the U.S. in 2021 occurred at night. This value is hardly an outlier: according to the Governors Highway Safety Association, nighttime pedestrian fatalities in the U.S. have increased at more than 3× the rate of daytime pedestrian fatalities since 2010.

TriEye’s detection and ranging platform delivers 2D and 3D SWIR images. The company combines the advantages of SWIR imaging with an approach that aims to overcome the challenges that traditional windshields pose for assisted and fully autonomous driving. Courtesy of TriEye.

While investigations into the causes of this disparity continue, prospective solutions built on IR sensor-enabled advanced driver-assistance systems (ADAS) are ramping up with the aim of helping to reduce nighttime pedestrian fatalities. For developers of IR sensors and cameras, the race is on to position SWIR and LWIR technologies for mass adoption by the automotive industry.

Regulations and technologies

This spring, the NHTSA finalized a federal motor vehicle safety standard that will make automatic emergency braking (AEB), including pedestrian AEB (PAEB), standard on all passenger cars by September 2029. Around one year earlier, the agency had proposed a set of standards to govern PAEB nighttime detection. Under these proposed rules, new light vehicles would need PAEB systems that enable nighttime drivers to avoid hitting other vehicles when traveling at 62 mph (~100 km/h) and to avoid hitting pedestrians when traveling at 37 mph (~60 km/h).

A car equipped with PAEB traveling at 37 mph would need ~148 ft (45 m) to come to a complete stop. A low-resolution thermal camera (<0.1 MP) can detect a pedestrian at this distance with a high level of confidence. According to Sebastien Tinnes, global market leader at IR detector designer and manufacturer Lynred, a thermal camera with a 12-µm pixel pitch and quarter video graphics array (QVGA) resolution (320 × 240 pixels) can fulfill all of the NHTSA’s proposed PAEB requirements.
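The ~45 m figure can be roughly reproduced with a simple stopping-distance model: distance traveled during a reaction delay plus braking at constant deceleration. The article does not state the assumptions behind its number, so the 1.5 s reaction time and 0.7 g deceleration below are illustrative guesses (an automated system would likely react faster). The sketch also checks that a QVGA array stays under 0.1 MP.

```python
# Stopping-distance sketch: reaction distance + constant-deceleration braking.
MPH_TO_MS = 0.44704   # 1 mph in m/s (exact by definition)
G = 9.80665           # standard gravity, m/s^2
M_PER_FT = 0.3048     # exact by definition


def stopping_distance_m(speed_mph: float, reaction_s: float, decel_g: float) -> float:
    """Distance (m) covered during the reaction delay plus braking to a stop."""
    v = speed_mph * MPH_TO_MS
    reaction_m = v * reaction_s                 # travel before the brakes act
    braking_m = v ** 2 / (2 * decel_g * G)      # v^2 / (2a) for constant deceleration
    return reaction_m + braking_m


# Assumed inputs (not stated in the article): 1.5 s reaction, 0.7 g braking.
d = stopping_distance_m(37, reaction_s=1.5, decel_g=0.7)
print(f"stopping distance: {d:.1f} m ({d / M_PER_FT:.0f} ft)")

# A QVGA array (320 x 240) has 76,800 pixels, i.e. under 0.1 MP.
qvga_pixels = 320 * 240
print(f"QVGA: {qvga_pixels} pixels = {qvga_pixels / 1e6:.3f} MP")
```

Changing either assumption shifts the result noticeably, which is why published stopping distances vary.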

Sensors and cameras are the principal photonic technologies at the heart of the efforts of the NHTSA and comparable agencies worldwide. Currently, thermal imaging and lidar are the two modalities at the forefront of efforts to meet the proposed PAEB standards. But other technologies must be considered in this conversation: the ADAS in most vehicles rely primarily on radar, and some also include visible cameras.

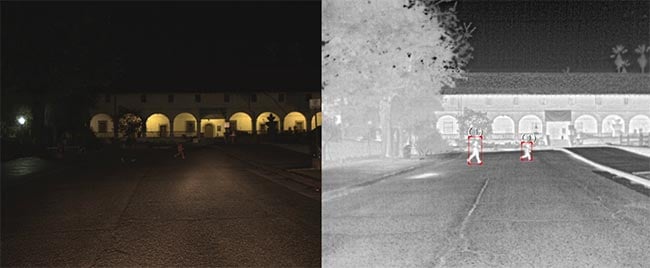

A comparison of nighttime driving imaging with visible (left) and thermal cameras (right). Courtesy of Teledyne FLIR.

Strengthening synergies among detection technologies will be key to meeting the NHTSA’s targets. While ADAS are effective during daytime hours in clear weather, for example, their efficacy in preventing accidents involving pedestrians diminishes in poor weather and dark driving conditions.


Lidar, on the other hand, is expensive compared with other sensing technologies. Many current lidar systems also struggle to identify and classify living objects, especially in crowded urban environments, said Ezra Merrill, senior director of marketing at Teledyne FLIR for OEMs and emerging markets.

“An additional sensor is needed to address nighttime and poor weather conditions,” Merrill said.

“As a result, Teledyne FLIR is seeing a significant increase in demand from automotive OEMs that understand the need to expand sensing capabilities to pass upcoming testing standards in review with the NHTSA. In many cases, this means adding a thermal camera to the existing visible-and-radar fused solution.”

Sensor fusion is an established concept in automotive, bringing the strengths of multiple modalities together in a common system or application. Fusion in the context of ADAS, however, is still evolving. Last fall, Teledyne FLIR announced that its thermal cameras were operating in Ansys’ sensor simulation software. Teledyne FLIR also partnered with Valeo to deliver thermal imaging technology for night-vision ADAS. The system at the heart of this collaboration will use Valeo’s ADAS software stack to support functions such as nighttime AEB for passenger and commercial vehicles as well as autonomous cars.

Teledyne FLIR’s upcoming automotive-grade thermal camera will be less than half the size and weight of its previous generation. Its 640 × 512-pixel resolution is comparable to video graphics array (VGA) resolution and provides 4× the pixel count of previous thermal-enhanced driver-assistance systems, Merrill said.
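Why pixel count matters for detection range can be sketched with small-angle geometry: at a fixed field of view, each pixel subtends roughly FOV / (pixels across), so doubling the linear resolution (4× the pixel count) doubles the number of pixels that land on a pedestrian at a given range, or doubles the range at which a given pixel count is reached. The 24° horizontal field of view, the 0.5 m pedestrian width, and the 320 × 256 previous-generation format below are hypothetical; the article does not specify them.

```python
import math


def pixel_count(width: int, height: int) -> int:
    return width * height


def pixels_on_target(target_m: float, range_m: float, hfov_deg: float, h_pixels: int) -> float:
    """Approximate number of pixels spanned horizontally by a target.

    Small-angle approximation: each pixel is treated as subtending the same
    angle, hfov / h_pixels, which is reasonable for modest fields of view.
    """
    ifov_rad = math.radians(hfov_deg) / h_pixels
    return target_m / (range_m * ifov_rad)


# Hypothetical scenario: 24-degree horizontal FOV, 0.5-m-wide pedestrian at 45 m.
for w, h in [(320, 256), (640, 512)]:
    n = pixels_on_target(0.5, 45.0, 24.0, w)
    print(f"{w} x {h}: {pixel_count(w, h):,} pixels total, ~{n:.1f} across the pedestrian")
```

For reference, standard VGA is 640 × 480 (307,200 pixels), while 640 × 512 gives 327,680.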

Comparing the technical and performance capabilities of thermal sensors with those of their visible counterparts raises additional considerations.

“Combined with the reduced pixel count needed, thermal sensors can be smaller than visible sensors and come closer to visible sensor prices,” Tinnes said. For its part, Lynred plans to introduce a product that brings the pixel pitch down to 8.5 µm within the next two years.
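Tinnes’ point about smaller sensors follows from simple geometry: the active area of a focal-plane array scales with the square of the pixel pitch. A minimal sketch, assuming the QVGA format is kept at the 8.5-µm pitch (the article does not say which format Lynred’s product will use) and counting only the active area, not readout circuitry or packaging:

```python
def array_size_mm(cols: int, rows: int, pitch_um: float) -> tuple[float, float]:
    """Active-area width and height (mm) of a focal-plane array."""
    return cols * pitch_um / 1000.0, rows * pitch_um / 1000.0


# QVGA format assumed at both pitches (hypothetical for the 8.5-um part).
w12, h12 = array_size_mm(320, 240, 12.0)
w85, h85 = array_size_mm(320, 240, 8.5)
area_ratio = (w85 * h85) / (w12 * h12)   # equals (8.5 / 12) ** 2

print(f"12 um pitch:  {w12:.2f} x {h12:.2f} mm")
print(f"8.5 um pitch: {w85:.2f} x {h85:.2f} mm")
print(f"active area shrinks to {area_ratio:.0%} of the 12-um array")
```

For the same field of view, the smaller array also allows a focal length shorter by the pitch ratio (8.5/12), so the lens can shrink along with the sensor.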

SWIR and LWIR

Many of the technologies that factor into autonomous mobility and automotive safety systems are well established.

Sensing and imaging in the SWIR band, for example, is often favored in harsh environments and nighttime settings, where it delivers high-resolution images. Solutions for industrial manufacturing, surveillance, counterfeit detection, and food inspection rely on SWIR sensing and imaging.

Israeli startup TriEye is developing a CMOS-based SWIR sensing solution for ADAS. Its detection and ranging platform uses a high-definition CMOS-based SWIR sensor and merges the functions of a camera and lidar into a single modality that delivers 2D and 3D mapping capabilities.

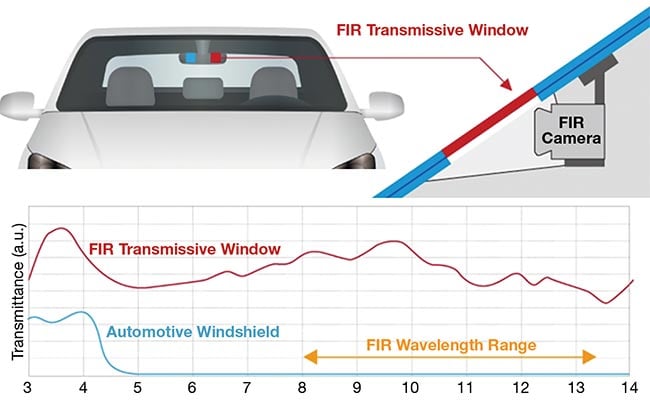

AGC has developed a windshield that fuses traditional glass with a material capable of transmitting far-IR (FIR) wavelengths. Courtesy of AGC.

Unlike LWIR sensors, TriEye’s SWIR technology can be positioned behind the standard glass of windshields and headlamps. Windshield glass is opaque to LWIR light, which means that system integrators are unable to place many thermal cameras behind standard windshields.

“The compatibility of SWIR camera outputs with existing computer vision algorithms streamlines the integration process, avoiding the need for extensive training of new deep learning algorithms, a requirement for thermal camera systems due to their unique heat-based imagery,” said Nitzan Yosef Presburger, head of marketing at TriEye. Presburger said that operating in the SWIR band, rather than the MIR or LWIR band, makes a system less susceptible to external environmental conditions. SWIR cameras also rely on the photodiode effect, which produces detailed views of scenes, unlike the images based on thermal differences measured by the bolometric sensors commonly integrated into LWIR cameras. Further, thermal imaging’s longer wavelength, ~10 µm, can compromise resolution by enlarging pixel sizes and physically constraining pixel density. The bigger pixels also contribute to thermal cameras’ need for larger lenses than their visible light counterparts.

In this way, TriEye’s technology aims to circumvent the challenges posed by traditional windshields while also leveraging the advantages of SWIR imaging.
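Two textbook relations illustrate the wavelength argument above. Wien’s displacement law (λmax = b / T) puts the peak emission of a ~310 K human body near 9.3 µm, which is why pedestrian-detecting thermal cameras operate around 10 µm. The diffraction-limited Airy disk diameter (2.44 λN) then shows how the longer wavelength blurs the image: at f/1, the spot at 10 µm is about 24 µm across, roughly two 12-µm pixels. The f/1 aperture and the representative visible and SWIR wavelengths are illustrative assumptions, not values from the article.

```python
WIEN_B_UM_K = 2897.771955  # Wien's displacement constant, um*K


def peak_wavelength_um(temp_k: float) -> float:
    """Wavelength (um) of peak blackbody emission, from Wien's displacement law."""
    return WIEN_B_UM_K / temp_k


def airy_diameter_um(wavelength_um: float, f_number: float) -> float:
    """Diameter (um) of the diffraction-limited Airy disk at the focal plane."""
    return 2.44 * wavelength_um * f_number


print(f"peak emission at ~310 K: {peak_wavelength_um(310.0):.1f} um")
# Representative wavelengths (illustrative), all at an assumed f/1 aperture.
for band, wl in [("visible", 0.55), ("SWIR", 1.55), ("LWIR", 10.0)]:
    print(f"{band:>7}: {wl:5.2f} um -> Airy disk ~{airy_diameter_um(wl, 1.0):4.1f} um")
```

Because the blur spot scales with wavelength, shrinking LWIR pixels far below the spot size yields diminishing returns unless the optics become faster or larger.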

Despite these advantages, SWIR solutions may be expensive, and, according to Merrill, these cost concerns are largely driving automotive industry customers away from SWIR and toward LWIR. SWIR sensing and imaging’s reliance on active illumination also makes it vulnerable to the potential for a living light source, whether a person or animal. The additional light sources required to support SWIR sensors add cost to existing solutions and, in some cases, raise power concerns.

“If all cars are equipped with SWIR illuminators, it leads to the same effect as headlamps for a visible camera: The camera is bloomed by other illuminators from other vehicles,” Tinnes said.

Windshield glass is opaque to LWIR light, which means that system integrators are unable to place many thermal cameras behind standard windshields. TriEye’s SWIR technology can be placed behind windshields as well as headlights. Courtesy of TriEye.

According to Presburger, frequently occurring conditions that may affect the performance of a SWIR sensing solution include the presence of sand or chemicals. In response, TriEye is developing advanced filtering and predictive analytics to minimize the effects of severe conditions.

LWIR properties and windshield worries

Because LWIR thermal cameras cannot function as intended in or behind a standard windshield, system integrators have historically mounted them on the grilles of cars. There, the cameras cannot benefit from windshield wipers that clear away water and debris, although hydrophobic coatings, air blasts, and/or spray nozzles are often used to clear the window of such exterior-mounted thermal cameras, according to Merrill.

In response, several suppliers to the automotive industry have developed LWIR-conducive windshields. Last September, for example, Lynred, in collaboration with Saint-Gobain Sekurit, unveiled a windshield design that integrates a visible and a thermal camera. This windshield features a small area that is transparent to longwave radiation. It enables sensor fusion that improves daytime detection rates through redundancy and extends the PAEB system’s nighttime operational design domain. The collaborators showcased a third-generation windshield with improved integration at AutoSens USA 2024 in Detroit, Tinnes said.
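The redundancy benefit of pairing a visible and a thermal camera can be illustrated with simple OR-fusion: the fused system misses a pedestrian only if every sensor misses. This is a simplified sketch, not the Lynred/Saint-Gobain Sekurit fusion method; it assumes independent sensor misses, and the 0.90 and 0.85 per-sensor detection rates are hypothetical.

```python
from math import prod


def or_fusion(per_sensor: list[float]) -> float:
    """Probability that at least one sensor detects the target.

    Assumes misses are statistically independent; real sensors only
    approximate this (fog or glare can degrade several at once).
    """
    return 1.0 - prod(1.0 - p for p in per_sensor)


# Hypothetical daytime per-sensor detection rates.
visible, thermal = 0.90, 0.85
print(f"visible alone: {visible:.3f}")
print(f"visible + thermal: {or_fusion([visible, thermal]):.3f}")
```

OR-fusion also raises the false-alarm rate, one reason fusion stacks often weigh per-sensor confidences rather than accept any single detection.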

The solution found by Lynred and Saint-Gobain Sekurit followed Tokyo-based glass technology company AGC’s development of a far-IR (FIR) sensor-enabled windshield. Windshields are typically composed of a single material and are subject to strict standards, including those that govern reliability and safety. Introducing an FIR-conducive material posed several challenges, such as ensuring that it could resist scratching from water drops mixed with sand and withstand damage from ultraviolet light. To overcome these challenges while also minimizing shine, AGC developed an optical coating film. The company expects to begin integrating the FIR windshields into new vehicles in 2027.

Athermalization effects

Especially in terms of robustness, many LWIR cameras benefit from designs that optimize these imagers for military use. However, these cameras may not offer levels of adaptability that make them suitable for the wide temperature ranges demanded by automotive standards, said Raz Peleg, vice president of business development at smart thermal sensing technologies developer ADASKY.

This quality makes athermalization, or the consistent performance in varying environmental conditions, critical, he said.
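A rough thin-lens estimate shows why athermalization matters for germanium LWIR optics: germanium’s refractive index rises steeply with temperature, so the focal length drifts across an automotive range of −40 to 85 °C. The material constants below (n ≈ 4.0, dn/dT ≈ 4 × 10⁻⁴ /K, expansion ≈ 6 × 10⁻⁶ /K) are approximate textbook values, and the 10 mm f/1 lens, 20 °C reference temperature, and ±2λN² depth-of-focus rule of thumb are illustrative assumptions; housing expansion and multi-element compensation are ignored.

```python
def focus_shift_um(focal_mm: float, n: float, dn_dt: float, cte: float, delta_t_k: float) -> float:
    """Thin-lens focal-length change (um) for a temperature change delta_t_k.

    Uses the thermo-optical coefficient x_f = cte - (dn/dT) / (n - 1).
    Housing expansion and multi-element compensation are ignored.
    """
    x_f = cte - dn_dt / (n - 1.0)
    return focal_mm * 1000.0 * x_f * delta_t_k


# Approximate germanium constants near 10 um (illustrative values).
GE_N, GE_DN_DT, GE_CTE = 4.0, 4.0e-4, 6.0e-6
FOCAL_MM, F_NUMBER, WAVELENGTH_UM, T_REF_C = 10.0, 1.0, 10.0, 20.0

# Rule-of-thumb depth of focus: +/- 2 * wavelength * N^2.
dof_um = 2.0 * WAVELENGTH_UM * F_NUMBER ** 2

for t_c in (-40.0, 85.0):
    shift = focus_shift_um(FOCAL_MM, GE_N, GE_DN_DT, GE_CTE, t_c - T_REF_C)
    print(f"{t_c:+5.0f} C: focus shift {shift:+6.1f} um (depth of focus +/-{dof_um:.0f} um)")
```

Under these assumptions, the uncompensated shift at both temperature extremes is several times the depth of focus, which is the defocus an athermalized design must cancel.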

ADASKY has developed a shutterless LWIR camera that is designed to withstand the harsh automotive requirement of −40 to 85 °C (−40 to 185 °F). ADASKY’s camera weighs <50 g and measures 26 × 44 mm. Similarly, Teledyne FLIR’s forthcoming automotive thermal camera is specified to operate athermally from −40 to 85 °C ambient temperature, Merrill said.

However, ADASKY has designed its solution to be located outside the vehicle. It is envisioned to be paired with a heated lens to prevent frost.

Nighttime detection with combined visible and thermal imaging. ADASKY’s shutterless LWIR camera is designed to withstand harsh requirements: −40 to 85 °C. Courtesy of ADASKY.

“LWIR is a camera-based modality. The camera needs to stay clean, and the OEM is responsible for cleaning it,” Peleg said. He said that the small size of the camera supports high mounting, such as on the roof of the vehicle. This positioning would help to minimize the effects of debris and reduce damage, according to Peleg.

Though the ADASKY and Teledyne FLIR solutions differ in the specifics of how they are to be deployed, both reflect the advantages of harnessing the LWIR band for automotive. According to Merrill, tests conducted at Sandia National Laboratories’ fog facility in 2021 showed that LWIR sensors provided the best overall visibility through various fog types and densities. The Teledyne FLIR-Sandia tests compared LWIR results to those obtained via visible, MIR, SWIR, and lidar sensors.

AI-enabled

Whether automotive thermal cameras are integrated inside or outside the windshield, they still face cost challenges. As designers pursue cost-effectiveness, autonomous intelligence, a subset of AI, is already making inroads in that direction.

Chuck Gershman, CEO and cofounder of Owl Autonomous Imaging (Owl AI) in Fairport, N.Y., said that the three biggest roadblocks to reducing thermal camera costs are the converters that turn the sensor’s analog outputs into digital data; the processor that applies nonuniformity corrections to the sensor data; and the mechanical shutter needed to provide flat image data for defining the correction factors.
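In the textbook case, the correction factors Gershman mentions are a per-pixel gain and offset computed from two uniform reference frames, the kind of flat image data a shutter or blackbody provides. The sketch below is a generic two-point nonuniformity correction on a simulated, perfectly linear 320 × 240 array; it is not Owl AI’s on-chip method, and the simulated gain and offset spreads are arbitrary.

```python
import random
from statistics import fmean


def two_point_nuc(cold: list[float], hot: list[float]) -> tuple[list[float], list[float]]:
    """Per-pixel gain and offset from two uniform reference frames.

    After correction (gain * raw + offset), every pixel reproduces the
    array-average response at both reference levels.
    """
    cold_mean, hot_mean = fmean(cold), fmean(hot)
    gain = [(hot_mean - cold_mean) / (h - c) for c, h in zip(cold, hot)]
    offset = [cold_mean - g * c for g, c in zip(gain, cold)]
    return gain, offset


def apply_nuc(raw: list[float], gain: list[float], offset: list[float]) -> list[float]:
    return [g * r + o for r, g, o in zip(raw, gain, offset)]


# Simulated 320 x 240 array: linear pixels with random gain/offset errors.
random.seed(0)
N_PIXELS = 320 * 240
true_gain = [random.gauss(1.0, 0.05) for _ in range(N_PIXELS)]
true_offset = [random.gauss(0.0, 20.0) for _ in range(N_PIXELS)]


def read_frame(scene_level: float) -> list[float]:
    """Raw output for a uniform scene at the given (arbitrary) signal level."""
    return [g * scene_level + o for g, o in zip(true_gain, true_offset)]


gain, offset = two_point_nuc(read_frame(1000.0), read_frame(3000.0))  # flat frames
raw = read_frame(2000.0)                                              # new uniform scene
corrected = apply_nuc(raw, gain, offset)
print(f"raw spread (max - min): {max(raw) - min(raw):.1f} counts")
print(f"corrected spread (max - min): {max(corrected) - min(corrected):.1e} counts")
```

Real microbolometers drift with temperature and are not perfectly linear, which is why cameras recalibrate periodically, traditionally by closing a shutter.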

Owl AI has developed a thermal HD camera system that performs the necessary digitization and corrections in a readout circuit in a semiconductor layer below the microbolometer array. As a result, the design avoids the costs typically associated with a mechanical shutter. It also makes resolution improvements more straightforward by reducing the amount of circuitry needed around the sensing area. While most LWIR cameras perform digitizing, correction, and interfacing in circuitry on several PC boards, Owl AI’s design uses the sensor device itself to perform many of those functions. This supports a single-board camera design similar to that of visible light cameras.

“Properly executed internal corrections for nonuniformity and temperature remove the need for additional preparation of the image for the classification and range-finding tasks,” Gershman said. “AI, therefore, is needed only for tasks directly related to extracting the data used by the ADAS, not for fixing bad images.”

Owl AI’s software identifies hundreds of objects at 30 frames/s. Currently, the AI can identify several types of objects, including pedestrians, cyclists, some animals, and various types of vehicles. It can accurately process thermal signatures to ~40 m, and Owl AI aims to extend that range to 180 m with its next-generation system.
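The frame rate and range figures translate into a simple time budget: dividing the detection range by vehicle speed gives the time available before reaching a pedestrian, and multiplying by the frame rate gives the number of frames captured in that time. The 37 mph speed is borrowed from the proposed PAEB pedestrian condition; constant speed and a stationary pedestrian are simplifying assumptions.

```python
MPH_TO_MS = 0.44704  # 1 mph in m/s


def time_budget(range_m: float, speed_mph: float, fps: float = 30.0) -> tuple[float, float]:
    """Seconds and frames available while closing range_m at constant speed."""
    seconds = range_m / (speed_mph * MPH_TO_MS)
    return seconds, seconds * fps


for range_m in (40.0, 180.0):
    s, frames = time_budget(range_m, 37.0)
    print(f"{range_m:5.0f} m at 37 mph: {s:5.2f} s, ~{frames:.0f} frames at 30 frames/s")
```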

At the same time, ADASKY has incorporated generative AI into the synthetic thermal data set for its shutterless LWIR offering. This has enabled the company to advance detection (≥100 m) as well as classification.