Newly developed tools are making field-programmable gate arrays easier to work with, offering advantages over running software code on a CPU or an imaging-optimized GPU.

HANK HOGAN, CONTRIBUTING EDITOR

A field-programmable gate array (FPGA), as the name implies, is an integrated circuit that contains an array of transistor-based logic gates. The connections between these gates can be changed at will, allowing for rewiring that alters what the FPGA does. It can turn pixels into a video stream for further processing; locate features such as the eyes, nose, and mouth to determine where a person is looking; or perform other vision tasks, such as object detection and identification.

Workloads moving to the edge require devices that are both low power and computationally powerful, characteristics of field-programmable gate arrays (FPGAs). Courtesy of Microchip.

Prized for their low power, small form factor, and computational capability, FPGAs are well suited for specialized tasks that demand parallelism during processing. The rub: They’re notoriously difficult to program. Newly developed tools make programming them easier, though still not as simple as programming other types of processors.

Vendors are working to lower this programming burden even more while also increasing FPGA performance.

As a result, industry experts forecast the increasing use of FPGAs in embedded vision, particularly as resolutions increase, interface data rates rise, computing burdens grow, and the required response times shorten.

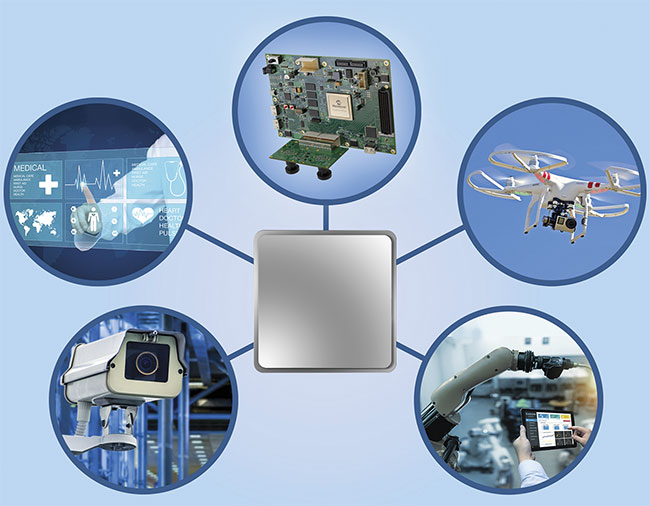

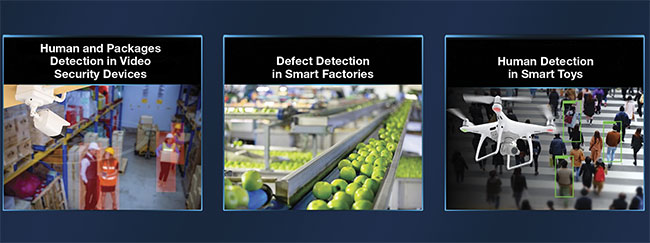

FPGAs are used in many designs, including in embedded vision, for product defect detection applications. Some of these applications involve high-speed conveyor belts. Courtesy of Avnet.

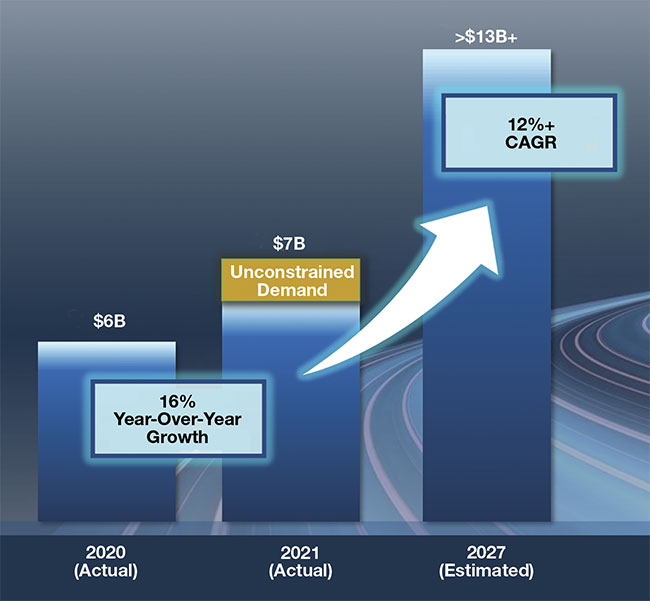

All of this translates into a sunny outlook for FPGA vendors. Intel, for example, sees the market growing at a healthy clip.

“When we exit 2022, I wouldn’t be surprised if we’re well over eight, nine billion [dollars] as an industry, which is phenomenal,” said Shannon Poulin, corporate vice president and general manager of the company’s Programmable Solutions Group, at a briefing held before Intel’s Innovation conference in September. This industry revenue number was more than $7 billion in 2021 and is projected to be more than $13 billion by 2027.

Lattice Semiconductor, another leading FPGA supplier, is also seeing strong demand. Mark Hoopes, director of industrial and automation products, said one reason involves the way the company optimizes its products.

“Our focus is on low-power FPGAs, small packages meant for edge processing,” he said.

FPGAs provide the processing bandwidth needed for cameras and sensors in embedded applications and elsewhere. Courtesy of Microchip.

An alternative to an FPGA is to run software code on a standard CPU or on a GPU, which is better optimized for image processing. However, a CPU handles the image analysis of pixels serially, an approach that is much slower than using the parallel operation of a GPU or an FPGA. Most GPUs, however, consume much more power than an FPGA, as much as 10× more. For embedded vision, this higher power usage requires getting rid of more waste heat, which is a challenge when a camera is packed with other heat-generating components. Therefore, an FPGA can be the best processing solution for embedded vision.

Running AI algorithms

A typical use case involves an always-on, yet low-power, laptop solution. In this scenario, an FPGA running AI algorithms detects when a person approaches the laptop, signaling that the machine is about to be used and needs to be in a high-power state. To perform this task, the FPGA consumes only 200 mW, which is 250× less power than the 50 W used by a standard laptop when operating, and a tenth of the power the laptop uses when sleeping.
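The power comparison can be checked with a few lines of arithmetic. The sketch below uses only the two figures quoted above (200 mW and 50 W); the sleep-state power is not stated in the article, so `implied_sleep_w` is merely what the "a tenth" claim would imply, and all names are illustrative.

```python
# Figures quoted in the article.
FPGA_POWER_W = 0.2       # 200 mW for the always-on FPGA
LAPTOP_ACTIVE_W = 50.0   # laptop in normal operation


def power_ratio(reference_w: float, device_w: float) -> float:
    """Return how many times less power the device draws than the reference."""
    return reference_w / device_w


active_ratio = power_ratio(LAPTOP_ACTIVE_W, FPGA_POWER_W)

# Assumption: the article gives no sleep-state figure. If the FPGA draws
# a tenth of the sleep power, the sleeping laptop would draw about this much.
implied_sleep_w = FPGA_POWER_W * 10

print(f"FPGA draws {active_ratio:.0f}x less than the active laptop")
print(f"Implied laptop sleep power: {implied_sleep_w:.1f} W")
```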

Embedded vision in many applications benefits from processing on an FPGA. Courtesy of Lattice Semiconductor.

Another application is the implementation of the JPEG XS compression standard. This system for image and video encoding cuts the data size of a video stream tenfold while not meaningfully affecting quality, Hoopes said. This makes it possible to run higher-bandwidth video over less expensive and lower-power networks.
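To see why a tenfold reduction matters for the network, consider a rough bandwidth estimate. The sketch below assumes an uncompressed 1920 × 1080 stream at 60 frames per second and 24 bits per pixel; these stream parameters and the 1-Gbps link are illustrative assumptions, not figures from Hoopes, and only the 10:1 ratio comes from the article.

```python
def raw_bitrate_bps(width: int, height: int, fps: int, bits_per_pixel: int) -> int:
    """Uncompressed video bit rate in bits per second."""
    return width * height * fps * bits_per_pixel


COMPRESSION_RATIO = 10          # "tenfold" reduction cited in the article
LINK_CAPACITY_BPS = 1_000_000_000  # assumed 1-Gbps network link

# Assumed stream: 1080p, 60 fps, 24 bits per pixel.
raw_bps = raw_bitrate_bps(1920, 1080, 60, 24)
compressed_bps = raw_bps / COMPRESSION_RATIO

print(f"Raw:        {raw_bps / 1e9:.2f} Gbps")
print(f"Compressed: {compressed_bps / 1e9:.2f} Gbps")
print("Raw fits on link:       ", raw_bps <= LINK_CAPACITY_BPS)
print("Compressed fits on link:", compressed_bps <= LINK_CAPACITY_BPS)
```

Under these assumptions, the raw stream exceeds the link capacity while the compressed stream fits with room to spare.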

Even better than FPGA performance is possible with an application-specific IC (ASIC). But this approach suffers from high costs and long lead times because the design and manufacture of an ASIC take months. Custom-designed chips also cannot be easily changed, if at all, to incorporate new algorithms or different solutions.

The FPGA market is growing due to expanding cloud, enterprise, and network markets, as well as edge and embedded applications. Embedded vision is part of the last two areas. CAGR: compound annual growth rate. Courtesy of Intel.

“With AI heading towards the edge, and particularly in vision systems, FPGAs are also exceptionally good at absorbing the vast quantities of compute that these applications require, and remain flexible even after deployment. That is particularly important in a volatile market such as AI,” said Mark Oliver, vice president of marketing at FPGA-maker Efinix.

The dividing line in deciding whether to go with an FPGA lies at about half-a-gigapixel-per-second processing, said Ray Hoare, Concurrent EDA’s CEO. The company specializes in high-end FPGA designs and solutions. Concurrent is also a distributor of high-speed Mikrotron cameras, Euresys frame grabbers, and other machine vision components.

Below half a gigapixel, it makes more sense to go with a CPU, Hoare said. Above this data processing threshold, either an FPGA or a GPU will be needed. The higher power requirements of a GPU, however, mean that an FPGA will often be best for embedded vision.
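Hoare’s rule of thumb can be expressed as a simple calculation of pixel throughput. The following is a minimal sketch, not a tool used by Concurrent EDA: the function names are hypothetical, the camera settings are made-up examples, and treating a rate exactly at the threshold as "above" is an assumption.

```python
THRESHOLD_PIXELS_PER_S = 0.5e9  # about half a gigapixel per second


def pixel_rate(width: int, height: int, fps: float) -> float:
    """Pixels per second that the processor must handle."""
    return width * height * fps


def suggest_processor(width: int, height: int, fps: float,
                      power_constrained: bool = True) -> str:
    """Apply the half-gigapixel rule of thumb described in the text."""
    if pixel_rate(width, height, fps) < THRESHOLD_PIXELS_PER_S:
        return "CPU"
    # Above the threshold, either device works; a tight power budget,
    # as in embedded vision, tips the choice toward the FPGA.
    return "FPGA" if power_constrained else "FPGA or GPU"


# Hypothetical cameras for illustration.
print(suggest_processor(1920, 1080, 60))    # about 0.12 gigapixels/s
print(suggest_processor(3840, 2160, 120))   # about 1.0 gigapixels/s
```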

In addition to handling high data rates, another situation in which to go with an FPGA is when an application needs a rapid, reliable response. An example would be a vision system used in a control loop for product moving rapidly on a conveyor belt. The amount of data processed per image is small, but the system must almost instantly assign a pass/fail category to the parts as they speed by.

“The big strength of an FPGA in these applications is the low latency, which can be very important for critical applications,” said Mario Bergeron, machine learning specialist at Avnet, a global technology distributor and solutions provider.

Putting an FPGA to work in an application may not require any new hardware, according to Hoare. Smart cameras typically have an FPGA built into them to handle the interface to the sensor or to the outside world. These real-time tasks entail dealing with high-speed data transmission while not needing a full-blown operating system. Hence, they are good fits for an FPGA.

Most cameras allow no access to their internal FPGA, or they have no unused capacity in the FPGA fabric that can be programmed, or both. The cameras sold by Concurrent, however, provide both access and space. Concurrent therefore crafts solutions that go in a camera’s firmware, putting customized compute and tailored machine vision capabilities right at the edge. The firmware, for instance, might run through an algorithm to find an area of interest and then locate edges or the centers of objects within that area.
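The kind of processing described here can be modeled in software before it is committed to hardware. The sketch below is a plain Python illustration, not Concurrent’s firmware: it assumes a grayscale frame stored as a list of rows, uses a simple brightness threshold to find the area of interest, and then computes the center of the bright object inside it. The function names and the sample frame are hypothetical.

```python
def find_region_of_interest(image, threshold):
    """Return (top, left, bottom, right) bounding all pixels above threshold, or None."""
    rows = [r for r, row in enumerate(image) if any(p > threshold for p in row)]
    cols = [c for c in range(len(image[0]))
            if any(row[c] > threshold for row in image)]
    if not rows:
        return None
    return rows[0], cols[0], rows[-1], cols[-1]


def object_center(image, roi, threshold):
    """Return the (row, column) centroid of above-threshold pixels inside the ROI."""
    top, left, bottom, right = roi
    count = sum_r = sum_c = 0
    for r in range(top, bottom + 1):
        for c in range(left, right + 1):
            if image[r][c] > threshold:
                count += 1
                sum_r += r
                sum_c += c
    return sum_r / count, sum_c / count


# Made-up 5 x 6 frame containing one bright 3 x 3 object.
frame = [
    [0, 0, 0, 0, 0, 0],
    [0, 0, 9, 9, 9, 0],
    [0, 0, 9, 9, 9, 0],
    [0, 0, 9, 9, 9, 0],
    [0, 0, 0, 0, 0, 0],
]

roi = find_region_of_interest(frame, threshold=5)
center = object_center(frame, roi, threshold=5)
print("ROI:", roi)
print("Center:", center)
```

Restricting the second pass to the area of interest keeps the per-frame work small, which is part of what makes this style of processing attractive at the edge.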

Harnessing the power and usefulness of FPGAs in machine vision requires that the chips be programmed. The code is written into the hardware of the chip, and the entire process is more complex than the programming needed for a CPU or GPU via software alone.

Hoare said FPGA programming is “really a chip design process.”

The steps involve simulating the proposed FPGA layout, followed by examining signals and waveforms moving through the chip. Engineers analyze these results, making sure there are no errors, such as the signal from one part of the chip getting to another part too early or too late. Then the engineers program the FPGA with the layout and debug it. If there’s an error, the FPGA may not work, or it might produce the wrong results. If either of these is the case, the entire process starts again.
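The "too early or too late" errors correspond to what chip designers call hold and setup violations. The sketch below is a heavily simplified model of that check, assuming a single clock and ignoring clock skew; the path names, delays, and register timing values are invented for illustration, and vendor tools perform a far more thorough analysis across every path in the design.

```python
from dataclasses import dataclass


@dataclass
class Path:
    """A signal path between two registers (hypothetical example data)."""
    name: str
    min_delay_ns: float  # fastest the signal can arrive
    max_delay_ns: float  # slowest the signal can arrive


def check_path(path: Path, clock_period_ns: float,
               setup_ns: float, hold_ns: float) -> list:
    """Return a list of timing problems for one path (empty if it passes)."""
    problems = []
    # Setup: data must arrive early enough before the next clock edge.
    if clock_period_ns - setup_ns - path.max_delay_ns < 0:
        problems.append("too late")
    # Hold: data must not change too soon after the current clock edge.
    if path.min_delay_ns - hold_ns < 0:
        problems.append("too early")
    return problems


# Assumed 200-MHz clock (5-ns period) and register requirements.
CLOCK_NS, SETUP_NS, HOLD_NS = 5.0, 0.5, 0.2

paths = [
    Path("sensor_to_filter", 1.0, 3.0),
    Path("filter_to_output", 1.0, 4.8),
    Path("bypass", 0.1, 2.0),
]

results = {p.name: check_path(p, CLOCK_NS, SETUP_NS, HOLD_NS) for p in paths}
for name, problems in results.items():
    print(name, "->", problems or "ok")
```

Any path that reports a problem would send the design back for another iteration, which is the loop the engineers repeat until the layout is clean.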

FPGA vendors are making programming easier. Joe Mallett, senior marketing manager in Microchip’s FPGA business unit, said past challenges for applications developers arose due to the various programming languages and tools.

“However, with the development of high-level synthesis tools, this is becoming a much lower barrier to adoption,” he said.

Engineers can develop a design in a familiar software environment, verify functionality, and transform the code into equivalent hardware modules. Once confirmed, Mallett said, the modules can become hardware IP cores that can be integrated into a larger system. Such cores provide building blocks to use in developing a solution, and they can be reused in other applications.

Intel, AMD, Lattice Semiconductor, and other vendors also have tools designed to make programming FPGAs easier.

But all of these tools, Hoare said, have their own quirks and limitations.

Moving a function, such as compressing a video stream, from software running on a CPU to hardware on an FPGA will cut power consumption and space. According to Intel’s Poulin, these reductions will ultimately translate to a lower cost to perform the task. But it doesn’t make sense to send every function to an FPGA, he said — in part, because the tools to translate software code into a gate layout are not perfect.

“It does take engineering,” Poulin said. “It is not seamless.”

A commonly used arrangement is to segregate functions, with some being handled by an FPGA and the rest running on a CPU. This technique requires careful planning during the design, but it maximizes performance while minimizing cost.

Companies such as Concurrent EDA, Avnet, Gidel, and others offer services to smooth the FPGA path and speed up the time it takes to go from vision concept to implementation. For instance, Gidel, which specializes in FPGA technology, says its ProcVision Suite cuts the time spent developing an image signal processing design threefold or more. The result can be directly ported to an Intel FPGA by replacing the basic modules that make up the solution.

“Gidel’s innovative development tools significantly simplify the development task of hardware and FPGA software. They reduce the time to market of FPGA-based imaging and vision systems,” said Hai Migdal, director of EMEA sales for the company.

In addition to making it easier to program FPGAs, vendors are also improving the performance of the chips themselves. At Intel’s Innovation conference, for example, the company announced that it would be moving FPGAs from legacy production processes to its latest manufacturing technology, thereby cutting power consumption and reducing FPGA chip size even further.

These industry innovations should result in even better performance for embedded vision systems, which will benefit from the more powerful edge computing and image analysis. The improved capabilities could be particularly useful as camera resolutions grow, applications demand 3D imaging, sensors expand their spectral windows, and interface data transmission rates climb.