Decentralizing image collection and processing offers users numerous benefits, and with a bevy of new, ready-to-use products, the promise of Industry 4.0 may soon be fulfilled.

KEVIN MCCABE, IDS IMAGING DEVELOPMENT SYSTEMS INC.

The raw computing power within most embedded systems is an order of magnitude greater than only a few short years ago. More robust computing power, coupled with recent optimizations in how AI networks are processed, has created new opportunities for the use of AI on edge devices, specifically by using convolutional neural networks (CNNs) to classify images into categories, detect objects in images, or find unexpected anomalies in images, such as damage on a produced part. Yet moving AI processing to the edge brings with it new challenges, including making AI systems user-friendly within a manufacturing environment.

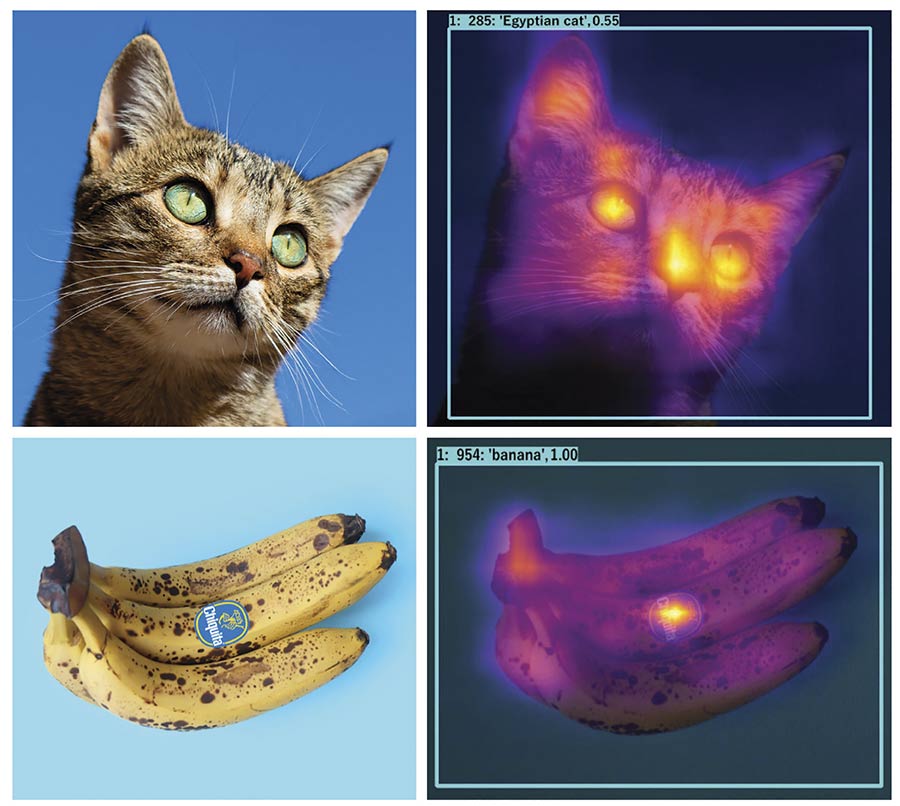

The use of AI on edge devices is growing, notably with the integration of convolutional neural networks (CNNs) to classify images. A CNN attention map depicts the features of an image and displays them next to a false-colored heat map. Courtesy of IDS.

The march toward automation has been marked by notable advancements since the late 20th century. First came the incorporation of programmable logic controllers (PLCs) within industrial systems, informing each component on what to do within a complex industrial process. The implementation of machine vision marked a second milestone, in which systems that incorporated a camera and software on a PC processed images and output results. This proved beneficial in controlling processes and monitoring the quality of goods.

Today, experts are predicting a new era of digitalization in which devices and systems used in production are networked to communicate with one another, a phenomenon known as Industry 4.0. Two major computing paradigms can be applied to this digitalization: cloud-based and edge-based computing. In cloud computing, data is transferred out of a local system for on-demand analysis in data centers. In edge computing, processing occurs within the data collection device itself or on a dedicated device co-located with the sensor.

Choosing the right protocol

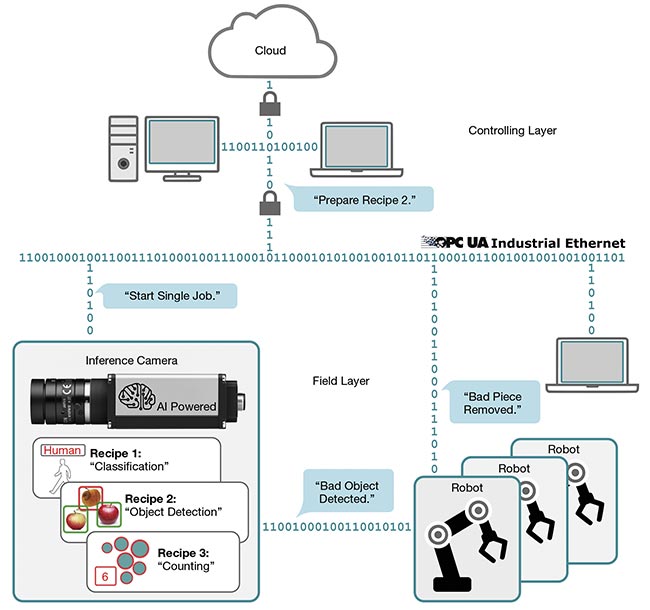

There are several choices for managing the interconnection of these digital devices, and much depends on the complexity of the operation. Edge devices can communicate with each other through general protocols such as TCP/IP (Transmission Control Protocol/Internet Protocol) and REST (representational state transfer), proprietary protocols from PLC manufacturers, and manufacturer-agnostic protocols such as OPC UA (Open Platform Communications Unified Architecture). Choosing a single protocol may be ideal because it means less maintenance and less complexity when troubleshooting, but the single protocol may limit the choice of usable devices.

OPC UA is a key protocol that has been established for open, secure, and extensible communication between edge computing devices. OPC UA is platform- and OS-independent, so it can be used and deployed on a wide array of devices. It uses the TCP/IP model and piggybacks on the security options that are available for it, such as firewalls and other forms of packet authentication. Device manufacturers create an OPC UA server and client on their device that communicate with other OPC UA-compliant servers and software application clients (Figure 1). The protocol allows not only a simple transmission of a result from a process (OK/NOK), but also additional data surrounding the result.

Figure 1. An example of an OPC UA (Open Platform Communications Unified Architecture) network. Courtesy of IDS.

Using OPC UA on an industrial camera with on-board processing, for example, would allow the result of a process, the configuration of the camera, and some metadata (such as an accurate timestamp and I/O states) to be transmitted to other devices. This is all possible without OPC UA being dependent on hardware-specific APIs (application programming interfaces). Each device manufacturer creates an OPC UA recipe and configuration so that their device effectively communicates with other devices, and the devices are not required to understand each other’s internal workings.
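As a rough illustration of what "a result plus surrounding data" might look like, the sketch below bundles a verdict, a timestamp, I/O states, and camera configuration into one serializable record. It does not use any OPC UA library, and the class name, field names, and JSON encoding are all assumptions made for this example rather than part of the OPC UA standard or any vendor's implementation.

```python
import json
from dataclasses import dataclass, asdict
from datetime import datetime, timezone


@dataclass
class InspectionResult:
    """Hypothetical payload; field names are illustrative, not an OPC UA schema."""
    verdict: str          # "OK" or "NOK"
    timestamp_utc: str    # ISO 8601 capture time in UTC
    io_states: dict       # e.g. {"trigger_in": True}
    camera_config: dict   # e.g. {"exposure_ms": 4.0}


def build_result(passed, io_states, camera_config, when):
    """Bundle the pass/fail verdict with the metadata that surrounds it."""
    return InspectionResult(
        verdict="OK" if passed else "NOK",
        timestamp_utc=when.astimezone(timezone.utc).isoformat(),
        io_states=dict(io_states),
        camera_config=dict(camera_config),
    )


def serialize(result):
    """Encode the record as JSON so any consumer can read it without device APIs."""
    return json.dumps(asdict(result), sort_keys=True)
```

A receiving device only needs to understand the agreed record layout, not how the camera arrived at the verdict, which is the decoupling the paragraph above describes.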

CNNs in action

Rather than mimicking generalized human intelligence and behavior, as artificial general intelligence would, narrow AI solves specific problems, such as determining whether a shipping crate has been damaged and how.

AI first entered machine vision through machine learning, in which algorithms learn from example images fed to them. A subset of this type of algorithm, deep learning, has recently become the focus of attention for image processing. Deep learning uses CNNs to process the examples given to it. CNNs are algorithms in which many layers of networked computing cells, called neurons, are used to find complex relationships in data. CNNs commonly classify objects in images into categories, detect objects in images, or find unexpected anomalies in images, such as damage on a produced part.

Consider the example of autonomously charging an electric vehicle in a parking lot. One vision task involves finding the charging plug on the car, which at first may seem easy to perform using traditional, rules-based machine vision. An electrical plug has a fixed shape, with fixed color and text.

However, the backdrop is the uncontrolled and varying environment of a parking lot, not a well-controlled production line. Factors such as varying light levels, the various colors of a car’s body panels, dynamic image backgrounds, and a variety of styles of connectors (for example, a Tesla connector vs. a J plug) pose a serious challenge for rules-based machine vision. One could potentially keep defining more rules for various connector types, edge cases, and lighting conditions, but this can get complicated quickly. Instead, a user could take images of cars and plugs and then train an AI network to learn how to find a plug in various conditions.

Each of these CNN types has applications in industry. A classification network could determine whether an object is an apple or an orange, for example. The network could be trained with examples of each, and then after training, the resulting network would be able to infer which of the two categories an object belongs to. Similarly, an object detection network could be trained with examples of one object and then be able to locate and count those objects within an image.

Finally, an anomaly detection network could be trained with images of “good” parts and then be able to determine when an imaged part deviates from the given examples. Combinations of these networks are also possible, such as using object detection to find a part, and then using an anomaly detection network to determine whether the part has a flaw.
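The idea of training only on "good" parts can be sketched without a neural network. The example below is a deliberately simple stand-in, not a CNN: it assumes each part has already been reduced to a small feature vector, learns the centroid of the good examples, and flags anything that lies farther away than the training data did. The function names and the `margin` parameter are hypothetical.

```python
import math


def fit_good_model(good_samples, margin=1.5):
    """Learn a centroid and distance threshold from "good" feature vectors only."""
    n = len(good_samples)
    dims = len(good_samples[0])
    centroid = [sum(s[d] for s in good_samples) / n for d in range(dims)]
    # The farthest good sample sets the baseline; margin adds tolerance.
    max_dist = max(math.dist(s, centroid) for s in good_samples)
    return centroid, max_dist * margin


def is_anomaly(sample, model):
    """A sample is anomalous when it deviates more than the learned threshold."""
    centroid, threshold = model
    return math.dist(sample, centroid) > threshold
```

In a real anomaly detection network the feature vectors and the notion of distance are learned from images, but the decision has the same shape: no examples of defects are needed, only a description of what "good" looks like.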

Despite their potential, CNNs are only suited for a subset of all machine vision tasks. These tasks can be segregated into two categories of decision making: quantitative and qualitative. Quantitative results would generate statements such as “The diameter of this hole is 2 cm,” or “This barcode means 0086754.” Traditional rules-based machine vision works well for these circumstances where the variables are well understood.

Qualitative results such as “These grapes look rotten,” “A piece is missing from this assembly,” or “This person looks tired,” are well suited for artificial intelligence. A rule of thumb emerges: If an expert employee could make a decision without grabbing calipers, AI could likely be trained to do it.

Bringing AI to the edge

AI has traditionally operated in the cloud, or, at the least, in industrial PCs with powerful GPUs and swaths of memory capable of combing through incoming image data. CNNs require billions of calculations to process an input, and they have millions of parameters that describe their network architecture. Even with the recent boom in raw computing power that is inexpensive and power-efficient, CNNs have been so complicated and demanding that, for most embedded applications, such networks were not viable. Techniques that prune these parameters and compress networks, along with the development of parameter-efficient networks such as MobileNet, EfficientNet, and SqueezeNet, have recently made CNNs suitable for embedded use.
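To give a sense of where parameter savings can come from, the sketch below compares the weight count of a standard convolution layer with that of a depthwise separable convolution, the building block MobileNet is known for. Biases are ignored, and the layer sizes in the usage note are illustrative assumptions rather than figures from any particular network.

```python
def standard_conv_params(kernel, c_in, c_out):
    """Weights in a standard convolution: one kernel x kernel filter per in/out channel pair."""
    return kernel * kernel * c_in * c_out


def depthwise_separable_params(kernel, c_in, c_out):
    """Weights in a depthwise convolution followed by a 1x1 pointwise convolution."""
    depthwise = kernel * kernel * c_in   # one spatial filter per input channel
    pointwise = c_in * c_out             # 1x1 convolution that mixes channels
    return depthwise + pointwise
```

For a 3 × 3 layer with 128 input channels and 256 output channels, `standard_conv_params(3, 128, 256)` gives 294,912 weights, while `depthwise_separable_params(3, 128, 256)` gives 33,920, roughly a ninefold reduction for that one layer.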

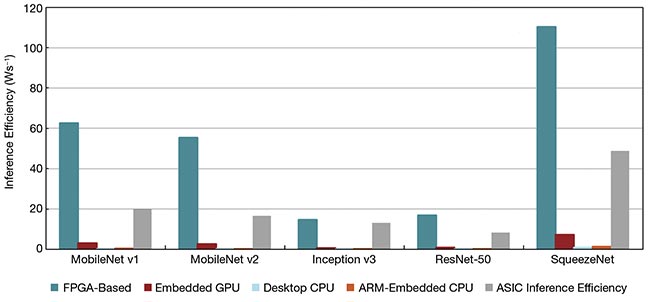

Following this increase in efficiency, the advent of efficient AI accelerators is the second piece of this computational puzzle (Figure 2). CPU-based acceleration is flexible and well supported, but the latency, especially at the embedded level, is poor. CNNs require vast amounts of parallel computational power, and current CPU architecture is a poor match for the operations that networks must do. GPU-based acceleration is well established in deep learning. Since GPUs contain many parallel cores, this architecture is much more suitable for CNNs, though embedded GPUs are still somewhat inefficient in terms of inferences per watt. Field-programmable gate arrays (FPGAs), similar to GPUs, are fantastic at parallel modes of operation, efficient in terms of inferences per watt, and reconfigurable in the field. However, FPGAs are complicated and require specialists to program AI accelerators for them. A full ASIC solution would be a clear winner, as ASICs are customizable, efficient, and cost-effective at large volumes. But flexibility is where ASICs fail. They can take years to develop, while AI is a fast-moving sector of technology. ASICs’ custom architecture could fall behind if a new leap forward in AI network architecture deviates from current networks.

Figure 2. The inference efficiencies of various CNNs. Courtesy of IDS.

Now that the computational burden is somewhat addressed, many factors are bringing AI and computing to the edge. The challenge of managing interface bandwidth causes engineers to look for decentralized computing. Processing data in the cloud has the inherent risks of transferring the data across the internet. As factories become more multipurpose, with vastly different production lines, engineers search for flexibility in adjusting the vision solutions implemented on these lines.

Interface bandwidth is becoming

a premium. Many manufacturing solutions require multiple high-resolution cameras, not to mention other sensors, and these interfaces become bandwidth-limited quite quickly. While a PC could be expanded with multiple interface ports, there is still a practical upper limit to the expandability of industrial PCs, not to mention the fact that the many interface cables going back to the PC can be difficult to

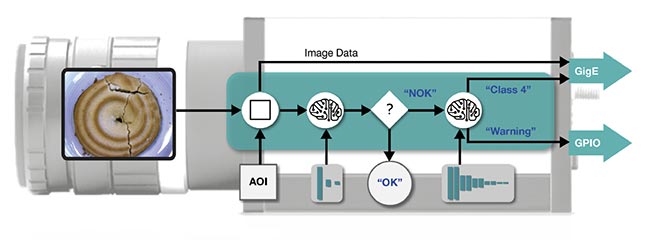

manage and install. Instead, an edge device could take the incoming image from a camera, process it, and send out the appropriate signals to the next device in the process (e.g., OK/NOK), without the need to transfer entire images to a host for processing (Figure 3). If some image data still needed to be collected, the edge device could identify and send only the important portion of the image, saving precious bandwidth.

Figure 3. An AI-based camera transmits only the needed signals to other parts of the manufacturing process, saving bandwidth. Courtesy of IDS.
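The scale of the saving is easy to estimate with back-of-the-envelope arithmetic. The sketch below compares streaming uncompressed frames with sending one small result message per frame. The resolution, bit depth, frame rate, and message size in the usage note are hypothetical values chosen for illustration, not measurements from any camera.

```python
def raw_stream_bits_per_second(width, height, bits_per_pixel, fps):
    """Bandwidth needed to send every uncompressed frame to a host."""
    return width * height * bits_per_pixel * fps


def result_stream_bits_per_second(message_bytes, fps):
    """Bandwidth needed when the edge device sends only a result per frame."""
    return message_bytes * 8 * fps
```

Assuming a 1920 × 1080 monochrome sensor at 8 bits per pixel and 30 frames per second, `raw_stream_bits_per_second(1920, 1080, 8, 30)` comes to 497,664,000 bits per second, about 498 Mbit/s. Assuming a 64-byte result message at the same rate, `result_stream_bits_per_second(64, 30)` is 15,360 bits per second, smaller by a factor of 32,400.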

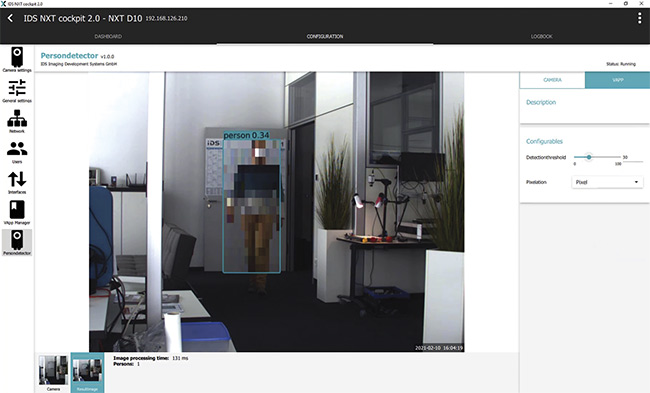

Processing data on an edge device improves the security of a system. Only the data that needs to be collected is moved across the network, rather than the entire image. And because the processing of these images is decentralized, there is less probability of the process being disrupted and data being compromised from a single attack. When people are recorded in the images, such as during access control, occupancy counting, or presence/absence detection, all personal data remains on the device and can be omitted from the result that is shared to other devices (Figure 4).

Figure 4. An AI-based camera removes detected persons before transmitting image data. Courtesy of IDS.
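One simple way to keep personal data on the device is to blank out the regions where people were detected before anything is transmitted. The sketch below assumes that a detection step has already produced bounding boxes and that the image is a plain list of pixel rows. The function name and the `(top, left, bottom, right)` box format are assumptions for illustration, not a description of how any particular camera does this.

```python
def mask_regions(image, boxes, fill=0):
    """Return a copy of the image with each (top, left, bottom, right) box filled.

    Boxes use half-open ranges and are clipped to the image bounds.
    The original image is left untouched.
    """
    masked = [row[:] for row in image]
    height = len(masked)
    width = len(masked[0]) if height else 0
    for top, left, bottom, right in boxes:
        for y in range(max(0, top), min(height, bottom)):
            for x in range(max(0, left), min(width, right)):
                masked[y][x] = fill
    return masked
```

Only the masked copy would leave the device, so downstream systems can still count or locate objects without ever receiving the pixels that show a person.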

Spreading computational load across embedded devices also provides a clear separation of specific tasks. In a factory, hundreds of workstations may require a custom image-classification task in order to analyze objects at each station. Hosting multiple different AI networks in the cloud quickly becomes expensive. Instead, multiple AI-based cameras that only differ in the training that their networks have received could be deployed. Further, as the lines change, the networks could be retrained, using the captured images, when the CNN has trouble with the changes.

Despite these advantages, AI still has a significant barrier to overcome before it can become a revolutionizing force in Industry 4.0. Machine learning works very differently from rules-based image processing, and thus it requires new core competencies and experience. Inference decisions made by a network seem opaque, but in the end they are the result of a mathematical system. This means they can be analyzed and, most importantly, reproduced. The answer lies not in hardware alone but in software that supports the powerful tool of AI and enhances it.

A programming API is not enough to address this high barrier to entry. The software must break down the steps of the AI workflow, and it will need to make the behavior of these networks more transparent to users. CNNs are not fully understood by those looking for factory-floor solutions, and many users consider the task of troubleshooting poor results to be impossible without knowing the intricate details of the network. To truly break into industrial applications, producers of AI solutions must address this issue with features that help to visualize the process, such as CNN attention maps or statistical tools such as confusion matrices.
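A confusion matrix is one of the simpler transparency tools to build. The sketch below tabulates how often each true class was predicted as each class and derives per-class recall from it. The function names and the "ok"/"nok" labels in the usage note are illustrative assumptions.

```python
from collections import Counter


def confusion_matrix(true_labels, predicted_labels, classes):
    """Rows are true classes, columns are predicted classes, in the order of `classes`."""
    counts = Counter(zip(true_labels, predicted_labels))
    return [[counts[(t, p)] for p in classes] for t in classes]


def per_class_recall(matrix, classes):
    """Fraction of each true class that the network labeled correctly."""
    recall = {}
    for i, name in enumerate(classes):
        total = sum(matrix[i])
        recall[name] = matrix[i][i] / total if total else 0.0
    return recall
```

With true labels `["ok", "ok", "ok", "nok", "nok"]` and predictions `["ok", "ok", "nok", "nok", "ok"]`, the matrix for classes `["ok", "nok"]` is `[[2, 1], [1, 1]]`. A user can read from it that one of the two defective parts slipped through, without needing to know anything about the network's internals.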

With the pandemic affecting supply chains globally, edge AI systems could be part of the solution. Embedded systems could soon check the quality of a part created by 3D printing or some other miniaturized production process. And making adjustments to edge AI systems could be as easy as uploading a new network. These small production floors become hyperflexible, meeting the needs of whatever product is in demand, potentially alleviating strains on global logistics and creating more localized production of inexpensive products and parts.

Most current edge AI systems are not so easy to reconfigure. Imagine an edge AI vision system that is as easy to use as a smartphone, with little to no experience required in AI or application programming. Manufacturers need to work on lowering this barrier to entry for AI machine vision. AI still has a mystique about it, and manufacturers must work to address this mystique before AI can truly become a revolutionizing force in industry.

Meet the author

Kevin McCabe is a senior applications engineer at IDS Imaging Development Systems Inc. He helps other engineers to integrate IDS 2D, Ensenso 3D, and NXT AI inference cameras, and he provides in-depth product training to customers, distributors, and resellers to ensure a quick time to market. He holds bachelor’s and master’s degrees in electrical engineering from the University of Massachusetts Lowell; email: [email protected].