Breakthroughs in path planning, collision detection, and detailed 3D point maps have made it easier for robots to find parts in bins accurately, paving the way for advances in bin picking.

GRANT ZAHORSKY, CANON USA

As the Fourth Industrial Revolution nears, companies all over the world will need to prepare for the immense changes coming their way. Machine vision, used in combination with artificial intelligence and deep learning, promises to make manufacturing systems more productive than ever, and this is driving a greater focus on robot-based bin picking. High-level collision detection and path planning allow robots to move independently, without human intervention. High-quality structured light projection creates a detailed 3D map of points so that the system can accurately identify the location and orientation of the parts in the bin.

The effect of artificial intelligence can already be seen in robots, self-driving cars, and facial recognition, and AI may soon help address society’s most pressing issues. Advances in AI are being made every day, and as the technology grows more sophisticated, it will continue to merge with industry.

These new technologies are paving the way for companies around the world to create a human-like, self-reliant industrial environment that is more dependable and more profitable than ever thought possible. The working environment will also be safer than it is for today’s workforce, with robots helping to resolve the present challenges of labor shortages and high labor costs by filling vacant positions, many of which are considered undesirable.

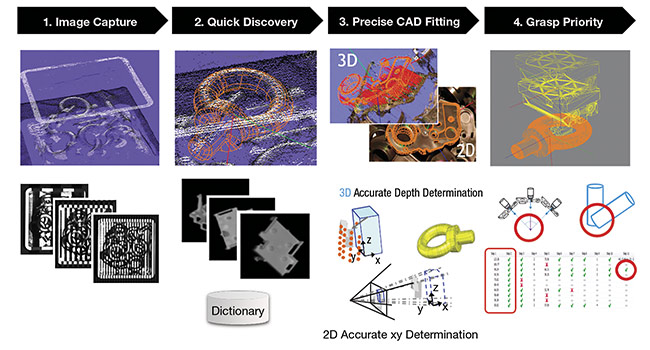

The Canon 3D Machine Vision System’s four-step process for accurate and precise part recognition and grasping. Courtesy of Canon.

Eyes behind automation

Machine vision provides automatic, image-based inspection and analysis for a variety of applications, such as quality inspection, system analysis, robot guidance, and intelligent pick-and-place. The goal is to give robots “eyes” so that they can take on more tasks, without companies needing several people to do the same work more slowly and at higher cost. Large volumes of machine vision data can be processed quickly to identify and flag defects on parts. System analysis helps a company understand deficiencies that might otherwise go unnoticed by employees and upper management, allowing fast and effective intervention. Robot guidance allows robots to move through complex spaces, increasing the effectiveness of a system. Finally, intelligent pick-and-place applications support growth in the workplace by reassigning employees from repetitive tasks to more useful and sophisticated positions, while the original positions continue to be filled through self-sufficient operation¹.

To assess the benefits and roles of machine vision better, it helps to understand how and why it works. One example of machine vision is robot-based bin picking. Such a system first

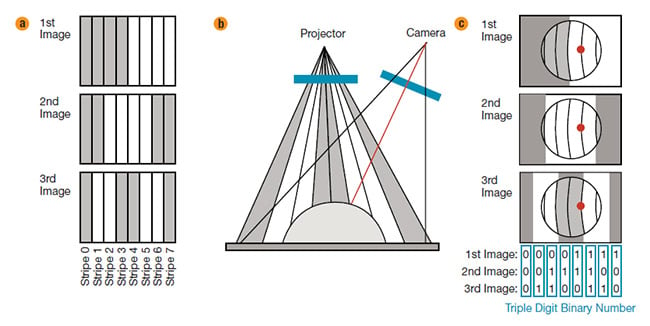

uses a technique called space encoding to project different structured light patterns onto a bin of parts. This method enables the use of an onboard projector and camera to project these patterns of light and dark stripes onto the objects and capture them with the camera. By projecting multiple types of patterns using a binary code called “gray code,” which is frequently used with systems that employ sensors, the system can “see” these parts in the bin and their location. The system does so by analyzing the stripes and how they are deformed by the height of the parts and bin in the various images that are captured. Essentially, the black portions of the images are assigned “0” while the white portions are assigned “1.”

An example of the space encoding method used in Canon’s 3D Machine Vision System. Pattern images (a), schematic of the projection and image capture (b), and the images captured by the camera (c). Courtesy of Canon.
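Space encoding can be illustrated with a short Python sketch. It is not Canon’s implementation: it assumes ideal, already-binarized images (each pixel reads cleanly as 0 or 1), and the function names are hypothetical. With n patterns, each projector column receives an n-bit binary-reflected Gray code, so 2^n stripes can be told apart, and a camera pixel’s sequence of 0s and 1s across the images is converted back into the index of the stripe that lit it.

```python
def gray_encode(index: int) -> int:
    """Binary-reflected Gray code of a stripe index."""
    return index ^ (index >> 1)


def gray_decode(code: int) -> int:
    """Invert gray_encode by XOR-ing together every right shift of the code."""
    index = 0
    while code:
        index ^= code
        code >>= 1
    return index


def make_patterns(num_bits: int, width: int) -> list[list[int]]:
    """Build num_bits stripe patterns for a projector `width` columns wide.

    Pattern k holds bit k (most significant first) of each column's Gray code:
    1 means a white (lit) stripe, 0 a black (dark) one.
    """
    num_stripes = 2 ** num_bits
    patterns = []
    for k in range(num_bits):
        shift = num_bits - 1 - k
        row = []
        for col in range(width):
            stripe = col * num_stripes // width  # stripe this column belongs to
            row.append((gray_encode(stripe) >> shift) & 1)
        patterns.append(row)
    return patterns


def decode_pixel(bits: list[int]) -> int:
    """Turn one pixel's observed 0/1 sequence (first pattern = MSB) into a stripe index."""
    code = 0
    for b in bits:
        code = (code << 1) | b
    return gray_decode(code)


# Usage: with 4 patterns on a 16-column projector, each column is its own stripe.
patterns = make_patterns(num_bits=4, width=16)
observed = [p[11] for p in patterns]  # what a pixel lit by column 11 would see
print(observed, decode_pixel(observed))  # [1, 1, 1, 0] 11
```

Gray code is a natural fit here because neighboring stripes differ in exactly one pattern, so a pixel misread at a stripe boundary decodes to one of the two adjacent stripes rather than jumping to a distant one, as can happen with plain binary.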

This process is applied to every pixel in the image, so the stripes can be identified in each image and pattern. To achieve high accuracy and resolution, 1000-plus such patterns are projected. The system captures images of these varying patterns and obtains a highly detailed set of measurement points, called a point cloud, that represents the three-dimensional shape of the randomly oriented parts in the bin. Next, the system uses physical characteristics of the parts, such as shine, shadow, rust, and oil, to roughly determine where the parts are in the bin and to collect 2D data. It combines this 2D data with the 3D point cloud and the CAD data provided to precisely fit a model to each part in the bin. The recognized parts are then analyzed, and the best candidates (the highest-scoring parts in the bin that pass all collision and occlusion checks) are chosen.
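The article does not describe how decoded stripes become a point cloud. A common textbook approach is triangulation between camera and projector, sketched here for an idealized, rectified rig: parallel optical axes, the same focal length f (in pixels) and principal point for both devices, and a baseline B. Under those assumptions, depth is Z = f·B/d, where d is the disparity between a pixel’s camera column and its decoded projector column. The rig parameters, the disparity sign convention, and the names are hypothetical; a real system relies on full camera-projector calibration.

```python
from dataclasses import dataclass


@dataclass
class RectifiedRig:
    """Idealized camera-projector pair with parallel optical axes (an assumption)."""
    focal_px: float     # focal length in pixels, assumed equal for camera and projector
    baseline_mm: float  # distance between the camera and projector centers
    cx: float           # principal point x (pixels), assumed shared by both devices
    cy: float           # principal point y (pixels)


def triangulate(rig: RectifiedRig, u: int, v: int, proj_col: int):
    """Return (X, Y, Z) in mm for camera pixel (u, v) lit by projector column proj_col.

    Returns None when the disparity is not positive, i.e. no valid intersection
    under the sign convention assumed here.
    """
    disparity = u - proj_col
    if disparity <= 0:
        return None
    z = rig.focal_px * rig.baseline_mm / disparity  # depth: Z = f * B / d
    x = (u - rig.cx) * z / rig.focal_px             # back-project through the pinhole
    y = (v - rig.cy) * z / rig.focal_px
    return (x, y, z)


def build_point_cloud(rig: RectifiedRig, stripe_map):
    """stripe_map[v][u] holds the decoded projector column for each pixel, or None."""
    cloud = []
    for v, row in enumerate(stripe_map):
        for u, col in enumerate(row):
            if col is None:
                continue  # pixel in shadow or not decodable
            point = triangulate(rig, u, v, col)
            if point is not None:
                cloud.append(point)
    return cloud


rig = RectifiedRig(focal_px=1000.0, baseline_mm=100.0, cx=320.0, cy=240.0)
print(triangulate(rig, u=420, v=240, proj_col=320))  # (100.0, 0.0, 1000.0)
```

A larger disparity means a closer surface, which is why the stripes appear shifted more strongly over tall parts than over the bin floor.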

Each chosen part is then analyzed further to determine the best way to pick it out of the bin, and all of this information, and more, is sent to the robot.
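The selection rule described above (keep only parts that pass the collision and occlusion checks, then prefer the highest score) can be sketched as follows. The `Candidate` fields, the score scale, and the check functions are hypothetical placeholders; a real system would test a gripper model against the bin walls and neighboring parts in the point cloud.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Candidate:
    """A recognized part; the fields are hypothetical, for illustration only."""
    part_id: int
    score: float            # model-fitting score, higher is better
    grasp_height_mm: float  # height of the planned grasp above the bin floor


def rank_candidates(candidates: list[Candidate],
                    collides: Callable[[Candidate], bool],
                    occluded: Callable[[Candidate], bool]) -> list[Candidate]:
    """Drop candidates that fail either check, then sort the rest by score, best first."""
    passing = [c for c in candidates if not collides(c) and not occluded(c)]
    return sorted(passing, key=lambda c: c.score, reverse=True)


# Usage with stand-in checks: grasps below 20 mm are assumed to hit the bin floor,
# and part 3 is assumed to be buried under a neighbor.
parts = [Candidate(1, 0.92, 40.0), Candidate(2, 0.97, 15.0), Candidate(3, 0.85, 60.0)]
ranked = rank_candidates(parts,
                         collides=lambda c: c.grasp_height_mm < 20.0,
                         occluded=lambda c: c.part_id == 3)
print([c.part_id for c in ranked])  # [1]
```

Part 2 has the best score but fails the collision check, so part 1 is picked first. An empty result signals that nothing is currently pickable.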

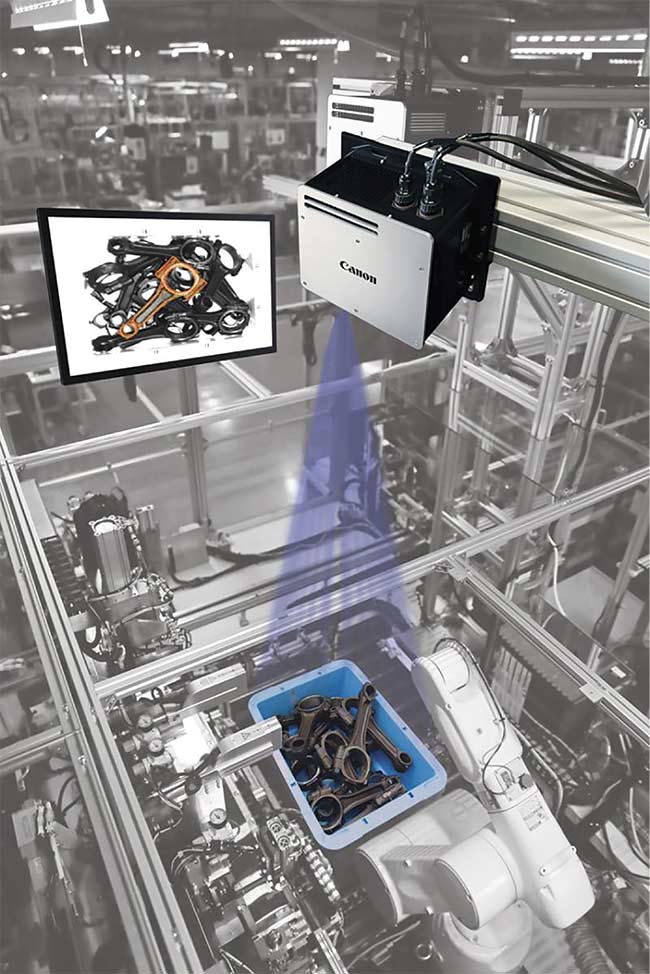

Close-up view of a machine vision-guided robot performing bin picking. Courtesy of Canon.

Machine vision is critical for the automotive industry, where fast cycle times and near-zero defects on production lines are requirements. Fast recognition and communication times (typically between 1.8 and 2.5 s) are important, but to be as efficient as possible, dynamic picking strategies with sophisticated collision checks are implemented entirely in software. In addition, optional software is available to further reduce the cycle time and workload of a system. This takes most of the burden off the customer, who can then focus on creating a better application.

Besides cycle time, precision and repeatability are also major factors that companies consider when deciding whether to invest in machine vision. A repeatability of 0.1 mm allows for more complex applications and fewer secondary processes. There is no need for additional 2D scans to determine the position and orientation of the parts; instead, the machine vision head can determine all of this information, and more, independently of any other process. The system also analyzes whether the bin is empty, which part is grasped and how, how many parts were recognized, and locally high positions, so the robot can position the parts if needed. This allows the robot to be not only independent but also “intelligent.” As a result, industrial robots can go beyond repetitive pick-and-place applications that involve basic welding or other minor processes, and the robot and the machine vision system can work together to accomplish much more intricate applications.
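Two of the extra outputs listed above, whether the bin is empty and where the locally high positions are, can be sketched on a height map: a grid of heights above the bin floor in millimeters, such as one might derive from the point cloud. The floor tolerance, the 3 x 3 neighborhood rule, and the function names are assumptions for illustration, not Canon’s method.

```python
def bin_is_empty(height_map, floor_tolerance_mm=2.0):
    """Treat the bin as empty when no cell rises above the floor tolerance."""
    return all(h <= floor_tolerance_mm for row in height_map for h in row)


def local_high_points(height_map, min_height_mm=2.0):
    """Return (row, col, height) for cells at least as high as all their neighbors.

    Only cells above min_height_mm count; results are sorted highest first.
    A flat plateau yields several tied points.
    """
    rows, cols = len(height_map), len(height_map[0])
    peaks = []
    for r in range(rows):
        for c in range(cols):
            h = height_map[r][c]
            if h <= min_height_mm:
                continue
            # Collect the up-to-8 surrounding cells, clipped at the map edges.
            neighbors = [
                height_map[rr][cc]
                for rr in range(max(0, r - 1), min(rows, r + 2))
                for cc in range(max(0, c - 1), min(cols, c + 2))
                if (rr, cc) != (r, c)
            ]
            if all(h >= n for n in neighbors):
                peaks.append((r, c, h))
    return sorted(peaks, key=lambda p: p[2], reverse=True)


# Usage: a toy 4 x 5 height map (mm above the bin floor) with two parts.
heights = [
    [0, 0, 0, 0, 0],
    [0, 30, 0, 0, 0],
    [0, 0, 0, 45, 0],
    [0, 0, 0, 0, 0],
]
print(bin_is_empty(heights))       # False
print(local_high_points(heights))  # [(2, 3, 45), (1, 1, 30)]
```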

Overhead view of a machine vision-guided robot selecting parts from a bin. Courtesy of Canon.

Artificial intelligence

Fei-Fei Li, co-director of the Stanford Institute for Human-Centered Artificial Intelligence and the Stanford Vision and Learning Lab, said, “AI is everywhere. It’s not that big, scary thing in the future. AI is here with us.” It can be found in IBM’s Watson, Google’s search algorithms, and Facebook’s facial recognition used to tag people in photos. Artificial intelligence can be defined as “the theory and development of computer systems that are able to perform tasks that normally require human intelligence, such as visual perception, speech recognition, decision-making, and translation between languages.”

Random bin picking plays a vital role in modern manufacturing environments. Courtesy of Canon.

To simplify this even further, AI can be split into two groups: “narrow” and “general.” Narrow AI is the artificial intelligence that exists in our world today. It is capable and intelligent but limited, because it is designed to carry out a single task. Narrow AI can be seen in applications such as image and face recognition, chatbots, and even self-driving cars. While these technologies are quite advanced, the artificial intelligence they use is not conscious or sentient.

With Cortana or Siri, for example, our requests aren’t consciously understood by the machine; instead, the machine processes our language and returns one response out of myriad preprogrammed ones. At the most basic level, the machine is simply following lines of code and doing what it is told, regardless of the task, reason, or logic behind the action.

In contrast, general AI can successfully perform any intellectual task that a human being can. This type of AI is frequently seen in sci-fi movies (think “Her” or the HAL 9000 computer from the “Space Odyssey” series); it converses with humans on an intellectual level, making its own decisions and using its own reasoning. General AI can take knowledge from one area, generalize it, and apply it to another; plan ahead based on past experience; and adapt to environmental changes as they occur. These abilities allow such machines to reason, solve puzzles, and exhibit common sense, capabilities that narrow AI does not yet have.

General AI is not yet fully developed, but narrow AI is already helping people all over the world accomplish tasks more efficiently. For example, AI can eliminate fixed maintenance schedules, which sometimes take unnecessary time and can cause secondary damage during the work itself. By collecting data on a system and analyzing it with AI, a company can instead implement predictive maintenance. This can give the machines a longer remaining useful life than they would have under routine maintenance schedules.
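The article does not specify what data or model drives predictive maintenance. The sketch below substitutes the simplest possible stand-in, a least-squares trend line rather than an AI model: it assumes a single hypothetical wear indicator (for example, vibration amplitude) logged against operating hours and a hypothetical failure threshold, then extrapolates the trend to estimate the remaining useful life.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def remaining_useful_life(hours, readings, threshold):
    """Hours from the last sample until the fitted trend reaches `threshold`.

    Returns None if the trend is flat or improving (no crossing predicted),
    and 0.0 if the trend says the threshold has already been passed.
    """
    slope, intercept = fit_line(hours, readings)
    if slope <= 0:
        return None
    crossing_hour = (threshold - intercept) / slope
    return max(0.0, crossing_hour - hours[-1])


# Usage: a hypothetical wear indicator rising 0.5 units every 100 operating hours.
hours = [0, 100, 200, 300]
vibration = [1.0, 1.5, 2.0, 2.5]
print(round(remaining_useful_life(hours, vibration, threshold=4.0), 1))  # 300.0
```

Maintenance can then be scheduled shortly before the predicted crossing instead of at a fixed interval; a learned model would replace the straight-line fit when wear is nonlinear or depends on several signals.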

The use of AI for inspection and quality purposes is growing and becoming a top priority for companies all over the world. With a sophisticated, continuously learning system, AI can focus specifically on identifying and flagging defective parts, which in turn reduces costly recalls. Ultimately, a company’s success is determined by whether the customer has a good experience with the product, which directly affects sales and income.

In 2018, there were an estimated 1.3 million industrial robots around the world, reflecting the rising demand for automation to address the labor shortages and high labor costs mentioned earlier in this article. The number continues to increase at a steady rate.

To offset the job losses that will come with these additions, companies are offering high-level training to prepare their employees for advancement.

As these technologies continue to advance, they will significantly change daily routines and workplaces. More machines and computers will be deployed alongside humans to further increase efficiency and output, a trend that can already be seen with 3D machine vision systems.

Meet the author

Grant Zahorsky has worked for Canon USA since 2019, focusing on the progression of the Canon RV-Series Machine Vision System. He has a bachelor’s degree in robotics engineering from Worcester Polytechnic Institute in Massachusetts; email: [email protected].

Reference

1. International Federation of Robotics (2018). Executive Summary World Robotics 2019 Industrial Robots. www.ifr.org/downloads/press2018/Executive%20Summary%20WR%202019%20Industrial%20Robots.pdf.

Labor Shortages Prompt Rising Demand for Robots

Today, inspection-related and simple pick-and-place jobs are increasingly expendable. In 2018, the International Federation of Robotics estimated that roughly 1.3 million industrial robots were in use around the world, and about 420,870 units were produced that same year. Since 2010, demand for industrial robots has increased significantly due to the ongoing trend toward automation and the rise of Industry 4.0. As a result, more and more humans are working side by side with robots in a relatively collaborative environment (roughly 99 robots per 10,000 employees), and this number is only growing. Annual industrial robot sales were projected to stand at (coincidentally) the same 420,870 units in 2019 and then grow about 12% per year, reaching 583,520 units in 2022¹.
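As a quick arithmetic check on that projection, assume 420,870 units is the 2019 figure and 583,520 units the 2022 figure, which is three annual steps. The compound annual growth rate then works out to roughly 11.5%, consistent with the rounded +12% per year. The helper name is hypothetical.

```python
def cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate that takes `start` to `end` in `years` steps."""
    return (end / start) ** (1 / years) - 1


# Assumed reading of the projection: 420,870 units in 2019 rising to 583,520 in 2022.
rate = cagr(420_870, 583_520, years=3)
print(f"{rate:.1%}")  # 11.5%
```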

Another issue that companies constantly battle is high labor costs. With fewer workers interested in performing repetitive tasks, companies are forced to raise average salaries to attract workers and to keep pace with the rising cost of living in the U.S. and around the world. People are simply not settling for low-paying, mundane jobs. By increasing automation, companies can not only mitigate the impact of labor shortages but also see dramatic increases in profitability by replacing recurring high salaries with one-time investments.

One of the most important arguments for industrial automation and its effect on the workforce is this: Industrial automation eliminates low-paying, mundane jobs, freeing workers who have the potential for more meaningful and significant work to pursue it. Removing these types of jobs from industry makes room for more sophisticated jobs that benefit both the employee and the company. Already, companies are offering higher-level training opportunities to prepare their employees for this shift.

In the automotive industry, training is offered in programming, design, and high-level maintenance. This benefits not only the company but also society’s general knowledge base, expanding what we, as humans, are capable of learning and doing.
