Machine learning algorithms can differentiate between medical images — and this analysis could ultimately help doctors determine whether skin biopsy and surgery are necessary in a clinical setting.

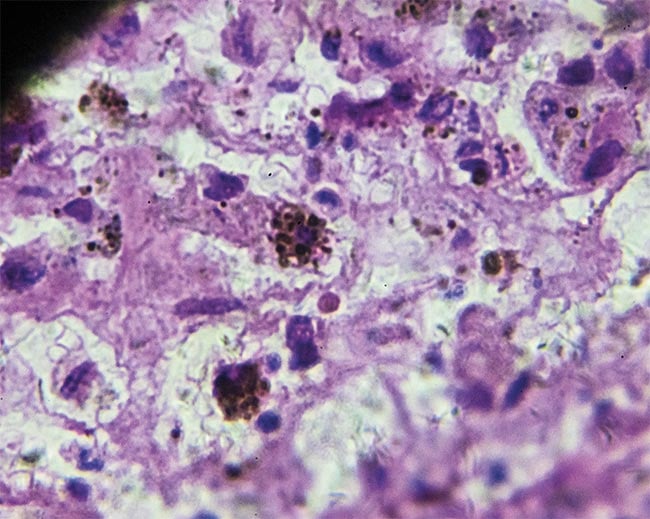

Historically, the gold standard for diagnostics in dermatology has been histopathology, which is the examination of tissues and cells under a microscope. The tissue sample that is extracted from the patient for this purpose, such as from a lesion or mole, is called a biopsy. It is a standard routine that clinicians carry out daily — but new advancements in artificial intelligence (AI) and machine learning may be able to disrupt this process by drawing data from many contexts during image analysis.

A melanoma biopsy from different regions under a microscope. Courtesy of iStock.com/jxfzsy.

In the contemporary workflow, labs usually receive histopathological results within a week. Strict specimen labeling, handling, and delivery are required. The results often lead to other tests of a lesion, such as immunohistochemistry, in which antibodies connected to a fluorescent dye are used to check for specific markers in the specimen. Immunohistochemistry is often used in conjunction with hematoxylin and eosin (H&E) staining, a common technique used to identify patterns and structures of cells.

Researchers believe AI could enhance this procedure by drawing diagnostic parallels noninvasively and by more effectively targeting patients who have skin cancer or other conditions.

This delineation is increasingly vital. According to the International Agency for Research on Cancer within the World Health Organization, skin cancers are the most common group of cancers diagnosed worldwide. In 2020 alone, an estimated 325,000 cases of melanoma (which originates in the melanocytes, or pigment cells) were diagnosed worldwide, and 57,000 of these cases resulted in death.

AI and machine learning techniques typically fall into a few different categories: convolutional neural networks, which find patterns in images; recurrent neural networks, which process sequential data; generative adversarial networks (GANs), which use multiple networks to verify the validity of data sets; long short-term memory models, which learn order dependence in recurrent neural networks; and hybrid systems that incorporate elements of each. These techniques have been used for industrial and manufacturing purposes, but they are being weighed as a potentially powerful force in medicine.

Specialized training of AI

At the University of California, Los Angeles (UCLA), the laboratory of Aydogan Ozcan has been working to perform the staining process virtually, with an emphasis on consistency while drawing from increasingly large groups of tissue images.

“Biopsy followed by H&E staining is the ground truth that diagnosticians use for various conditions,” said Ozcan, the Chancellor’s Professor at UCLA. “That is why virtual H&E that is faster, more consistent, and chemistry-free is very important.”

He said virtual staining can mirror immunohistochemical stains and other stains, deriving multiplexed stain information from the same tissue.

In a number of experiments, the team has used H&E staining, the most frequently used stain for diagnosis of numerous conditions, to train a GAN. In a GAN, two deep neural networks are used. One generates new data while the other works to determine its validity. The GAN was able to rapidly generate synthetic H&E images from label-free, unstained samples, mimicking the chemically stained versions of the same tissue [1]. Ozcan said experts were called upon to view the virtually stained images, and their feedback has been highly encouraging.
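The two-network arrangement described above is often written as a minimax game. The expression below is the standard textbook GAN objective, not necessarily the loss used by the UCLA team; the symbols G (generator), D (discriminator), x (a real image), and z (the generator's input) are notation introduced here for illustration. In a virtual staining setting, z would plausibly be the unstained image and x its chemically stained counterpart, but that mapping is an assumption.

```latex
\min_{G} \max_{D} \;
  \mathbb{E}_{x \sim p_{\text{data}}}\bigl[\log D(x)\bigr]
  + \mathbb{E}_{z \sim p_{z}}\bigl[\log\bigl(1 - D(G(z))\bigr)\bigr]
```

The discriminator D is trained to raise this value by telling real images from generated ones, while the generator G is trained to lower it by producing images that D accepts as real.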

Much of the lab’s work on skin imaging has revolved around the virtual staining of images created through reflectance confocal microscopy, with the aid of hand-held and benchtop VivaScope systems. Reflectance confocal microscopy has been cleared by the FDA for use in dermatology. Historically, however, a significant limitation of the technique has been that its images appear in grayscale, a format that diagnosticians are not traditionally trained to evaluate. With the aid of virtual staining, contrast can be established via an image stack in intact skin, providing dermatopathologists with biopsy-free images in the contrast that they are accustomed to.

Virtual staining also has implications beyond skin diagnostics, however, and has inherent advantages for real-time surgical guidance. Ozcan pointed to the process of frozen sections, in which an extracted tissue sample is frozen in a cryostat machine, stained, and examined under a microscope.

“[A] frozen section is preferred during a surgical operation since it is very fast; however, it reveals distorted images,” Ozcan said. “That is why standard H&E takes longer but produces better images. Virtual staining using AI can create H&E plus many other stains, multiplexed on the same tissue map, which is arguably one of the most important advantages of virtual tissue staining — i.e., [a] multiplexed panel of virtual stains that are nanoscopically registered to each other without any chemical labels or staining procedures that are normally destructive to tissue. Virtual staining preserves valuable tissue specimens, eliminating the need for secondary biopsies from the same patient.”

Casting a wide net

As these techniques have matured, many clinicians have noted that — particularly when it comes to dermatology — the quality of AI is proportional to the variety of the images that have been collected to train it.

In a data set descriptor article published earlier this year, a group centered at Memorial Sloan Kettering Cancer Center (MSKCC) recounted the compilation of more than 400,000 distinct skin lesion images from dermatologic centers in different parts of the world into the Skin Lesion Image Crops Extracted from 3D Total Body Photography (SLICE-3D) data set. Institutions involved in the International Skin Imaging Collaboration Kaggle challenge included MSKCC in New York; Hospital Clinic de Barcelona in Barcelona, Spain; the University of Queensland in Brisbane, Australia; Monash University at the Alfred Hospital in Melbourne, Australia; FNQH Cairns in Westcourt, Australia; the Medical University of Vienna; the University of Athens in Athens, Greece; the Melanoma Institute Australia in Sydney; and the University Hospital of Basel in Basel, Switzerland. The images were drawn from 3D total body photographs produced by the Vectra WB360 imaging system by cropping out a 15- × 15-mm field of view centered on each lesion that was >2.5 mm in diameter or was manually tagged by the physician during clinical examination [2].
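The cropping rule described above can be sketched in a few lines. This is a simplified illustration on a flat 2D image, not the data set authors' pipeline, which works from 3D total body photographs; the `Lesion` fields, the function names, and the `pixels_per_mm` calibration parameter are all hypothetical.

```python
from dataclasses import dataclass

FOV_MM = 15.0          # crop size stated in the article
MIN_DIAMETER_MM = 2.5  # automatic inclusion threshold stated in the article


@dataclass
class Lesion:
    row: int              # lesion center, pixel row (hypothetical field)
    col: int              # lesion center, pixel column (hypothetical field)
    diameter_mm: float
    tagged: bool = False  # manually tagged by the physician


def should_crop(lesion):
    """Keep lesions above the size threshold or tagged by the physician."""
    return lesion.diameter_mm > MIN_DIAMETER_MM or lesion.tagged


def crop_lesion(image, lesion, pixels_per_mm):
    """Return a roughly FOV_MM x FOV_MM crop centered on the lesion.

    `image` is a list of pixel rows; the crop is clipped at the image border.
    """
    half = round(FOV_MM * pixels_per_mm / 2)
    top = max(lesion.row - half, 0)
    bottom = min(lesion.row + half, len(image))
    left = max(lesion.col - half, 0)
    right = min(lesion.col + half, len(image[0]))
    return [row[left:right] for row in image[top:bottom]]
```

A real pipeline would also need to handle curved body surfaces and varying camera distance, which this sketch ignores.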

The data set descriptor authors and challenge organizers said that most existing skin lesion data sets were preselected for specific clinical purposes or short-term monitoring, which limits the usefulness of the resulting AI to clinicians specifically trained in interpreting such images. The idea behind the skin lesion data set, they said, was to provide a triage tool to determine who needed to visit a dermatologist in the first place and to prevent potentially unnecessary biopsies. Results were confirmed through the review of pathology reports.

Nicholas Kurtansky, a senior data analyst at MSKCC, said that most of the data available before this study was assembled at research institutions, potentially excluding a large swath of the population.

“Many skin conditions, including precancerous lesions, are handled at local dermatology offices or secondary medical institutes, not cancer centers,” he said. “Collecting data from these centers — if available — is very challenging; therefore, this part of the population is unfortunately excluded or neglected. Similarly, access to tertiary care at large institutes is limited to certain socioeconomical groups, again contributing to the lack of diversity. AI tools should be sufficiently trained on all subgroups of patients and diseases they may encounter.”

Kivanc Kose, an assistant lab member at MSKCC who was also involved in the skin lesion image article, said that a long-term goal for builders of algorithms in dermatology, and in diagnostics generally, is to establish interpretability and standardization in their models. Neural networks, while efficient at finding correlations between samples, can also function as something of a “black box” and draw connections between features that may or may not be relevant in order to improve a perceived result.

He said that data that informs algorithms must be updated regularly and scrutinized by the research community for any inherent biases.

“If a model learns to attribute signal from the nongeneralizable — spurious features of a training data set, such as camera properties, or pen or paper markers on skin — then it won’t be as reliable in broader applications where the settings are different,” he said. “With the availability of larger amounts of diverse data, in many cases unlabeled, and new AI model training techniques, i.e., self-supervised learning-based pretraining, researchers have started to be able to train models that enable identification and mitigation of biases and spurious correlations in data. This enables the development of more generalizable models, called foundational models, that are capable of adapting to various tasks beyond what they are trained for.”

AI in the real world

Autoderm is a California-based company that has studied the utility of using machine learning to differentiate skin lesions. The company’s AI models are based on both deep learning and three neural networks commonly used in computer vision. Application programming interfaces allow them to be integrated into companies’ existing data collection methods.

In a recent study, the Autoderm system assessed 100 images of skin lesions taken via a smartphone in 2021 at facilities in and around Catalonia, Spain (Figures 1 and 2). For each image, it established a ranking of the five most likely skin conditions associated with the lesion. The specific patients and setting were chosen to determine the system’s viability among primary care doctors, not only dermatologists, said Josep Vidal-Alaball, head of the Central Catalonia Primary Care Innovation and Research Unit at Institut Catala de la Salut. He said that these clinicians regularly attach their dermatoscopes to their smartphones for telemedicine referrals as part of their normal protocol.
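A ranking of the five most likely conditions can be produced from any classifier that assigns a score to each condition. The sketch below is a generic illustration, not Autoderm's implementation: it assumes the model outputs one raw score per condition and that a softmax turns those scores into probabilities. The function names are hypothetical.

```python
import math


def softmax(scores):
    """Convert raw scores into probabilities that sum to 1."""
    peak = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def rank_top_k(labels, scores, k=5):
    """Return the k most probable (label, probability) pairs, best first."""
    probs = softmax(scores)
    ranked = sorted(zip(labels, probs), key=lambda pair: pair[1], reverse=True)
    return ranked[:k]
```

Presenting a short ranked list rather than a single answer leaves the final judgment with the clinician, which matches the decision-support role described in the study.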

Figure 1. Anna Escalé-Besa, a clinician at Institut Catala de la Salut, examines a patient’s skin with a dermatoscope. Courtesy of Institut Catala de la Salut.

Figure 2. Anna Escalé-Besa analyzes lesion information by feeding it into the AI-powered Autoderm system. Courtesy of Institut Catala de la Salut.

“In the study, the general practitioner first evaluated the patient’s skin lesion. Then, an image of the lesion was captured using a smartphone and fed into the AI system for analysis,” said Vidal-Alaball. “Both the doctor and the AI system attempted to diagnose the lesion. Finally, the diagnosis was confirmed by dermatologists, which served as the gold standard.”

He acknowledged that the overall accuracy of the machine learning model was lower than that of trained clinicians. However, while the model used in the study differentiated between 44 types of lesions, the algorithm has recently been adapted to include many more. The study also determined that 92% of clinicians found the system to be a useful tool and 60% said it aided in their final diagnosis [3].

“At the time [that] we conducted the study in 2021 to 2022, there were only a few studies that had been carried out in real-world primary care clinical settings,” Vidal-Alaball said. “Most AI mechanisms like the one we used had primarily been applied in academic and research scenarios and in hospital settings rather than in everyday primary care practice.”

Further steps to bring such AI models into real-world use would include multicenter studies, the successful integration into health care professionals’ daily routines, regulatory considerations, and the use of diverse populations to inform the data set, he said.

AI in hand

The potential for AI assistance in the diagnosis of melanoma, the deadliest of skin cancers, is gaining traction in Europe. Earlier this year, a successful bridge financing round was announced for Swedish company AI Medical Technology, which has developed the Dermalyser, a mobile application designed for clinical support.

The application can be used with a dermatoscope mounted in front of a smartphone camera. When a picture of a patient’s skin lesion is taken, the AI analyzes its contents (Figure 3).

Figure 3. The Dermalyser uses a smartphone attached to a dermatoscope to detect potential melanoma. Courtesy of AI Medical Technology.

“I started calling general practitioners, dermatologists, and other AI companies and they said there was a lot of potential here,” said Christoffer Ekström, CEO of AI Medical Technology.

He said the mobile app was intended to fit into a clinician’s normal workflow. The analysis is predicated upon key performance indicators, which align results with specific details embedded in the AI algorithm. Results could inform clinicians about whether an excision is necessary, and are integrated into equipment on which medical professionals are already trained.

A study published earlier this year described a collaboration among 36 primary care centers in Sweden to determine the viability of the Dermalyser for diagnosing melanoma. Images of patient lesions were taken with a smartphone connected to a dermatoscope, and the lesions also underwent traditional lab investigation at the same time. A total of 253 skin lesions were examined, and 21 proved to be melanomas. Results were summarized by the area under the receiver operating characteristic curve, which measures how well a classifier separates positive from negative instances. The area under the curve of 0.96 showed substantial diagnostic promise (1 is considered perfect performance) [4].
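One way to read the area under the receiver operating characteristic curve is as the probability that a randomly chosen positive case receives a higher score than a randomly chosen negative case. The sketch below computes it that way by comparing every positive-negative pair. It is a generic illustration with a hypothetical function name, not the analysis code used in the study.

```python
def roc_auc(labels, scores):
    """Area under the ROC curve via pairwise comparison.

    `labels` holds 1 for positive cases (e.g., melanoma) and 0 for negative
    cases; `scores` holds the classifier's outputs. Ties count as half a win.
    """
    positives = [s for y, s in zip(labels, scores) if y == 1]
    negatives = [s for y, s in zip(labels, scores) if y == 0]
    if not positives or not negatives:
        raise ValueError("need at least one positive and one negative case")
    wins = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(positives) * len(negatives))
```

A value of 0.5 corresponds to chance-level ranking and 1 to perfect separation. With 21 melanomas among 253 lesions, this pairwise view would compare 21 × 232 = 4,872 melanoma and non-melanoma pairs.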

Ekström acknowledged that while clinical validation has been shown, a larger population will need to be examined. Further studies will target the thickness of melanomas. To clear regulatory hurdles, he is working with agencies in both Europe and the U.S. to bring AI capabilities into clinical settings. He said that AI Medical Technology is teaming up with global partners to expand its reach commercially.

AI at the front and back end

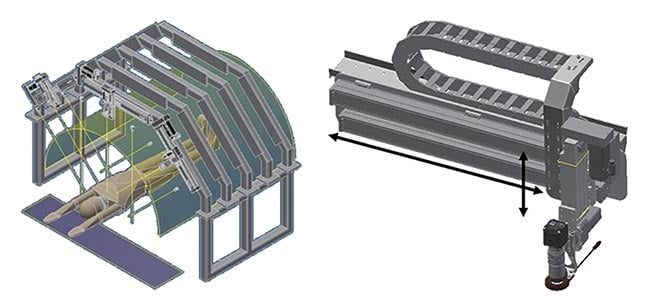

In dermatology, lesions must be considered in relation to overall skin composition. That is the goal of the Intelligent Total Body Scanner for Early Detection of Melanoma project, a collaboration of several institutions in Europe: to develop an AI diagnostic platform that monitors changes in lesions. These changes are captured via numerous high-resolution cameras equipped with liquid lenses, which are composed of membranes immersed in liquid (Figure 4). The AI platform integrates this data with medical records, genomics data, and other resources to analyze every mole on the body.

Figure 4. The camera module constructed for the Intelligent Total Body Scanner for Early Detection of Melanoma project. Courtesy of Ansys.

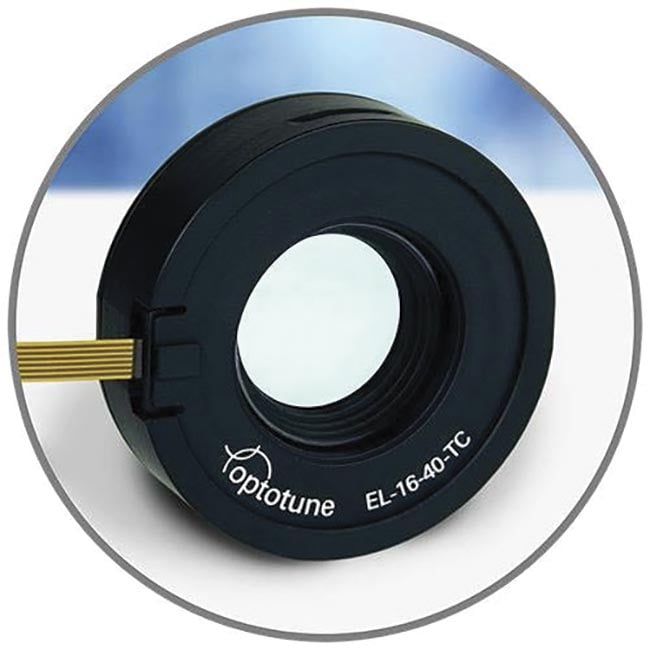

The cameras are produced by Optotune, a spinoff of ETH Zürich, and were designed with the Ansys engineering simulation software Zemax OpticStudio, which the designers used to measure the effects of a number of variables on the lenses before production.

The versatile lenses created for the Intelligent Total Body Scanner for Early Detection of Melanoma project show that AI has value not only in the data collection and analysis phase but also in the design of an optical system to capture that information. While he was not directly involved in the European project, David Vega, lead research and development engineer for Ansys, said he often sees new AI applications that aim to incorporate various requirements into the design of systems for biomedical imaging. In some cases, these might involve not only liquid lenses but also metalenses that are <1 mm and operate at a particular wavelength. Metalenses are still challenging to design with classical optical design techniques, and therefore, optical design with AI is being heavily explored in this area.

In the case of skin cancer, which might be present only in the subcutaneous tissue of a patient, different skin types can be modeled during the system design. The simulation can also model how fluids can affect or distort an image.

“Correctly modeling the multi-physics involved can prevent failure of the imaging system caused by nonaccounted physical phenomena and make it much more robust and reliable,” he said.

For the Intelligent Total Body Scanner project, Mark Ventura, cofounder and vice president of business development at Optotune, said the liquid lenses, such as the one seen in Figure 5 — which allow for rapid focus, similar to how an eye functions — are combined with tilting mirrors to make whole-body scans available, with a variety of optical pathlengths and an enhanced field of view.

Figure 5. An example of a liquid lens with thermal compensation. Courtesy of Optotune.

“The ultimate goal is to get the best possible image of a patient’s skin,” Ventura said. “The threshold for diagnosis is usually around 20 µm. Traditionally, hand-held cameras are used to get images of lesions that are plugged into AI, but in this case, we don’t just want images of specific areas; we want to scan whole bodies.”

He said that the project has been motivated, in part, by trends toward preventative medicine; in the future, such scans may begin in patients when they reach their 40s. These examinations could occur in treatment centers that focus on the holistic health of the patient and are increasingly common in nations such as Sweden.

References

1. J. Li et al. (2021). Biopsy-free in vivo virtual histology of skin using deep learning. Light Sci Appl, Vol. 10, No. 1, p. 233.

2. N.R. Kurtansky et al. (2024). The SLICE-3D dataset: 400,000 skin lesion image crops extracted from 3D TBP for skin cancer detection. Sci Data, Vol. 11, No. 1, p. 884.

3. A. Escalé-Besa et al. (2023). Exploring the potential of artificial intelligence in improving skin lesion diagnosis in primary care. Sci Rep, Vol. 13, No. 1, p. 4293.

4. P. Papachristou et al. (2024). Evaluation of an artificial intelligence-based decision support for the detection of cutaneous melanoma in primary care: a prospective real-life clinical trial. Br J Dermatol, Vol. 191, No. 1, pp. 125-133.